Why does that top-ranking podcast sound like it was recorded in a million-dollar studio—when it was actually done in someone’s spare bedroom? How are independent musicians producing tracks that rival big-label releases without touching expensive hardware? The answer isn't talent alone—it's AI. And it's quietly flipping the audio industry on its head.

The rise of AI Audio Enhancers isn’t just an upgrade—it’s a disruption. Valued at $3.25 billion in 2024 and expected to reach $12.5 billion by 2032, this rapidly growing market reflects more than just technological advancement—it signals a power shift. Traditional audio engineers and high-end studios are no longer the gatekeepers of professional sound. With an AI-powered tool, anyone with a laptop and a mic can achieve audio quality once reserved for industry elites. Critics argue it’s replacing human touch with algorithms—but for millions of creators, it’s a long-overdue liberation. The era of audio elitism is ending—and AI is leading the revolution.

How Has AI Audio Enhancer Evolved Throughout History?

The Early Days: From Mechanical Speech to Digital Dreams

The journey of the AI audio enhancer didn't begin with modern neural networks; it has roots stretching back more than 80 years. In 1939, Bell Labs introduced the Voder, widely regarded as the first electronic speech synthesizer. Though primitive by today's standards and played by a human operator at a keyboard, it was an early serious attempt to make machines produce human-like sounds.

The real breakthrough came in 1952, when Bell Labs developed Audrey, a system that could recognize the spoken digits zero through nine. Though it worked only for individual speakers and required clear pronunciation, Audrey laid the groundwork for the speech technology behind modern AI audio enhancement systems.

The 1970s and 1980s saw the development of early filtering techniques using noise floor estimation. These systems could create filters based on audio segments containing only noise, significantly improving perceived audio quality. However, they struggled with sudden sounds like footsteps or door slams—limitations that would persist for decades.
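The noise-floor approach described above can be illustrated with a minimal spectral-subtraction sketch. This is an assumption about the general family of techniques, not a reconstruction of any specific historical system; the function name `spectral_subtract` and the parameters `frame` and `floor` are hypothetical, and the non-overlapping frames are chosen for brevity rather than quality.

```python
import numpy as np

def spectral_subtract(signal, noise_only, frame=256, floor=0.05):
    """Subtract an estimated noise magnitude spectrum from each frame of `signal`."""
    signal = np.asarray(signal, dtype=float)
    noise_only = np.asarray(noise_only, dtype=float)

    # Estimate the noise floor: average magnitude spectrum of the noise-only audio.
    n_frames = len(noise_only) // frame
    if n_frames == 0:
        raise ValueError("noise_only must contain at least one full frame")
    noise_frames = noise_only[: n_frames * frame].reshape(n_frames, frame)
    noise_mag = np.abs(np.fft.rfft(noise_frames, axis=1)).mean(axis=0)

    # Samples past the last full frame are left as zeros in this sketch.
    out = np.zeros(len(signal))
    usable = (len(signal) // frame) * frame
    for start in range(0, usable, frame):
        spec = np.fft.rfft(signal[start:start + frame])
        mag = np.abs(spec)
        phase = np.angle(spec)
        # Remove the estimated noise, but keep a small fraction of the
        # original magnitude so no bin is pushed below zero.
        clean_mag = np.maximum(mag - noise_mag, floor * mag)
        out[start:start + frame] = np.fft.irfft(clean_mag * np.exp(1j * phase), n=frame)
    return out
```

Because the noise estimate is a single averaged spectrum, the sketch assumes the noise stays roughly constant over time. A sudden sound such as a door slam does not match that estimate, which is consistent with the limitation noted above.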

The Digital Revolution: DAWs and Democratization

The early 2000s marked a pivotal moment with the rise of Digital Audio Workstations (DAWs). These tools moved studio-grade capabilities from professional facilities into homes and bedrooms worldwide. Suddenly, anyone with a computer could access powerful audio editing tools, a democratization that set the stage for today's AI audio enhancement revolution.

Companies like iZotope began developing sophisticated audio repair software during this period. Their RX series, which started as manual audio restoration tools, would eventually incorporate AI-powered modules that could automatically identify and fix audio problems. Similarly, Adobe began integrating AI features into their audio software, culminating in tools like Adobe Audition's AI noise reduction capabilities.

The Neural Network Era: Intelligence Meets Audio

The 2010s brought artificial intelligence and machine learning to audio processing. Neural networks enabled smarter solutions that could track noise statistics more accurately and handle abrupt noises that traditional filtering couldn't manage. Products such as Accentize VoiceGate put this approach into practice for noise reduction.

A major milestone came in 2019 when Adobe demonstrated Project Awesome Audio at their MAX conference. This early version of AI-powered audio enhancement showed the potential for neural networks to transform poor-quality recordings into studio-quality audio automatically. The technology behind this demonstration would eventually become Adobe's Enhance Speech feature, now available in Premiere Pro and Adobe Podcast.

Modern AI Audio Enhancer: The Current Landscape

Today's AI Audio Enhancer tools represent the culmination of decades of innovation. Companies like ElevenLabs, which raised $180 million in Series C funding at a $3.3 billion valuation, are pushing the boundaries of what's possible with AI-powered audio processing. Meanwhile, Music.AI raised $40 million in Series A funding, demonstrating the massive investor interest in this space.

The current generation of AI audio enhancement tools can perform complex tasks that would have been impossible just a few years ago. They can separate individual speakers in group conversations (with claimed accuracy of up to 99%), remove background noise while preserving natural voice characteristics, and even translate audio content across multiple languages while maintaining emotional tone and speaking style.

What Are the Key Advantages of AI Audio Enhancer Over Traditional Methods?

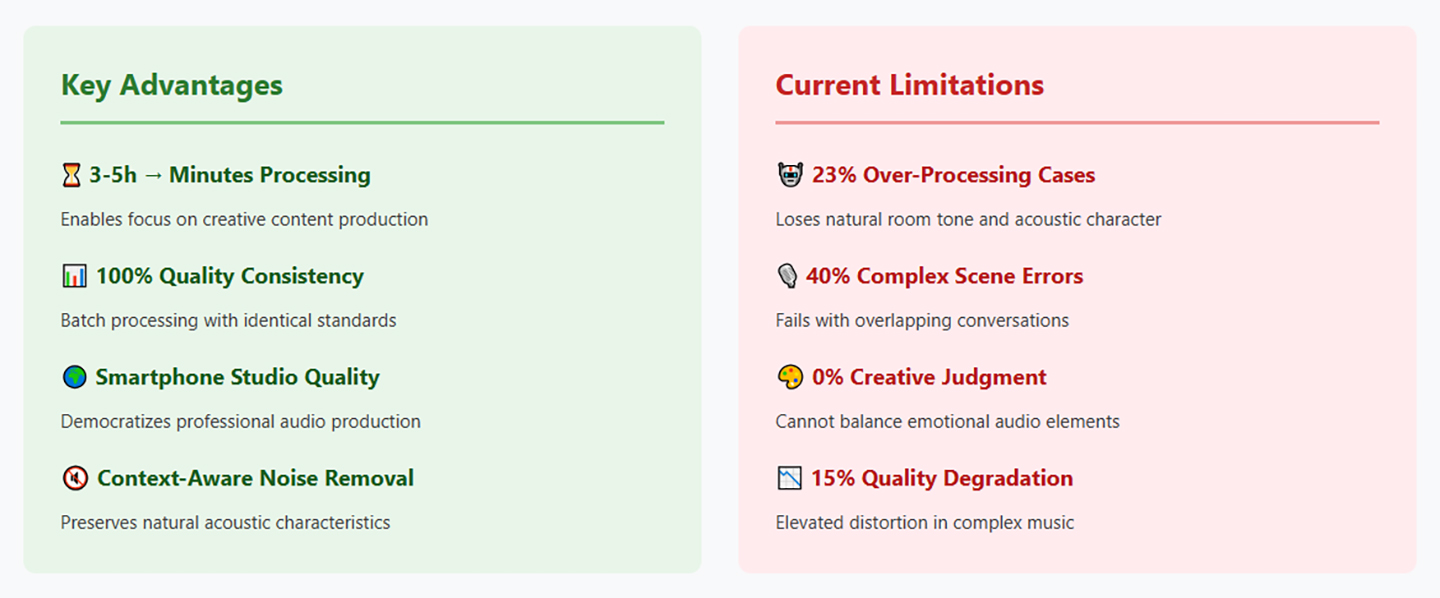

Time Efficiency: From Hours to Minutes

One of the most compelling advantages of AI Audio Enhancer technology is its incredible time efficiency. Traditional audio editing often requires 3-5 hours of post-production work for every hour of recorded content. With AI-powered tools, this ratio can be reduced dramatically, sometimes to just minutes of processing time.

Professional audio engineers who once spent entire days manually removing background noise, adjusting levels, and applying equalization can now accomplish the same tasks in a fraction of the time. This efficiency isn't just about speed—it's about allowing creators to focus on what matters most: their content and creativity.

Consistency at Scale

Human audio engineers, no matter how skilled, inevitably produce slightly different results from session to session. AI audio enhancement systems, by contrast, can apply the same processing across thousands of audio files. This consistency is particularly valuable for podcasters, content creators, and businesses that need to process large volumes of audio content regularly.

The ability to process multiple files simultaneously with identical quality standards has revolutionized how organizations approach audio production. Educational institutions can now enhance hundreds of lecture recordings with consistent quality, while media companies can standardize their audio output across different shows and platforms.
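One simple, non-AI way to picture "identical quality standards" across many files is level normalization. The sketch below scales each recording to the same RMS level; the function name `normalize_rms` and the -20 dBFS target are assumptions for illustration, and commercial tools are more likely to use perceptual loudness measures than plain RMS.

```python
import numpy as np

def normalize_rms(samples, target_dbfs=-20.0):
    """Scale `samples` so that their RMS level matches `target_dbfs`."""
    samples = np.asarray(samples, dtype=float)
    rms = np.sqrt(np.mean(np.square(samples)))
    if rms == 0:
        # Silence has no level to match; return it unchanged.
        return samples.copy()
    # Convert the decibel target to a linear amplitude (full scale = 1.0).
    target = 10 ** (target_dbfs / 20)
    return samples * (target / rms)
```

Applying the same function to every file in a batch gives each one the same average level, whatever level it was recorded at.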

Accessibility and Democratization

Perhaps the most significant advantage of AI Audio Enhancer technology is how it has democratized professional-grade audio production. Previously, achieving studio-quality sound required expensive equipment, acoustically treated rooms, and years of training. Now, anyone with a smartphone and internet connection can access AI-powered audio enhancement tools.

This democratization has been particularly transformative for independent creators, small businesses, and educational institutions. Podcasters recording in their bedrooms can achieve sound quality that rivals professional radio stations. Musicians in developing countries can produce tracks that compete with major label releases. The barriers to entry for high-quality audio production have never been lower.

Intelligent Noise Reduction

Traditional noise reduction techniques often introduced artifacts or degraded audio quality. AI audio enhancement systems, however, can distinguish between desired sound and unwanted noise far more reliably. They can remove constant background hums, reduce room reverb, and minimize clicks and pops while preserving the natural characteristics of the primary audio.

This intelligent processing extends beyond simple noise removal. Modern AI systems can identify and separate different types of audio content—speech, music, ambient sound—and apply appropriate processing to each element. This sophisticated understanding of audio content allows for more nuanced and effective enhancement.

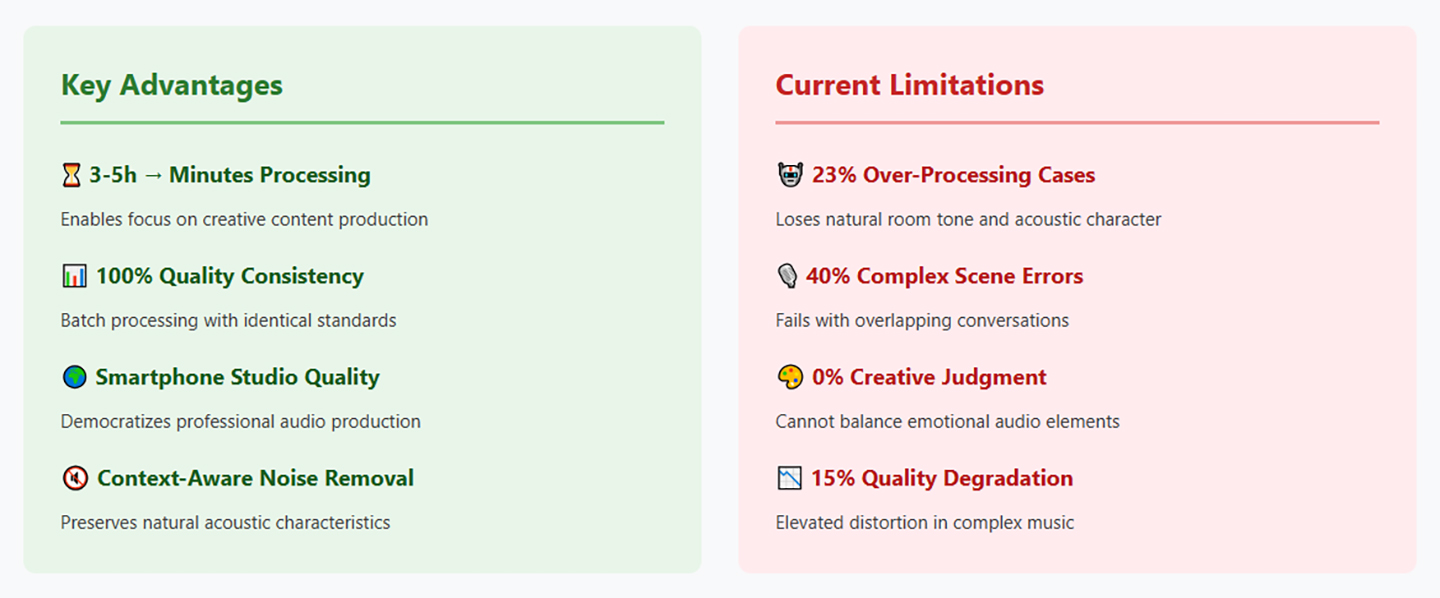

What Are the Current Limitations of AI Audio Enhancer?

The Over-Processing Trap

While AI Audio Enhancer tools are incredibly powerful, they can sometimes be too aggressive in their processing. Inexperienced users might apply too much enhancement, resulting in audio that sounds artificial or "over-processed". This is particularly problematic when AI systems remove too much of the natural room tone or acoustic character that gives audio its organic feel.

The challenge lies in finding the right balance. AI systems are trained to optimize for certain metrics—clarity, noise reduction, loudness—but they may not always preserve the subtle characteristics that make audio feel natural and authentic. This is why human oversight remains crucial, even with the most advanced AI tools.

Context and Complexity Challenges

Despite their sophistication, AI audio enhancement systems still struggle with complex audio scenarios. They may have difficulty with chaotic group discussions and cross-talk, where several speakers talk at once. In these situations, the AI might make incorrect decisions about which audio elements to enhance and which to suppress.

This limitation becomes particularly apparent in scenarios like live event recordings, where multiple microphones capture overlapping sounds, or in documentary-style interviews where natural conversation flow includes interruptions and cross-talk. Human audio engineers still excel in these complex situations where contextual understanding is crucial.

Creative and Aesthetic Judgment

While AI excels at technical audio processing, it cannot replicate the creative and aesthetic judgment that human audio engineers bring to their work. The decision of how much warmth to add to a voice, whether to preserve a slight room reverb for character, or how to balance multiple elements for emotional impact—these remain uniquely human skills.

Research comparing AI-based mastering to human-engineered approaches has shown that while AI can process audio quickly and consistently, human engineers still surpass AI systems in preserving dynamic range, minimizing distortion, and maintaining sonic clarity, particularly for complex genres like classical and jazz.

Quality Degradation in Complex Scenarios

Studies have revealed that AI Audio Enhancer systems can sometimes introduce artifacts or quality degradation, particularly in complex audio scenarios. AI-based mastering has been shown to manifest elevated distortion levels relative to human-engineered approaches, especially when dealing with intricate musical compositions or challenging acoustic environments.

This limitation is particularly relevant for professional music production, where the subtle nuances of sound quality can make the difference between a good and great recording. While AI tools continue to improve, they still require human oversight to ensure optimal results in critical applications.

How Is AI Audio Enhancer Transforming Different Industries?

Music Production: From Bedroom to Billboard

The music industry has been one of the most dramatically affected by AI Audio Enhancer technology. Independent artists who once couldn't afford professional mastering services can now achieve radio-ready sound quality using AI-powered tools. This democratization has led to an explosion of high-quality music production from bedroom studios worldwide.

However, this transformation isn't without its challenges. The music industry is grappling with concerns about job displacement, as AI tools can now perform many tasks traditionally handled by human audio engineers. Session musicians and sound engineers are finding their traditional roles evolving as AI systems become capable of replicating many of their functions.

The positive impact is equally significant. AI-powered audio enhancement has enabled the restoration of countless vintage recordings, bringing new life to archival material that would otherwise be lost to time. Record labels are using AI to remaster their catalogs, making classic albums sound better than ever on modern playback systems.

Podcasting: The Great Equalizer

The podcasting industry has perhaps benefited most from AI Audio Enhancer technology. Digital audio platforms are crowded (an estimated 120,000 music tracks are uploaded to them every day), and podcasters face a similar fight for attention, so they need tools that help them stand out. AI-powered audio enhancement has become essential for podcasters who want to compete with professionally produced content.

Tools like Podsqueeze, Cleanvoice AI, and Adobe Podcast have revolutionized how podcasters approach audio production. These platforms can automatically remove background noise, eliminate filler words, normalize audio levels, and even generate transcripts—tasks that would previously require hours of manual work.

The transformation has been particularly beneficial for educational and corporate podcasting. Organizations can now produce high-quality audio content without hiring professional audio engineers, making podcasting accessible to businesses, schools, and non-profit organizations that previously couldn't afford professional audio production.

Film and Television: Enhanced Storytelling

The film and television industry has embraced AI audio enhancement for dialogue cleanup, noise reduction, and sound design. AI-powered tools can now isolate dialogue from noisy environments, remove unwanted background sounds, and enhance speech clarity. These capabilities are particularly valuable for documentary filmmaking and on-location recording.

Post-production teams are using AI to accelerate their workflows, processing rough cuts with AI enhancement before applying final human polish. This hybrid approach allows for faster turnaround times while maintaining the creative control that human engineers provide.

The technology has also enabled new creative possibilities. AI can now generate realistic voice synthesis for characters, create immersive soundscapes, and even assist with foreign language dubbing by maintaining the original actor's vocal characteristics while translating the dialogue.

Telecommunications: Clearer Connections

The telecommunications industry has integrated AI Audio Enhancer technology to improve call quality and reduce background noise in voice communications. Modern smartphone systems use spatially informed neural networks to separate voice from background noise and echo, delivering high-quality conversations even in challenging acoustic environments.

This technology has become particularly important as remote work has increased demand for clear, professional-sounding voice communication. AI-powered noise cancellation and speech enhancement have become standard features in video conferencing platforms, helping maintain communication quality regardless of users' recording environments.

Healthcare: Telemedicine and Beyond

The healthcare industry has found innovative applications for AI Audio Enhancer technology, particularly in telemedicine. AI-powered speech enhancement ensures high-quality voice communication between healthcare providers and patients, even in noisy or remote environments. This capability has been crucial for expanding access to healthcare services, particularly in underserved areas.

AI audio enhancement is also being used to improve the accuracy of medical transcription, helping healthcare providers document patient interactions more effectively. The technology can enhance audio quality in medical recordings, making it easier for transcription services to produce accurate documentation.

What Ethical Concerns Does AI Audio Enhancer Raise?

Copyright and Intellectual Property Challenges

One of the most significant ethical challenges facing AI Audio Enhancer technology relates to copyright and intellectual property rights. Many AI systems are trained on vast datasets that include copyrighted material, often without explicit permission from the original creators. This practice has led to high-profile lawsuits, including cases where major record labels have sued AI companies for allegedly using copyrighted music without authorization.

The core issue is whether using copyrighted works to train AI models constitutes fair use or copyright infringement. Supporters argue that this training should be protected under fair use, citing precedents like the Google Books project. However, critics point out that the commercial nature of AI development and the extensive data scraping involved complicate these fair use arguments.

Voice Cloning and Deepfake Concerns

The ability of AI audio systems to clone voices has raised serious concerns about fraud and misinformation. Voice cloning technology can now create realistic synthetic speech from just a few seconds of audio, enabling bad actors to impersonate others for fraudulent purposes.

High-profile cases have demonstrated the potential for abuse. Cybercriminals have used voice clones to trick employees, who believed they were speaking to company executives, into transferring money. The technology has also been used to create fake celebrity endorsements and manipulate public opinion.

The implications extend beyond individual fraud to broader concerns about information integrity. As voice cloning technology becomes more sophisticated and accessible, distinguishing between authentic and synthetic audio becomes increasingly difficult, potentially undermining trust in audio evidence and communications.

Job Displacement and Economic Impact

The automation capabilities of AI Audio Enhancer technology have raised concerns about job displacement in the audio industry. Traditional roles such as sound engineers, mastering engineers, and audio technicians may be at risk as AI systems become capable of performing many tasks previously requiring human expertise.

The International Monetary Fund has estimated that almost 40% of global employment is exposed to AI, with some roles at risk of replacement. In the audio industry, this transformation is already underway. Session musicians, audio engineers, and other audio professionals are finding their traditional roles evolving as AI tools become more capable.

However, the impact isn't uniformly negative. The technology is also creating new opportunities in AI management, data analysis, and human-AI collaborative roles. The key challenge is ensuring that workers have access to retraining and adaptation opportunities as the industry evolves.

Authenticity and Artistic Integrity

The use of AI Audio Enhancer technology raises fundamental questions about authenticity and artistic integrity. When AI systems can generate music, enhance vocals, or create entirely synthetic audio content, the line between human and machine creativity becomes blurred.

This challenge is particularly acute in the music industry, where questions about the ownership and attribution of AI-generated content remain unresolved. If an AI system creates a melody based on patterns learned from thousands of existing songs, who owns the rights to that melody? How do we ensure that original artists receive appropriate credit and compensation?

The risk of homogenization is another concern. As AI systems rely on existing data to generate new content, there's a possibility that AI-enhanced audio might become formulaic or derivative, potentially stifling creative innovation and diversity in audio content.

How Can We Responsibly Harness the Power of AI Audio Enhancer?

Addressing Industry Disruption Through Adaptation

The key to managing the disruptive impact of AI Audio Enhancer technology lies in proactive adaptation rather than resistance. Industries affected by AI audio enhancement need to develop strategies that embrace the technology while protecting workers and maintaining quality standards.

For the music industry, this means creating new roles that combine human creativity with AI capabilities. Instead of replacing sound engineers, AI tools can augment their abilities, allowing them to focus on creative and strategic aspects of audio production while letting AI handle routine technical tasks. This hybrid approach preserves human expertise while leveraging AI efficiency.

Educational institutions and industry organizations should collaborate to develop training programs that equip audio professionals with the skills needed to work alongside AI systems. This includes understanding how to prompt AI tools effectively, knowing when human intervention is necessary, and developing new creative applications for AI-enhanced audio.

Establishing Ethical Guidelines and Standards

The audio industry needs to establish clear ethical guidelines for the use of AI audio enhancement technology. This includes developing standards for consent when using voice data, ensuring transparency about AI involvement in audio production, and creating mechanisms for fair compensation to original creators whose work contributes to AI training datasets.

Industry associations should work with policymakers to develop regulations that balance innovation with protection of creators' rights. This might include requirements for disclosure when AI enhancement is used, standards for obtaining consent for voice cloning, and mechanisms for compensating artists whose work is used in AI training.

Companies developing AI audio enhancement tools should implement safeguards against misuse, including voice verification systems, usage monitoring, and clear policies prohibiting fraudulent applications. The goal should be to enable beneficial uses of the technology while preventing harmful applications.

Fostering Human-AI Collaboration

The future of AI Audio Enhancer technology lies not in replacing human creativity but in augmenting it. The most successful applications will be those that combine AI efficiency with human judgment, creativity, and emotional intelligence.

Audio professionals should view AI as a powerful tool that can handle routine tasks and provide new creative possibilities, rather than as a threat to their livelihoods. This perspective shift requires education and training, but it also opens up exciting new possibilities for creative expression.

For content creators, the key is learning to use AI tools effectively while maintaining their unique voice and creative vision. This might involve using AI for technical enhancement while preserving human input for creative decisions, or finding innovative ways to combine AI capabilities with human creativity.

Ensuring Accessibility and Fairness

As AI Audio Enhancer technology becomes more prevalent, it's crucial to ensure that its benefits are accessible to creators from all backgrounds and economic circumstances. This means developing affordable or free AI audio enhancement tools, providing education and training resources, and preventing the technology from exacerbating existing inequalities in the audio industry.

The democratizing potential of AI audio enhancement should be preserved and expanded. Independent creators, small businesses, and educational institutions should have access to the same quality of audio enhancement tools as major corporations and professional studios.

Building Transparency and Trust

To address concerns about authenticity and manipulation, the industry should develop standards for transparency in AI audio enhancement. This might include watermarking AI-enhanced audio, providing clear disclosure when AI tools are used, and developing detection technologies that can identify AI-generated or enhanced content.

Building trust requires ongoing dialogue between technology developers, content creators, and consumers. Regular discussion about the capabilities and limitations of AI audio enhancement can help manage expectations and ensure that the technology is used responsibly.

FAQs

Q: What exactly is an AI Audio Enhancer?

A: An AI Audio Enhancer is a software tool that uses artificial intelligence and machine learning algorithms to automatically improve audio quality. It can reduce background noise, enhance speech clarity, normalize volume levels, and apply various audio processing techniques without requiring manual adjustment of multiple parameters.

Q: How does AI Audio Enhancer differ from traditional audio editing software?

A: Traditional audio editing requires manual adjustment of numerous parameters and extensive technical knowledge. AI Audio Enhancer tools can analyze audio input automatically and make intelligent decisions about processing, making professional-grade audio enhancement accessible to users without extensive technical expertise.

Q: Can AI Audio Enhancer completely replace human audio engineers?

A: While AI Audio Enhancer tools are highly capable, they cannot completely replace human audio engineers. AI excels at technical processing and routine tasks, but human engineers still provide crucial creative judgment, aesthetic decision-making, and the ability to handle complex scenarios that require contextual understanding.

Q: What industries benefit most from AI Audio Enhancer technology?

A: The technology benefits multiple industries including music production, podcasting, film and television, telecommunications, healthcare (telemedicine), gaming, and education. Any industry that relies on clear, high-quality audio communication can benefit from AI enhancement tools.

Q: Are there any risks associated with AI Audio Enhancer technology?

A: Yes, there are several risks including potential job displacement in the audio industry, concerns about voice cloning and deepfakes, copyright issues related to AI training data, and the risk of over-processing audio content. These challenges require careful consideration and responsible implementation.

Q: How can content creators get started with AI Audio Enhancer tools?

A: Content creators can start by identifying their specific audio needs, researching available AI enhancement tools, and beginning with user-friendly options that offer preset configurations. Many platforms offer free trials or basic free versions, allowing creators to experiment before committing to paid solutions.

Conclusion

The AI Audio Enhancer revolution represents one of the most significant technological shifts in the audio industry's history. From its humble beginnings with Bell Labs' mechanical speech synthesis to today's sophisticated neural networks, AI has fundamentally transformed how we create, process, and experience audio content.

The numbers tell a compelling story: a market growing from $3.25 billion in 2024 to a projected $12.5 billion by 2032, with an 18.34% compound annual growth rate. But beyond the statistics lies a deeper transformation—the democratization of professional-grade audio production and the emergence of new creative possibilities.
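The growth rate follows directly from the two market figures. As a quick sanity check, the sketch below computes the compound annual growth rate from the quoted start and end values; the helper name `cagr` is hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1

# Market size in billions of dollars, 2024 to 2032, as quoted in the article.
rate = cagr(3.25, 12.5, 2032 - 2024)
print(f"{rate:.2%}")
```

The result comes out at roughly 18.3% per year, in line with the quoted rate.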

We've seen how AI audio enhancement technology offers unprecedented advantages in time efficiency, consistency, and accessibility. These tools have leveled the playing field, allowing independent creators to compete with professional studios and enabling organizations of all sizes to produce high-quality audio content.

However, we must also acknowledge the challenges and limitations. From the risk of over-processing to concerns about job displacement and ethical issues surrounding copyright and voice cloning, the AI audio enhancement revolution brings both opportunities and responsibilities.

The path forward requires thoughtful balance. We need to embrace the innovation and efficiency that AI Audio Enhancer technology provides while preserving the human creativity, judgment, and authenticity that make audio content truly compelling. This means developing new skills, creating ethical guidelines, and fostering collaboration between human creators and AI systems.

The future belongs to those who can effectively combine the power of AI with human creativity. Whether you're a podcaster, musician, filmmaker, or content creator, understanding and responsibly using AI Audio Enhancer technology will be crucial for success in the evolving audio landscape.

As we move forward, the question isn't whether AI will transform the audio industry—it already has. The question is how we'll shape that transformation to benefit creators, consumers, and society as a whole. By approaching this technology with both enthusiasm and responsibility, we can ensure that the AI audio enhancement revolution enhances rather than diminishes the rich tapestry of human creative expression.

The sound of the future is being written today, and it's a collaboration between human creativity and artificial intelligence. For those ready to embrace this partnership, the possibilities are limitless.
