Imagine this: you’re a quality assurance engineer who just watched an AI testing tool generate 10,000 test cases while you wrote only 50. Your first reaction might be wonder, followed by a vague fear. Welcome to the brave new world of AI testing, where machines are increasingly taking over one of the most critical functions in software development, and where we may be celebrating too early.

The AI testing revolution isn’t just knocking on our door; it’s already moved in and rearranged our furniture. Having watched AI spread rapidly through quality assurance, we’ve seen AI testing grow from a promising concept into a multi-billion-dollar industry that is reshaping the way we approach software quality. But there’s an uncomfortable truth few want to admit: our rush to embrace AI testing may be causing more problems than it solves.

How Did We Get Here? The Evolution of AI Testing

The Humble Beginnings: When AI Testing Was Just a Dream

Let me take you back to the early 2000s, when AI Testing was nothing more than a theoretical concept discussed in research papers. Back then, testing was a predominantly manual process, with QA teams spending countless hours clicking through applications, documenting bugs, and writing test cases by hand. The idea of machines performing these tasks seemed as far-fetched as flying cars.

The first glimpse of what would become AI Testing emerged through simple automation frameworks like Selenium, which launched in 2004. While not truly "intelligent," these tools introduced the concept of machines executing repetitive test scenarios. However, calling this AI Testing would be like calling a calculator artificial intelligence – it was automation, not intelligence.

The Game Changers: Key Turning Points That Shaped AI Testing

The real revolution began around 2010, when machine learning algorithms became sophisticated enough to handle pattern recognition tasks. Companies like Applitools pioneered visual AI testing, introducing machines that could "see" and identify visual bugs that human testers might miss. This wasn't just an incremental improvement; it was a fundamental shift in how we approached testing methodologies.

But the watershed moment came in 2016, when Google DeepMind's AlphaGo defeated world champion Go player Lee Sedol. Suddenly, the tech world realized that AI could handle complex, strategic thinking, which is exactly what comprehensive testing requires. This breakthrough opened the floodgates for AI test generators and intelligent testing platforms.

Mabl, founded in 2017, introduced self-healing tests that could adapt to application changes without human intervention. Test.AI (later acquired by Microsoft) developed computer vision-based testing that could interact with applications like humans do. These weren't just tools; they were harbingers of an AI Testing future that would fundamentally challenge traditional QA roles.
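The "self-healing" idea can be illustrated with one simple heuristic: when a test's primary locator stops matching, fall back to other attributes recorded for the same element and promote whichever one still matches uniquely. The sketch below is a minimal, hypothetical model (the `Element` and `Locator` types, the attribute names, and the `find_element` helper are illustrative assumptions, not mabl's or any vendor's API), written in plain Python so it runs without a browser.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified stand-in for a DOM element (hypothetical model, not a real API)."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""


@dataclass
class Locator:
    """What a test recorded about its target element when it was authored."""
    primary: tuple[str, str]                       # e.g. ("id", "buy-btn")
    fallbacks: list[tuple[str, str]] = field(default_factory=list)


def _matches(el: Element, key: str, value: str) -> bool:
    # "text" compares the visible label; any other key is treated as an attribute.
    return el.text == value if key == "text" else el.attrs.get(key) == value


def find_element(page: list[Element], loc: Locator) -> Element | None:
    """Try the primary locator, then each fallback, healing the locator on success."""
    key, value = loc.primary
    for el in page:
        if _matches(el, key, value):
            return el
    for key, value in list(loc.fallbacks):
        hits = [el for el in page if _matches(el, key, value)]
        if len(hits) == 1:  # heal only on an unambiguous match
            # Promote the attribute that still works; keep the old one as a fallback.
            loc.fallbacks.remove((key, value))
            loc.fallbacks.insert(0, loc.primary)
            loc.primary = (key, value)
            return hits[0]
    return None  # no unambiguous match: fail loudly instead of guessing


if __name__ == "__main__":
    # The button's id changed in a new release, but its data-test attribute did not.
    page = [Element("button", {"data-test": "checkout"}, "Buy now")]
    loc = Locator(("id", "buy-btn"), [("data-test", "checkout"), ("text", "Buy now")])
    find_element(page, loc)
    print(loc.primary)  # ('data-test', 'checkout')
```

The design choice worth noting is the refusal to heal on an ambiguous match: a tool that silently picks one of several candidates can keep a test green while it clicks the wrong element.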

The Modern AI Testing Landscape: Where We Stand Today

Today's AI Testing ecosystem is a far cry from those early automation scripts. Modern AI Testing platforms leverage natural language processing to convert user stories into executable test cases, use computer vision to validate user interfaces across different devices, and employ machine learning algorithms to predict where bugs are most likely to occur.

The core technologies driving contemporary AI Testing include:

Neural Networks for Pattern Recognition: These systems can identify anomalies in application behavior that traditional rule-based testing might miss. Think of it as having a testing assistant that never gets tired and can spot patterns across millions of data points.

Natural Language Processing (NLP): Modern AI Testing tools can now understand requirements written in plain English and automatically generate corresponding test scenarios. It's like having a translator that speaks both human and machine language fluently.

Computer Vision: AI Testing systems can now "see" applications the way humans do, identifying visual elements, layout issues, and user interface problems across different browsers and devices.

Predictive Analytics: By analyzing historical data, AI Testing platforms can predict which parts of an application are most likely to fail, allowing teams to focus their testing efforts where they're needed most.
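To make the predictive-analytics idea concrete, here is a minimal sketch that ranks modules by a weighted mix of past defect counts and recent code churn, each normalized to the largest value in the set. The `ModuleHistory` type, the module names, and the 0.6/0.4 weights are hypothetical; commercial platforms typically learn such weights from historical data rather than hard-coding them.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    name: str
    past_defects: int   # bugs traced to this module in earlier releases
    lines_changed: int  # code churn since the last release


def risk_scores(history: list[ModuleHistory],
                w_defects: float = 0.6,
                w_churn: float = 0.4) -> dict[str, float]:
    """Score each module in [0, 1] from normalized defect history and churn."""
    # "or 1" avoids division by zero when every count is zero.
    max_defects = max((m.past_defects for m in history), default=0) or 1
    max_churn = max((m.lines_changed for m in history), default=0) or 1
    return {
        m.name: w_defects * m.past_defects / max_defects
        + w_churn * m.lines_changed / max_churn
        for m in history
    }


def prioritize(history: list[ModuleHistory]) -> list[str]:
    """Return module names ordered from highest to lowest predicted risk."""
    scores = risk_scores(history)
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    history = [
        ModuleHistory("payments", past_defects=12, lines_changed=300),
        ModuleHistory("search", past_defects=2, lines_changed=900),
        ModuleHistory("profile", past_defects=1, lines_changed=50),
    ]
    print(prioritize(history))  # ['payments', 'search', 'profile']
```

Even this toy version shows the trade-off: the ranking is only as good as the history it is fed, so a module with little recorded history will look safe whether or not it is.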

What Are the Real Advantages and Limitations of AI Testing?

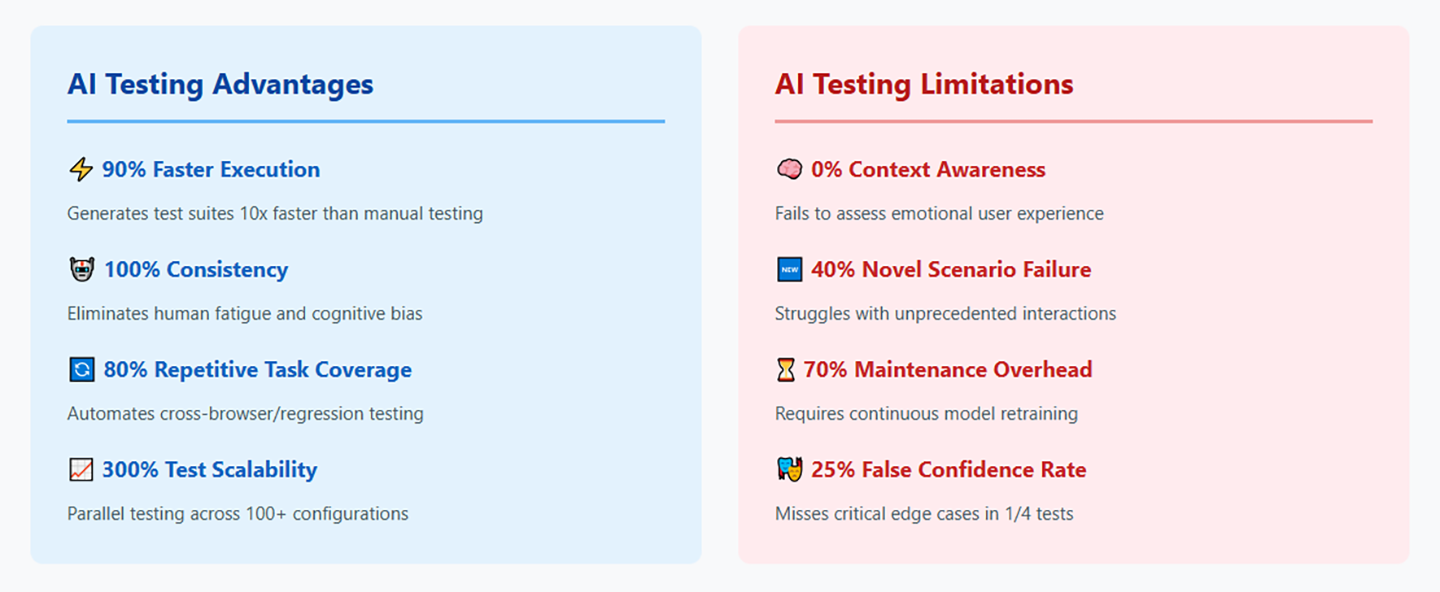

The Undeniable Strengths: Where AI Testing Excels

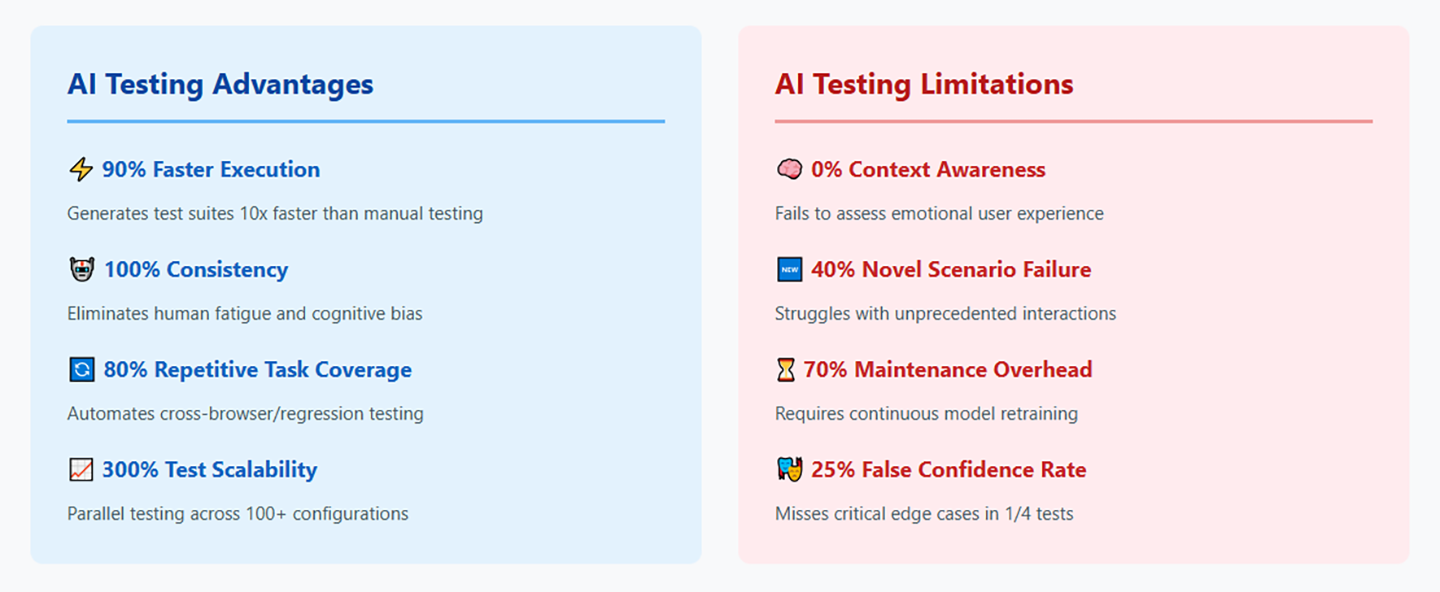

Let's be honest about where AI Testing truly shines. The speed advantage is undeniable – what takes human testers days can be accomplished in hours or even minutes. I've seen AI test generators create comprehensive test suites for complex applications in a fraction of the time it would take experienced QA engineers.

The consistency factor is equally impressive. Human testers, no matter how skilled, can have off days, miss obvious bugs due to fatigue, or unconsciously skip certain scenarios. AI Testing systems don't have bad days, don't get distracted by personal problems, and don't suffer from the fatigue-driven lapses that affect human judgment.

Moreover, AI Testing excels at handling repetitive tasks that frankly bore human testers to tears. Cross-browser compatibility testing, regression testing, and data validation across multiple environments – these are perfect use cases for AI Testing because they require patience and precision rather than creativity and intuition.

The scalability aspect is perhaps most compelling. A single AI Testing platform can simultaneously test applications across hundreds of different configurations, something that would require an army of human testers and an astronomical budget.

The Uncomfortable Truth: Where AI Testing Falls Short

Here's where things get controversial. Despite all the marketing hype, AI Testing has significant limitations that industry evangelists conveniently gloss over. The most glaring issue is context understanding. AI Testing systems are excellent at following patterns but terrible at understanding the "why" behind user behavior.

Consider this scenario: an AI Testing system might perfectly validate that a checkout process works technically, but it can't understand whether the user experience is frustrating, confusing, or emotionally satisfying. It can't tell you if the color scheme makes users feel anxious or if the navigation flow feels intuitive to first-time users.

Another critical limitation is AI Testing systems' inability to handle truly novel scenarios. AI Testing tools are trained on historical data and known patterns. When faced with completely new features or unprecedented user interactions, they often fail spectacularly. They're like students who excel at standardized tests but struggle with open-ended creative problems.

The maintenance overhead is another dirty secret of AI Testing. These systems require constant training, monitoring, and adjustment. When applications change significantly, AI Testing models often need to be retrained from scratch – a process that can be more time-consuming than writing traditional test cases.

Perhaps most concerning is the false confidence problem. AI Testing systems can generate thousands of test cases that look impressive but may miss critical edge cases that human testers would intuitively explore. They create an illusion of comprehensive coverage while potentially leaving significant gaps in actual test quality.
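The gap between test count and test quality is easy to demonstrate. This sketch assumes a hypothetical age field whose valid range is 18 to 120 and groups inputs into equivalence classes plus boundary values; `classify_age`, the class names, and both input sets are invented for illustration.

```python
def classify_age(age: int) -> str:
    """Map an input to the equivalence class or boundary value it exercises.

    Assumes a hypothetical rule: ages 18..120 inclusive are valid.
    """
    if age in (17, 18, 120, 121):
        return f"boundary:{age}"
    if age < 0:
        return "negative"
    if age < 18:
        return "below_min"
    if age <= 120:
        return "valid"
    return "above_max"


ALL_CLASSES = {"negative", "below_min", "valid", "above_max",
               "boundary:17", "boundary:18", "boundary:120", "boundary:121"}


def partition_coverage(inputs: list[int]) -> tuple[float, set[str]]:
    """Return the fraction of classes exercised and the classes never hit."""
    hit = {classify_age(a) for a in inputs}
    missed = ALL_CLASSES - hit
    return 1 - len(missed) / len(ALL_CLASSES), missed


if __name__ == "__main__":
    generated = [25 + i % 60 for i in range(10_000)]  # 10,000 "typical" ages
    handwritten = [-1, 5, 17, 18, 40, 120, 121, 200]  # 8 deliberate choices
    print(partition_coverage(generated)[0])    # 0.125
    print(partition_coverage(handwritten)[0])  # 1.0
```

Ten thousand inputs that all land in the "valid" class cover one of eight classes, while eight deliberately chosen inputs cover all of them. A dashboard that reports only the number of tests would rank these two suites the wrong way around.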

How Is AI Testing Reshaping Industries?

The Positive Disruption: Industries Embracing AI Testing

The financial services sector has been among the early adopters of AI Testing, and for good reason. Banks and fintech companies deal with complex regulations, multiple integration points, and zero tolerance for bugs that could affect customer money. AI Testing has enabled these organizations to achieve compliance testing at scale while reducing the risk of human error in critical financial calculations.

Healthcare technology companies have similarly embraced AI Testing for validating medical devices and health management systems. The ability to run AI Testing systems against vast datasets of patient information (properly anonymized, of course) has accelerated the development of life-saving medical technologies.

E-commerce platforms have leveraged AI Testing to handle the complexity of modern online shopping experiences. With millions of product combinations, payment methods, and user journeys, human testing alone would be insufficient to ensure quality across all scenarios.

The Darker Side: Industries Under Threat

But let's talk about the elephant in the room – job displacement. Traditional QA roles are being systematically eliminated as AI Testing becomes more sophisticated. Junior testers, whose primary responsibilities included executing repetitive test cases and documenting bugs, are finding their skills increasingly obsolete.

The impact isn't limited to entry-level positions. Mid-level QA engineers who specialized in test case creation and execution are also facing pressure as AI test generators become more capable. Companies are realizing they can achieve similar coverage with fewer human resources, leading to widespread layoffs in QA departments.

Small testing consultancies are particularly vulnerable. Why hire a team of human testers when an AI Testing platform can deliver results faster and cheaper? The economics are brutal and unforgiving.

Educational institutions are struggling to keep pace. Traditional QA curricula are becoming obsolete faster than they can be updated, leaving graduates unprepared for an AI Testing-dominated job market.

What Are the Ethical Implications We're Ignoring?

The Accountability Crisis: Who's Responsible When AI Testing Fails?

Here's a scenario that keeps me up at night: an AI Testing system validates a medical device's software, giving it a clean bill of health. The device later malfunctions, causing patient harm. Who's liable? The AI Testing vendor? The healthcare company? The engineers who configured the system? The regulatory bodies that approved AI Testing for medical applications?

This accountability crisis extends beyond healthcare. When AI Testing systems miss critical security vulnerabilities that lead to data breaches, the legal and ethical implications become murky. Traditional testing created clear chains of responsibility – human testers could be held accountable for their decisions and oversights. AI Testing creates a diffusion of responsibility that could have serious legal consequences.

The Black Box Problem: Understanding AI Testing Decisions

Most AI Testing systems operate as "black boxes," making decisions through complex neural networks that even their creators don't fully understand. When an AI Testing system determines that a particular component is "low risk" and recommends reduced testing, can we trust that decision without understanding the underlying logic?
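Not every scoring approach has to be a black box. One mitigation is to favor models whose output decomposes into per-factor contributions, so a "low risk" label arrives with its reasons attached. The sketch below uses a simple linear score, which is an assumption (many AI Testing products use more opaque models); the factor names, weights, and 0.5 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    component: str
    score: float
    action: str
    reasons: list[str]


# Hypothetical factor weights; in practice these would be fitted and then reviewed.
WEIGHTS = {"recent_defects": 0.5, "code_churn": 0.3, "user_traffic": 0.2}


def recommend(component: str, factors: dict[str, float],
              threshold: float = 0.5) -> Recommendation:
    """Score a component from normalized factors (0..1) and explain the result."""
    contributions = {k: WEIGHTS[k] * factors.get(k, 0.0) for k in WEIGHTS}
    score = sum(contributions.values())
    action = "full testing" if score >= threshold else "reduced testing"
    # List factors by how much each one moved the score, largest first.
    reasons = [f"{k} contributed {v:.2f}"
               for k, v in sorted(contributions.items(),
                                  key=lambda kv: kv[1], reverse=True)]
    return Recommendation(component, round(score, 4), action, reasons)


if __name__ == "__main__":
    rec = recommend("checkout",
                    {"recent_defects": 0.2, "code_churn": 0.1, "user_traffic": 0.9})
    print(rec.action)   # reduced testing
    print(rec.reasons)  # ['user_traffic contributed 0.18', 'recent_defects contributed 0.10', 'code_churn contributed 0.03']
```

In the example, the checkout flow is labeled for reduced testing even though user traffic is its largest contributor. Surfacing that breakdown is what lets a human reviewer question the call instead of taking "low risk" on faith.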

This opacity becomes particularly problematic when AI Testing systems exhibit bias. If an AI test generator consistently under-tests features primarily used by certain demographic groups, it could perpetuate or amplify existing inequalities in software quality.
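Checking for this kind of bias can start with a simple coverage audit: count the executed tests that touch features associated with each user group and flag groups that fall well below the best-covered one. The feature-to-group mapping, the group labels, and the 0.5 ratio threshold below are hypothetical, and a real audit would also normalize by how many features and users each group has.

```python
from collections import Counter


def coverage_by_group(test_features: list[str],
                      feature_groups: dict[str, str]) -> Counter:
    """Count executed tests per user group, via the feature each test exercises."""
    return Counter(feature_groups[f] for f in test_features if f in feature_groups)


def flag_undertested(counts: Counter, groups: list[str],
                     min_ratio: float = 0.5) -> list[str]:
    """Return groups whose test count is below min_ratio of the best-covered group."""
    best = max((counts[g] for g in groups), default=0)
    if best == 0:
        return list(groups)  # nothing is tested at all
    return [g for g in groups if counts[g] / best < min_ratio]


if __name__ == "__main__":
    feature_groups = {
        "checkout": "all users",
        "search": "all users",
        "screen_reader_nav": "assistive-tech users",
        "sms_login": "feature-phone users",
    }
    tests = (["checkout"] * 40 + ["search"] * 30
             + ["screen_reader_nav"] * 3 + ["sms_login"] * 40)
    groups = ["all users", "assistive-tech users", "feature-phone users"]
    counts = coverage_by_group(tests, feature_groups)
    print(flag_undertested(counts, groups))  # ['assistive-tech users']
```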

The Data Privacy Minefield

AI Testing systems require vast amounts of data to function effectively. This often includes sensitive user information, proprietary business logic, and confidential system architectures. The concentration of this data in AI Testing platforms creates attractive targets for cybercriminals and raises serious questions about data sovereignty and privacy protection.

Moreover, many AI Testing platforms are cloud-based services operated by third-party vendors. This means sensitive testing data is often stored and processed outside the organization's direct control, creating additional security and compliance risks.
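One way to limit that exposure is to minimize data in code before it is sent to an external platform. This sketch keeps only an allow-list of fields and replaces direct identifiers with keyed hashes (HMAC-SHA256 from Python's standard library), so records stay linkable across test runs without exposing raw values. The field names are hypothetical, and the hard-coded key in the usage example is for demonstration only; a real deployment would load it from a secrets manager.

```python
import hashlib
import hmac

# Fields the external testing platform actually needs (hypothetical allow-list).
ALLOWED_FIELDS = {"account_type", "country", "cart_total"}
# Identifiers needed for linking records, which must not leave in raw form.
PSEUDONYMIZED_FIELDS = {"user_id", "email"}


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed hash, truncated to 16 hex characters (64 bits)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def minimize_record(record: dict, key: bytes) -> dict:
    """Drop every field not explicitly allowed; tokenize linkable identifiers."""
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    for k in PSEUDONYMIZED_FIELDS.intersection(record):
        out[k] = pseudonymize(str(record[k]), key)
    return out


if __name__ == "__main__":
    key = b"demo-only-key"  # illustration only; never hard-code real keys
    record = {"user_id": "u-123", "email": "a@example.com",
              "full_name": "Ada", "country": "DE"}
    # full_name is dropped; user_id and email become 16-character tokens.
    print(minimize_record(record, key))
```

Using a keyed hash rather than a plain hash matters here: without the key, an attacker who obtains the tokens cannot simply hash a list of known email addresses to reverse them.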

How Should We Navigate This AI Testing Future?

Rethinking Our Approach: A Hybrid Strategy

The solution isn't to abandon AI Testing entirely or embrace it unconditionally. Instead, we need a more nuanced approach that leverages the strengths of both human intelligence and artificial intelligence.

For Displaced QA Professionals: The key is evolution, not resistance. Focus on developing skills that complement AI Testing rather than compete with it. This includes user experience testing, accessibility validation, security testing, and test strategy development – areas where human judgment remains irreplaceable.

Domain Expertise Development: Become the person who understands both the technical capabilities of AI Testing and the business context in which it operates. Companies will always need professionals who can bridge the gap between AI Testing capabilities and real-world requirements.

AI Testing Oversight: Develop expertise in monitoring, validating, and interpreting AI Testing results. Someone needs to ensure these systems are working correctly and making appropriate decisions.
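Part of that oversight can be written down as an explicit routing rule instead of being left to habit. The sketch below sends an AI-generated decision to a human reviewer whenever the component is tagged critical, the model's reported confidence is below a floor, or the decision would reduce testing. The `Decision` fields, the action names, and the 0.9 confidence floor are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    component: str
    action: str          # e.g. "reduce testing", "skip test", "add tests"
    confidence: float    # model-reported confidence in [0, 1]
    critical: bool       # tagged as safety-, money-, or security-critical


# Actions that shrink coverage always get a second pair of eyes.
REDUCING_ACTIONS = {"reduce testing", "skip test"}


def needs_human_review(d: Decision, confidence_floor: float = 0.9) -> bool:
    """Return True when the decision must not be applied automatically."""
    return (
        d.critical
        or d.confidence < confidence_floor
        or d.action in REDUCING_ACTIONS
    )


def triage(decisions: list[Decision]) -> tuple[list[Decision], list[Decision]]:
    """Split decisions into (auto_apply, review_queue), preserving order."""
    auto, review = [], []
    for d in decisions:
        (review if needs_human_review(d) else auto).append(d)
    return auto, review


if __name__ == "__main__":
    auto, review = triage([
        Decision("search", "add tests", 0.95, critical=False),
        Decision("dosage-calc", "add tests", 0.99, critical=True),
        Decision("profile", "reduce testing", 0.97, critical=False),
        Decision("banner", "add tests", 0.60, critical=False),
    ])
    print([d.component for d in review])  # ['dosage-calc', 'profile', 'banner']
```

The rule is deliberately asymmetric: adding tests is cheap to apply automatically, while removing them is exactly the kind of "low risk" call that should not happen without a person signing off.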

Addressing the Ethical Concerns: A Framework for Responsible AI Testing

Transparency Requirements: Advocate for AI Testing systems that can explain their decisions in human-understandable terms. If an AI Testing platform recommends a particular testing approach, it should be able to articulate the reasoning behind that recommendation.

Bias Detection and Mitigation: Implement regular audits of AI Testing systems to identify and correct biases. This includes ensuring that test coverage is equitable across different user groups and use cases.

Data Governance: Establish clear policies for how testing data is collected, stored, and used by AI Testing systems. This includes data minimization principles, explicit consent for data usage, and regular security assessments.

Human Oversight: Maintain human review processes for critical AI Testing decisions. While AI Testing can handle routine tasks autonomously, important decisions about risk assessment and test strategy should always involve human judgment.

Liability Frameworks: Work with legal and regulatory bodies to establish clear accountability frameworks for AI Testing failures. This includes insurance requirements, audit trails, and incident response procedures.
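Audit trails are most useful when they are tamper-evident. A common technique is a hash chain: each entry stores the SHA-256 hash of the previous entry, so altering, removing, or reordering an earlier entry breaks verification. This is a minimal in-memory sketch using only Python's standard library; the entry fields and actor names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(entry: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes identically.
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: list[dict], actor: str, event: str) -> dict:
    """Append an entry linked to the hash of the previous one."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"seq": len(log), "actor": actor, "event": event, "prev": prev}
    entry["hash"] = _entry_hash(entry)
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash and link; tampering with earlier entries is detected."""
    prev = GENESIS
    for i, entry in enumerate(log):
        body = {k: v for k, v in entry.items() if k != "hash"}
        if (entry["seq"] != i or entry["prev"] != prev
                or _entry_hash(body) != entry["hash"]):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    log: list[dict] = []
    append_entry(log, "ai-platform", "marked payments module low risk")
    append_entry(log, "qa-lead", "overrode: full testing for payments")
    print(verify(log))  # True
    log[0]["event"] = "marked payments module high risk"
    print(verify(log))  # False
```

A hash chain alone cannot detect an entry removed from the end of the log. Recording the latest hash somewhere the testing platform cannot write to closes that gap.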

Building a Sustainable Future: Collaboration Over Competition

Rather than viewing AI Testing as a threat to human testers, we should position it as a powerful tool that amplifies human capabilities. The most successful organizations will be those that find ways to combine AI Testing efficiency with human creativity and judgment.

This means investing in training programs that help existing QA professionals transition to AI Testing-augmented roles. It means developing new educational curricula that prepare future testers for an AI Testing world. And it means creating industry standards that ensure AI Testing systems are developed and deployed responsibly.

FAQs

Q: Will AI Testing completely replace human testers?

A: While AI Testing will automate many routine testing tasks, human testers will remain essential for strategic thinking, user experience validation, and complex problem-solving. The role will evolve rather than disappear entirely.

Q: How accurate are current AI Testing systems?

A: AI Testing systems excel at pattern recognition and repetitive tasks but struggle with novel scenarios and contextual understanding. Their accuracy depends heavily on the quality of training data and the specific use case.

Q: What are the biggest risks of implementing AI Testing?

A: Key risks include over-reliance on automated systems, false confidence in test coverage, potential bias in testing approaches, and the complexity of maintaining AI Testing models as applications evolve.

Q: How should organizations prepare for AI Testing adoption?

A: Organizations should start with pilot projects in low-risk areas, invest in training for existing staff, establish clear governance frameworks, and maintain human oversight of critical testing decisions.

Q: What skills should testers develop to remain relevant in an AI Testing world?

A: Focus on test strategy development, user experience testing, security testing, AI Testing system oversight, and domain expertise that requires human judgment and creativity.

Conclusion: Navigating the AI Testing Revolution Responsibly

The AI Testing revolution is no longer on the horizon—it's already here. But amid the excitement, it's crucial that we move beyond the hype and take a clear-eyed look at the risks and limitations.

AI Testing holds enormous promise: it can boost software quality, cut costs, and dramatically speed up development cycles. Yet it also brings serious challenges—job displacement, ethical dilemmas, and the looming threat of over-automation.

Moving forward, we need more than black-and-white thinking. It’s not about choosing between humans or machines, but about finding the right balance. We must harness the strengths of AI Testing while staying fully aware of its shortcomings. That means continuing to invest in people, even as we deploy intelligent systems. It also means building ethical frameworks to ensure AI enhances—not replaces—human judgment.

The future of software testing won’t be purely human or purely artificial. It will be a hybrid—one that combines the best of both worlds. Those who learn to navigate this balance will thrive. Those who don’t risk being left behind by a transformation that’s already in motion.

The real question isn’t whether AI Testing will reshape the industry—it already has. The question is whether we’ll shape that transformation thoughtfully and responsibly, or let it unfold without direction. The clock is ticking—and the decisions we make now will define the future of software testing.
