Looking ahead to 2025, speech synthesis has emerged as one of the most transformative yet controversial technologies of our time. From Siri’s familiar voice guiding us through everyday tasks to deepfake audios that create political scandals, speech synthesis is reshaping the way we communicate, work, and even perceive reality. But are we really ready for the impact of machines that can speak with increasingly human-like accuracy?

The rapid development of speech synthesis technology has sparked heated debate across industries. Supporters cheer the democratization of voice content creation and improved accessibility, while critics warn that it could replace jobs, raise ethical dilemmas, and undermine real human communication. It’s critical that we make smart decisions about our digital future.

How Did Speech Synthesis Evolve From Robotic Voices to Human-Like Communication?

The Mechanical Beginnings

Speech Synthesis didn't begin in Silicon Valley boardrooms—it started with mechanical contraptions that would make today's smartphones seem like magic. The journey began in 1779 with Christian Kratzenstein's resonance tubes, followed by Wolfgang von Kempelen's "speaking machine" in 1791. These early attempts at AI Speech Synthesis were purely mechanical, producing sounds that barely resembled human speech but laid the groundwork for centuries of innovation.

The first electronic breakthrough came in 1939 with Bell Labs' VODER (Voice Operating Demonstrator), which required skilled operators to manually control speech parameters. Think of it as the world's first speech synthesizer—though it sounded more like a robot gargling water than actual human conversation.

The Digital Revolution Era

The 1960s marked a pivotal transition when Speech Synthesis AI began incorporating computer technology. MIT's work on formant synthesis and the development of linear predictive coding transformed how machines could generate speech. The 1970s brought us DECTalk, which became famous as Stephen Hawking's voice, demonstrating that Speech Synthesis Tools could serve profound humanitarian purposes.

However, the real game-changer came in the 1990s with concatenative synthesis. Companies like AT&T Bell Labs developed systems that recorded human speech segments and reassembled them to create new utterances. This approach significantly improved naturalness, though the resulting speech still had that distinctive "synthetic" quality we associate with early GPS systems and automated phone services.

The Neural Network Revolution

The transformation of Speech Synthesis accelerated dramatically with deep learning. Google's WaveNet, introduced in 2016, represented a quantum leap forward. By modeling audio waveforms directly using neural networks, WaveNet reduced the gap between synthetic and human speech quality by over 50%. Suddenly, AI Speech Synthesis began sounding genuinely human.

This breakthrough spawned a new generation of Speech Synthesis Tools: Amazon's Alexa became a household name, Apple's Siri evolved from novelty to necessity, and Google Assistant began handling complex conversational tasks. More recently, technologies like ElevenLabs, Murf, and Speechify have democratized high-quality voice synthesis, allowing anyone to create professional-sounding audio content.

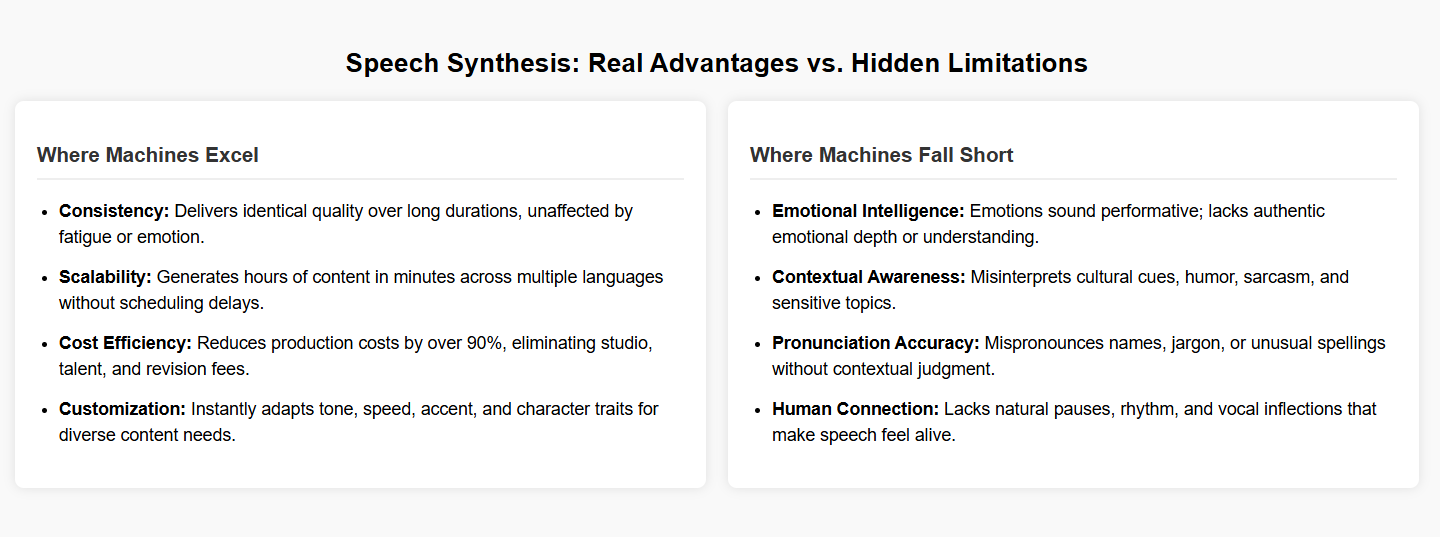

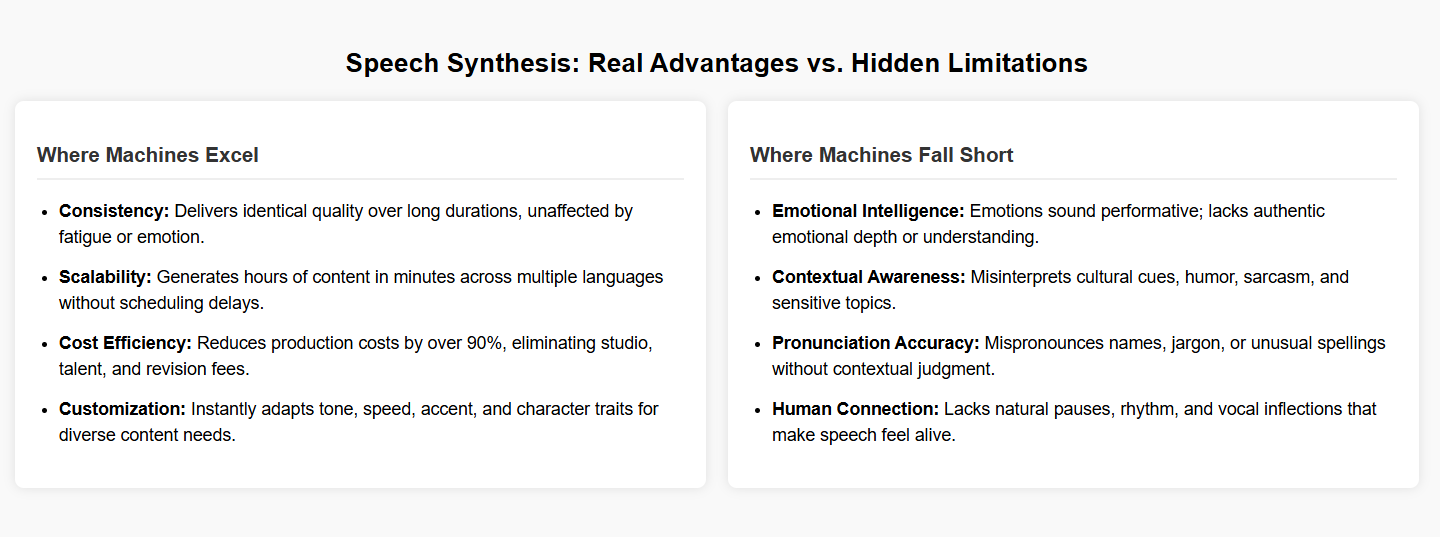

What Are the Real Advantages and Hidden Limitations of Speech Synthesis?

Where Machines Excel Beyond Human Capability

Speech Synthesis demonstrates clear superiority over human voices in several critical areas. First, consistency represents perhaps the most significant advantage. While human speakers experience fatigue, mood changes, and vocal strain, AI Speech Synthesis maintains identical quality across unlimited content duration. A human narrator might struggle to maintain energy during a 10-hour audiobook session, but Speech Synthesis Tools deliver the same vocal quality from the first word to the last.

Scalability offers another compelling advantage. Traditional voice work requires scheduling human talent, managing recording sessions, and handling revisions—processes that can take weeks. Speech Synthesis AI can generate hours of content in minutes, supporting multiple languages simultaneously. This capability has revolutionized content localization, allowing companies to expand globally without the traditional barriers of finding native speakers for every target market.

Cost efficiency transforms the economics of voice content entirely. Professional voice actors charge hundreds of dollars per finished hour, plus studio costs, editing time, and revision fees. Speech Synthesis reduces these expenses by over 90% while eliminating scheduling constraints and geographic limitations.

Customization represents another area where AI Speech Synthesis surpasses human limitations. Modern systems can adjust speaking pace, emotional tone, accent, and even age characteristics on demand. A single synthetic voice can portray multiple characters or adapt to different content types—something impossible for human speakers without extensive training and natural vocal range limitations.

The Uncanny Valley of Synthetic Speech

Despite remarkable advances, Speech Synthesis still struggles with nuanced human communication elements that we often take for granted. Emotional intelligence remains a significant limitation. While Speech Synthesis Tools can simulate happiness, sadness, or excitement, they lack genuine emotional understanding. The result often feels performative rather than authentic—like an actor reading lines without understanding the underlying context.

Contextual understanding presents another challenge. Human speakers naturally adjust their delivery based on subtle contextual cues, cultural references, and implied meanings. AI Speech Synthesis tends to treat all content equally, potentially delivering sensitive topics with inappropriate emotional coloring or failing to recognize when humor, sarcasm, or reverence is required.

Pronunciation accuracy, particularly with proper nouns, technical terminology, and non-standard spellings, continues plaguing even advanced systems. While humans can make educated guesses about unfamiliar words using contextual knowledge and linguistic intuition, Speech Synthesis AI often produces jarring mispronunciations that immediately break immersion.

The "breath of life" quality that makes human speech engaging remains elusive. Human speakers naturally vary their pacing, include subtle pauses for thought, and add micro-expressions through vocal inflection that create connection with listeners. Current Speech Synthesis technology, despite its sophistication, still lacks this organic quality that makes human communication compelling.

How Is Speech Synthesis Reshaping Industries and Threatening Traditional Jobs?

The Positive Transformation Wave

Speech Synthesis is creating unprecedented opportunities across multiple industries, fundamentally changing how businesses approach audio content creation. The accessibility sector has experienced perhaps the most profound positive impact. AI Speech Synthesis has democratized content consumption for visually impaired individuals, providing high-quality text-to-speech services that were previously expensive or unavailable. Educational institutions now offer course materials in audio format at minimal cost, expanding learning opportunities for students with different learning preferences and disabilities.

The entertainment industry has embraced Speech Synthesis Tools for rapid prototyping and multilingual content distribution. Streaming platforms can now offer dubbed content in dozens of languages simultaneously, dramatically expanding their global reach. Podcast creators use Speech Synthesis AI for intro/outro segments, maintaining brand consistency while reducing production costs.

E-learning platforms have revolutionized their content delivery through Speech Synthesis. Companies like Coursera and Udemy can convert written materials into engaging audio content, supporting different learning styles and enabling hands-free learning experiences. This transformation has made education more accessible to busy professionals who can now consume learning content during commutes or workout sessions.

Customer service operations have integrated Speech Synthesis to provide 24/7 multilingual support without the overhead of maintaining large call centers. Advanced systems can handle routine inquiries while seamlessly transferring complex issues to human agents, improving efficiency while reducing operational costs.

The Disruption of Traditional Voice Work

However, Speech Synthesis poses significant threats to traditional voice-dependent industries. The voice acting profession faces an existential crisis as AI Speech Synthesis technology improves. Entry-level voice work—including audiobook narration, commercial voice-overs, and instructional content—increasingly moves toward synthetic alternatives.

Radio broadcasting confronts similar challenges. Local radio stations experiment with AI-generated content and synthetic hosts, particularly for overnight shifts and automated programming. While major market personalities remain secure, smaller markets and specialized programming increasingly rely on Speech Synthesis Tools to reduce staffing costs.

The dubbing and localization industry experiences mixed impacts. While Speech Synthesis AI enables rapid content translation, it threatens traditional dubbing artists who specialized in voice matching and cultural adaptation. However, demand for human oversight and quality control has actually increased, as companies recognize that fully automated solutions often lack cultural nuance and emotional appropriateness.

Practical Solutions for Industry Adaptation

Industries facing Speech Synthesis disruption should focus on hybrid models that combine AI efficiency with human expertise. Voice actors can pivot toward becoming "voice directors" who train and refine AI Speech Synthesis models, ensuring quality and emotional appropriateness. This role transformation leverages their industry knowledge while embracing technological advancement.

Broadcasting professionals can specialize in content creation, interviewing, and live interaction—areas where human spontaneity and genuine connection remain irreplaceable. Speech Synthesis Tools can handle routine announcements and scripted segments while humans focus on authentic audience engagement.

Educational institutions should incorporate Speech Synthesis training into communications and media programs, preparing the next generation for a hybrid industry landscape where technical proficiency with AI Speech Synthesis becomes as important as traditional vocal skills.

What Ethical Dilemmas Does Speech Synthesis Present?

The Deepfake Audio Crisis

Speech Synthesis technology has unleashed a Pandora's box of ethical concerns that society is struggling to address. Voice cloning capabilities now enable the creation of convincing audio recordings of virtually anyone speaking words they never said. This presents unprecedented challenges for information integrity and personal privacy.

Political manipulation represents perhaps the most concerning application. AI Speech Synthesis can create fake campaign speeches, fabricated statements by public officials, or convincing recordings of private conversations.

Celebrity and public figure impersonation poses another significant threat. Speech Synthesis Tools can clone voices from publicly available audio, enabling unauthorized use of someone's vocal identity for commercial purposes, fraudulent schemes, or reputation damage. Legal frameworks struggle to keep pace with technological capabilities, leaving victims with limited recourse.

Consent and Ownership Complications

The question of voice ownership becomes increasingly complex as Speech Synthesis AI advances. When a company trains its models on voice actors' recordings, who owns the resulting synthetic voice? Current contracts often lack clarity about AI training rights, creating potential legal disputes as synthetic voices become more valuable commercial assets.

Deceased individuals' voices present particularly thorny ethical territory. Speech Synthesis technology can resurrect voices of departed family members, celebrities, or historical figures. While this might offer comfort or educational value, it raises questions about consent, dignity, and the rights of estates and survivors.

Children's voices require special protection considerations. AI Speech Synthesis models trained on minor's voices could potentially be misused for inappropriate content creation, necessitating stronger safeguards and parental consent frameworks.

Privacy and Security Vulnerabilities

Speech Synthesis technology creates new attack vectors for fraud and identity theft. Voice authentication systems—increasingly used by banks and secure services—become vulnerable when convincing synthetic voices can bypass security measures. Financial institutions report growing concerns about voice spoofing attacks using Speech Synthesis Tools.

Workplace surveillance and manipulation present additional privacy concerns. Employers might use Speech Synthesis AI to create fake recordings of employee conversations or manipulate audio evidence in disciplinary proceedings. The technology's accessibility makes such abuse feasible for small organizations without sophisticated technical resources.

Establishing Ethical Boundaries

Addressing these challenges requires comprehensive approaches combining technical safeguards, legal frameworks, and industry standards. Watermarking and detection technologies should be mandatory for commercial Speech Synthesis applications, enabling identification of synthetic content. Companies developing AI Speech Synthesis tools bear responsibility for implementing usage monitoring and preventing malicious applications.

Legal systems need updated frameworks addressing voice rights, synthetic content disclosure requirements, and penalties for malicious use. Just as we have copyright laws for written and visual content, voice rights require similar protection with clear guidelines for authorized use, fair compensation, and consent requirements.

Industry self-regulation through professional associations and ethical guidelines can establish best practices before regulatory bodies catch up. Speech Synthesis developers should implement built-in restrictions preventing unauthorized voice cloning and require explicit consent for voice model training.

How Can We Responsibly Harness Speech Synthesis Technology?

Creating Hybrid Solutions for Affected Industries

The key to responsible Speech Synthesis adoption lies in developing hybrid models that amplify human capabilities rather than simply replacing them. Voice professionals should embrace AI Speech Synthesis as a powerful tool that extends their reach and efficiency while maintaining the irreplaceable human elements that audiences value.

For voice actors facing industry disruption, the solution involves evolution rather than resistance. Professional voice talent can offer comprehensive services combining their expertise with Speech Synthesis Tools. This might include voice model creation and training, quality assurance for synthetic output, emotional direction for AI-generated content, and specialized human performance for high-value projects requiring authentic connection.

Broadcasting organizations can implement tiered approaches where Speech Synthesis AI handles routine content while human presenters focus on live interaction, breaking news, and community engagement. Local radio stations might use synthetic voices for overnight programming and weather updates while preserving human hosts for drive-time shows and community events.

Educational content creators can leverage Speech Synthesis for scalability while maintaining human oversight for quality and cultural appropriateness. This approach enables rapid content localization and accessibility improvements without sacrificing educational effectiveness.

Implementing Ethical Safeguards

Responsible Speech Synthesis deployment requires robust consent mechanisms and transparent usage policies. Organizations should establish clear protocols requiring explicit permission before training AI models on individual voices. This includes comprehensive disclosure about intended uses, duration of rights, and compensation frameworks.

Verification and detection systems should be integrated into Speech Synthesis Tools from the ground up. Rather than treating synthetic content identification as an afterthought, developers should implement watermarking, metadata tracking, and detection APIs that allow platforms to identify and label AI-generated audio automatically.

Professional certification programs for AI Speech Synthesis practitioners can establish competency standards and ethical guidelines. Similar to other professional fields, certified practitioners would commit to ethical usage standards, continuing education requirements, and accountability measures.

Building Technology with Human Values

The future of Speech Synthesis should prioritize augmentation over replacement. Instead of creating technology that eliminates human involvement, we should develop AI Speech Synthesis systems that enhance human creativity, efficiency, and accessibility while preserving the authentic connections that make communication meaningful.

Transparency represents a cornerstone of responsible implementation. All Speech Synthesis applications should clearly disclose when synthetic voices are being used, allowing audiences to make informed decisions about their consumption preferences. This transparency builds trust and enables healthy coexistence between human and synthetic content.

Collaborative development involving voice professionals, ethicists, legal experts, and community representatives can ensure that Speech Synthesis AI evolution considers diverse perspectives and societal impacts. Technology developed in isolation often creates unintended consequences that could be prevented through inclusive design processes.

Investment in complementary human skills should accompany Speech Synthesis adoption. Organizations implementing AI voice technology should simultaneously invest in training programs that help affected workers develop skills for hybrid roles, creative applications, and emerging opportunities that leverage human-AI collaboration.

FAQs

Q: Can Speech Synthesis completely replace human voice actors?

A: While Speech Synthesis technology has advanced dramatically, it cannot fully replace human voice actors in all contexts. AI Speech Synthesis excels at routine, high-volume content production but lacks the emotional intelligence, cultural understanding, and authentic connection that human performers provide. The future likely involves hybrid models where Speech Synthesis Tools handle scalable content while humans focus on creative, emotionally nuanced, and culturally sensitive work.

Q: How can I tell if audio content uses Speech Synthesis?

A: Detecting Speech Synthesis AI requires attention to several indicators: unnatural breathing patterns, consistent vocal quality without variation, awkward pronunciation of proper nouns, and lack of genuine emotional inflection. However, as technology improves, detection becomes increasingly difficult. Professional detection tools and mandatory disclosure practices represent more reliable identification methods than human perception alone.

Q: What legal protections exist for voice rights?

A: Current legal frameworks for voice rights remain limited and vary by jurisdiction. Some regions treat voice as intellectual property similar to likeness rights, while others provide minimal protection. The rapid advancement of Speech Synthesis technology has outpaced legal development, creating gaps in protection. Advocacy for comprehensive voice rights legislation and industry self-regulation represents the current path toward better protections.

Q: How accurate is modern Speech Synthesis compared to human speech?

A: Modern AI Speech Synthesis achieves remarkable accuracy in controlled conditions, often indistinguishable from human speech in short segments. However, challenges remain with contextual understanding, emotional appropriateness, and maintaining naturalness across extended content. Quality varies significantly between premium Speech Synthesis Tools and basic applications, with the best systems approaching human-level quality for scripted content.

Q: What industries benefit most from Speech Synthesis adoption?

A: Speech Synthesis provides the greatest benefits to industries requiring scalable audio content production: e-learning, accessibility services, customer support, content localization, and digital publishing. These sectors gain significant efficiency improvements and cost reductions while serving broader audiences. Industries requiring authentic human connection and spontaneous interaction see more limited benefits from current technology.

Conclusion

Speech Synthesis stands at the intersection of remarkable technological achievement and profound societal challenge. As we've explored throughout this analysis, the technology offers unprecedented opportunities for accessibility, efficiency, and global communication while simultaneously threatening traditional employment models and raising serious ethical concerns about authenticity and consent.

The evolution from mechanical speaking machines to neural network-powered AI Speech Synthesis represents one of the most rapid technological transformations in recent history. Today's Speech Synthesis Tools can create voice content that rivals human quality while offering scalability and customization impossible through traditional means. Yet this same capability enables deepfake audio creation and threatens the livelihoods of voice professionals worldwide.

Our path forward requires thoughtful navigation between innovation and responsibility. Rather than allowing Speech Synthesis AI to develop unchecked or rejecting it entirely, we must actively shape its evolution to serve human flourishing. This means implementing robust ethical frameworks, supporting affected workers through transition programs, and maintaining transparency about synthetic content usage.

The industries most successful in adopting Speech Synthesis will be those that view it as a tool for human augmentation rather than replacement. By combining AI efficiency with human creativity and emotional intelligence, we can harness the technology's benefits while preserving the authentic connections that make communication meaningful.

As we move forward, the responsibility lies with all stakeholders—developers, users, policymakers, and society at large—to ensure that Speech Synthesis technology serves humanity's best interests. The choices we make today about ethical implementation, legal frameworks, and industry standards will determine whether this powerful technology becomes a force for democratization and accessibility or a source of division and mistrust.

The conversation about Speech Synthesis is far from over. As the technology continues evolving at breakneck speed, our collective vigilance, creativity, and commitment to human values will determine whether we master this tool or become mastered by it.

Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts!

Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts! Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts!

Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts! Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts!

Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts! Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts!

Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts! Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts!

Submit Your AI Tool For FREE!Showcase Your Innovation To Thousands Of AI Enthusiasts!

No comments yet. Be the first to comment!