Artificial Intelligence (AI) in healthcare has transformed from a futuristic concept to a revolutionary force reshaping how we approach medicine, diagnosis, and patient care. As an industry that deals with human lives, healthcare has been cautiously but steadily embracing AI technologies to enhance efficiency, accuracy, and accessibility. In this blog, I'll explore the fascinating journey of AI in healthcare, from its humble beginnings to the sophisticated systems we see today.

The integration of AI in healthcare represents one of the most promising applications of artificial intelligence technology. From predictive analytics to robot-assisted surgeries, the healthcare sector is witnessing unprecedented advancements that were once confined to science fiction. But like any powerful tool, understanding its evolution, capabilities, limitations, and ethical considerations is crucial for responsible implementation.

How Has AI in Healthcare Evolved Over Time?

The journey of AI in healthcare hasn't happened overnight. It's been a fascinating progression that mirrors the overall development of artificial intelligence itself. Let's explore this evolution through the lens of significant products and technological milestones that have shaped the field.

The Early Days: Rule-Based Systems

In the 1970s and 1980s, the first generation of AI in healthcare emerged in the form of rule-based expert systems. One of the pioneering systems was MYCIN, developed at Stanford University in the early 1970s. MYCIN was designed to identify bacteria causing severe infections and recommend antibiotics, with a decision accuracy that sometimes exceeded that of infectious disease experts.

Around the same time, INTERNIST-1 (later evolved into QMR - Quick Medical Reference) was developed at the University of Pittsburgh to aid in diagnosing complex internal medicine cases. These early systems relied on "if-then" rules coded by human experts and could handle only narrow, specific medical domains.
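To make the "if-then" idea concrete, here is a minimal sketch of a rule-based system in Python. The rules, findings, and certainty values are invented for illustration and are not taken from MYCIN or INTERNIST-1. The `combine` function uses the formula commonly described for merging two positive certainty factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    conditions: frozenset  # findings that must all be present for the rule to fire
    conclusion: str        # what the rule suggests
    certainty: float       # expert-assigned confidence between 0 and 1


# Hypothetical rules, hand-written the way a domain expert would encode them.
RULES = [
    Rule(frozenset({"gram_negative", "rod_shaped"}), "organism_a", 0.6),
    Rule(frozenset({"gram_negative", "anaerobic"}), "organism_a", 0.5),
    Rule(frozenset({"gram_positive", "clusters"}), "organism_b", 0.7),
]


def combine(cf1, cf2):
    """Merge two positive certainty factors supporting the same conclusion."""
    return cf1 + cf2 * (1 - cf1)


def infer(findings):
    """Fire every rule whose conditions are all present in the findings."""
    conclusions = {}
    for rule in RULES:
        if rule.conditions <= findings:
            prior = conclusions.get(rule.conclusion, 0.0)
            conclusions[rule.conclusion] = combine(prior, rule.certainty)
    return conclusions


result = infer({"gram_negative", "rod_shaped", "anaerobic"})
print({name: round(cf, 2) for name, cf in result.items()})  # {'organism_a': 0.8}
```

The sketch also shows why these systems stayed narrow: every rule has to be written and maintained by hand, and nothing outside the rule base can be recognized.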

The AI Winter and Revival

The late 1980s and 1990s saw what many call an "AI winter," a period when funding and progress slowed. However, by the early 2000s, with increased computational power and the emergence of machine learning techniques, AI in healthcare began to revive.

In 2012, IBM's Watson for Oncology was introduced in collaboration with Memorial Sloan Kettering Cancer Center, aiming to help oncologists make treatment decisions based on a patient's medical information and relevant literature. Though results were mixed, it marked a turning point in applying AI to complex medical decision-making.

The Deep Learning Revolution

The real breakthrough came from the deep learning revolution. The field can be pictured as a set of nested circles: artificial intelligence contains machine learning, which in turn contains neural networks and deep learning, one of the most complex applications of artificial intelligence.

Breakthroughs in deep learning quickly carried over to medical imaging. In 2016, Google researchers introduced a neural network that can identify signs of diabetic retinopathy from retinal images with an accuracy comparable to that of ophthalmologists. This is significant because diabetic retinopathy is one of the fastest-growing causes of blindness, and early detection is critical.

2018 marked another milestone when the FDA authorized the first AI-based medical device that didn't require a clinician to interpret the results: IDx-DR, which autonomously detects diabetic retinopathy. This opened the floodgates for AI tools in diagnostic imaging.

Today's Core Technologies in AI Healthcare Applications

Today, AI in healthcare leverages several core technologies:

1. Computer Vision: Used in radiology to detect abnormalities in X-rays, MRIs, and CT scans. For example, Aidoc's FDA-cleared algorithms can identify critical conditions like intracranial hemorrhage in CT scans within seconds.

2. Natural Language Processing (NLP): Powers systems that can analyze clinical notes, medical literature, and even patient-doctor conversations. Nuance's Dragon Medical One uses speech recognition and NLP to transcribe clinicians' dictation directly into electronic health records, reportedly reducing documentation time by up to 45%.

3. Predictive Analytics: Algorithms that can forecast patient deterioration, hospital readmissions, or disease outbreaks. Epic's Deterioration Index, implemented in hundreds of hospitals, can predict patient decline up to 6 hours before critical events occur.

4. Reinforcement Learning: Used to optimize treatment plans. For instance, Tempus uses this approach to personalize cancer treatments based on a patient's genetic profile and history.

5. Federated Learning: Allows AI models to be trained across multiple institutions without sharing sensitive patient data. Google Health and Mayo Clinic have partnered on initiatives using this technique to improve AI model robustness while preserving privacy.

These technologies enable healthcare AI tools to address increasingly complex problems, from precision medicine and drug discovery to hospital workflow optimization and remote patient monitoring. The AI trends in healthcare suggest we're just at the beginning of this transformation.
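Of the five technologies above, federated learning is the easiest to misread, so here is a minimal sketch of the idea in plain Python. It assumes a federated-averaging style scheme with a toy one-feature linear model. The hospital names and data points are hypothetical, and this is not a description of how any named partnership implements it.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Train y = w*x + b on one site's data; the raw data never leaves this function."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates):
    """Average site models, weighting each by its number of samples."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


# Hypothetical sites whose private data both follow y = 2x + 1.
site_data = {
    "hospital_a": [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    "hospital_b": [(0.5, 2.0), (1.5, 4.0)],
}

global_weights = (0.0, 0.0)
for _ in range(50):  # communication rounds
    # Each site sends back only its updated weights and a sample count.
    updates = [
        (local_update(global_weights, data), len(data))
        for data in site_data.values()
    ]
    global_weights = federated_average(updates)

print(tuple(round(value, 3) for value in global_weights))
```

The point of the design is in the loop: the coordinating server only ever sees model weights and sample counts, while patient-level records stay inside each institution.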

What Are the Advantages and Limitations of AI in Healthcare?

The implementation of AI in healthcare brings both remarkable benefits and notable challenges. Understanding this balance is essential for healthcare providers and patients alike.

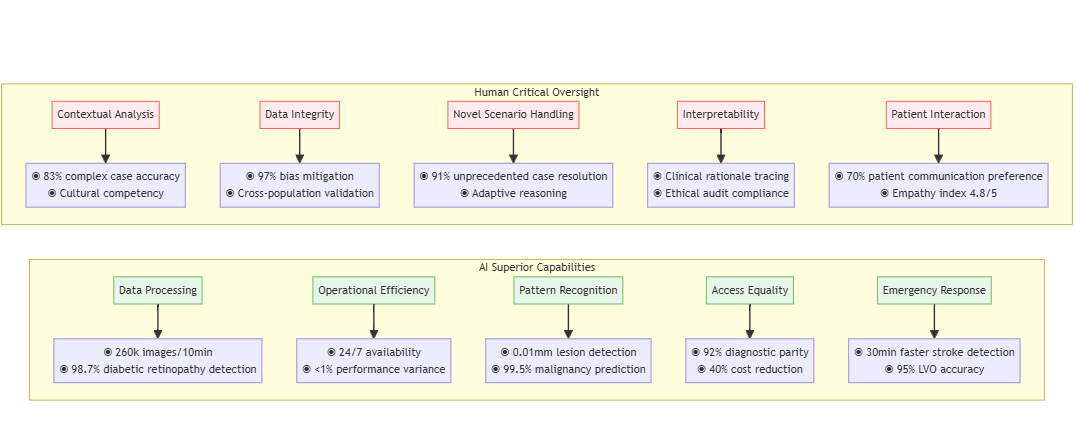

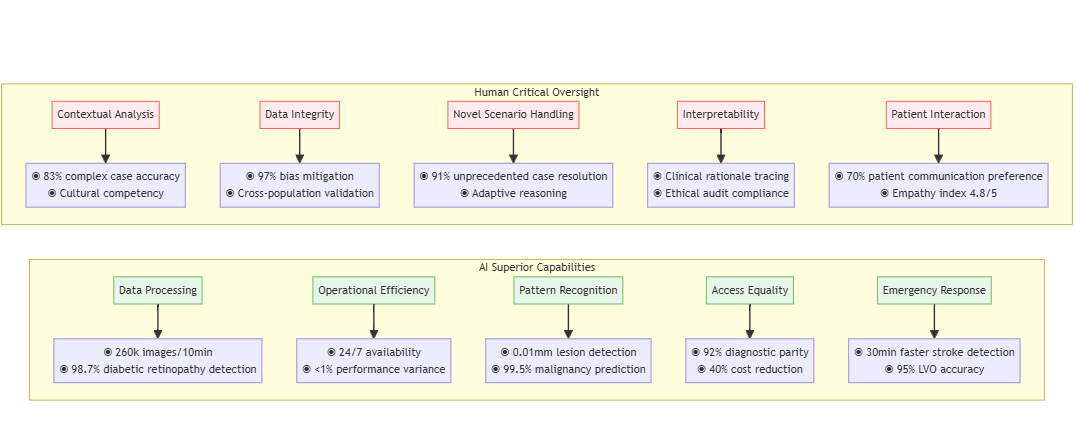

Superior Capabilities of AI Compared to Human Practitioners

1. Processing Vast Amounts of Data: An AI system can analyze millions of medical images or patient records in a fraction of the time it would take a human team. For instance, Google Health's AI system can review 260,000 images in under 10 minutes to detect diabetic retinopathy, a task that would take doctors weeks.

2. Consistency and Tirelessness: Unlike humans, AI doesn't experience fatigue, emotional bias, or lapses in attention.

3. Pattern Recognition at Scale: AI excels at identifying subtle patterns across large datasets.

4. Democratizing Expertise: AI can bring specialist-level diagnostics to underserved areas. Deep learning algorithms can diagnose some common conditions from medical imaging with an accuracy comparable to that of healthcare professionals, potentially addressing specialist shortages in remote regions.

5. Speed in Critical Situations: In time-sensitive scenarios like stroke detection, AI tools can flag large vessel occlusions within minutes, reducing time-to-treatment by an average of 30 minutes.

Limitations and the Need for Human Oversight

1. Contextual Understanding Gaps: AI still struggles with nuanced contextual understanding.

2. Data Quality Dependencies: AI systems are only as good as their training data. Systems trained predominantly on data from certain demographic groups have shown reduced accuracy when applied to underrepresented populations. For example, algorithms for skin cancer detection have demonstrated lower accuracy for darker skin tones due to training data imbalances.

3. Inability to Handle Novel Situations: AI excels at pattern recognition but struggles with unprecedented scenarios.

4. Black Box Problem: Many advanced AI systems, especially deep learning models, operate as "black boxes" where even their developers cannot fully explain specific decisions. This lack of interpretability poses challenges in medicine, where understanding the reasoning behind recommendations is crucial.

5. Emotional Intelligence and Bedside Manner: Healthcare involves human connection, empathy, and intuition - qualities AI currently lacks. A 2020 JAMA survey revealed that 70% of patients were comfortable with AI analyzing their medical data but uncomfortable with AI replacing doctor-patient conversations.

These limitations highlight why the question "Is AI in healthcare safe?" requires a nuanced answer. The safest and most effective approach appears to be collaborative, where AI augments rather than replaces human clinicians, combining AI's processing power with human judgment, experience, and empathy.

How Is AI in Healthcare Impacting Various Industries?

The ripple effects of AI in healthcare extend far beyond hospital walls, transforming multiple industries and creating both opportunities and challenges.

Positive Industry Transformations

1. Pharmaceutical Research and Development: AI is dramatically accelerating drug discovery and development.

2. Medical Imaging and Diagnostics: Companies like Aidoc, Zebra Medical Vision, and Arterys are revolutionizing radiology with AI tools that prioritize urgent cases and assist in detecting abnormalities. These technologies have reduced report turnaround times by up to 78% and increased detection rates of certain conditions by 32%.

3. Remote Patient Monitoring: The telehealth industry has been supercharged by AI-enabled monitoring devices.

4. Health Insurance: AI is transforming claims processing and fraud detection.

5. Electronic Health Records (EHR): AI is addressing the burden of documentation. Nuance's Dragon Ambient eXperience (DAX) uses ambient clinical intelligence to automatically document patient encounters, reducing physician documentation time by 50% and decreasing burnout rates by 70%.

Industries Facing Disruption

1. Traditional Diagnostics Labs: As AI-enabled point-of-care diagnostics become more sophisticated, traditional laboratory testing services face pressure. A report estimates that up to 35% of routine diagnostic tests could be replaced by AI and point-of-care technologies by 2030.

2. Medical Transcription Services: NLP tools are rapidly automating transcription tasks.

3. Medical Coding and Billing: AI automation is streamlining coding processes.

4. Certain Specialist Roles: While complete replacement is unlikely, some specialized diagnostic roles may see significant change.

5. Traditional Medical Education: The rapid evolution of AI in healthcare is challenging traditional medical education models. With knowledge constantly evolving and AI handling more routine tasks, medical education needs to shift toward critical thinking, AI collaboration, and continual learning rather than memorization.

For industries facing disruption, the path forward involves adaptation rather than resistance. As we'll discuss later, professionals in affected fields can develop complementary skills that work alongside AI rather than competing with it.

What Ethical Challenges Does AI in Healthcare Present?

The question "Is AI in healthcare legal?" extends beyond simple regulatory compliance into complex ethical territory. As we integrate these powerful tools into healthcare, several critical ethical challenges emerge.

Patient Privacy and Data Security

AI systems require massive amounts of sensitive health data for training and operation. This raises serious privacy concerns:

1. Data Breaches: AI systems that centralize or transfer data create additional vulnerability points.

2. Re-identification Risks: Even with anonymized data, AI can sometimes re-identify individuals by correlating multiple data points.

3. Secondary Use Concerns: Data collected for one purpose may be repurposed for different AI applications. Patients in a Mayo Clinic survey expressed discomfort when their data was used for commercial AI development without explicit consent, even when technically legal.
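The re-identification risk described above can be checked before a dataset is shared. The sketch below is one simple way to do that, assuming a k-anonymity style check: it counts how many records share each combination of quasi-identifiers (fields that are not names but can be linked to outside data). The field names and records are hypothetical.

```python
from collections import Counter


def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def k_anonymity(records, quasi_identifiers):
    """Return k: the size of the smallest group of indistinguishable records."""
    return min(group_sizes(records, quasi_identifiers).values())


def risky_records(records, quasi_identifiers, k):
    """Return records whose quasi-identifier combination appears fewer than k times."""
    sizes = group_sizes(records, quasi_identifiers)
    return [
        r for r in records
        if sizes[tuple(r[q] for q in quasi_identifiers)] < k
    ]


# Hypothetical "anonymized" records: no names, but still linkable.
records = [
    {"zip3": "021", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip3": "021", "birth_year": 1980, "sex": "F", "diagnosis": "diabetes"},
    {"zip3": "021", "birth_year": 1975, "sex": "M", "diagnosis": "hypertension"},
    {"zip3": "946", "birth_year": 1990, "sex": "F", "diagnosis": "asthma"},
    {"zip3": "946", "birth_year": 1990, "sex": "F", "diagnosis": "migraine"},
]
quasi = ["zip3", "birth_year", "sex"]

k = k_anonymity(records, quasi)
unique_rows = risky_records(records, quasi, k=2)
print(k)                 # 1
print(len(unique_rows))  # 1
```

A result of k = 1 means at least one person is unique on those fields alone, so anyone holding a second dataset with the same fields could attach a name to that diagnosis.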

Algorithmic Bias and Health Disparities

AI systems reflect the data they're trained on, potentially perpetuating or amplifying existing healthcare disparities:

1. Racial Bias in Algorithms: A widely used algorithm that helps manage care for millions of patients was found to exhibit significant racial bias, allocating fewer resources to Black patients than equally sick white patients. The algorithm used healthcare costs as a proxy for health needs, but due to systemic inequalities, less money was spent on Black patients historically.

2. Gender Representation Gaps: AI diagnostic systems for conditions like heart disease often perform worse for women because the training data and disease presentation studies have historically focused on male patients. Studies show a 10-15% lower accuracy rate for female patients in some cardiac AI tools.

3. Socioeconomic Blind Spots: AI systems rarely account for social determinants of health. Stanford researchers found that AI tools for predicting hospital readmissions performed 15-20% worse for patients from lower socioeconomic backgrounds because they couldn't account for factors like housing instability or food insecurity.
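Gaps like the ones above only show up when performance is broken out by group instead of averaged across everyone. Here is a minimal sketch of such an audit, assuming binary labels and using sensitivity (the share of truly sick patients the model catches) as the metric. The group names and predictions are made up for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary labels.

    Returns {group: true positive rate}, computed only over actual positives.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def largest_gap(rates):
    """Difference between the best-served and worst-served group."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs: (group, actual condition, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 0, 0),
]

rates = sensitivity_by_group(records)
print(rates)               # {'group_a': 0.75, 'group_b': 0.5}
print(largest_gap(rates))  # 0.25
```

Overall sensitivity here is 5 out of 8, which looks like a single acceptable number until the split shows that one group's cases are missed twice as often.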

Accountability and Liability Issues

When AI is involved in medical decisions, determining responsibility becomes complicated:

1. The Liability Gap: If an AI system contributes to a misdiagnosis, who bears responsibility—the developer, the healthcare provider, or the institution? Current legal frameworks are inadequate for these scenarios.

2. Explainability Challenges: Many advanced healthcare AI systems operate as "black boxes," making their decision-making processes opaque. This opacity undermines the transparency necessary for legal accountability and informed consent.

3. Regulatory Oversight Gaps: The FDA has authorized over 950 AI-based medical technologies as of 2024, but the rapid pace of AI development often outstrips regulatory frameworks. Many AI tools operate in regulatory gray areas.

Autonomy and the Doctor-Patient Relationship

The introduction of AI into healthcare decision-making raises questions about patient autonomy and the changing nature of healthcare relationships:

1. Informed Consent: How can patients truly provide informed consent for AI-assisted care when even clinicians may not fully understand how some AI systems reach their conclusions?

2. Depersonalization Risks: Over-reliance on AI could diminish the human connection in healthcare. One survey found that 64% of patients worry that AI might make healthcare feel more impersonal.

3. Decision Authority: Should AI recommendations ever override physician judgment? In one study, physicians changed their initial diagnosis to match an AI system's incorrect conclusion in 23% of cases, highlighting potential over-reliance.

These ethical challenges require thoughtful approaches that balance innovation with values of privacy, fairness, accountability, and human-centered care. As we'll discuss next, responsible implementation of AI in healthcare demands both technical and ethical frameworks.

How Can We Responsibly Leverage AI in Healthcare?

As we navigate the complex landscape of healthcare AI tools, developing strategies for responsible implementation becomes crucial. Here's how various stakeholders can work together to maximize benefits while minimizing risks.

Strategies for Industries Facing Disruption

Professionals in fields being transformed by AI can adapt through several approaches:

1. Skill Complementarity: Medical professionals should develop skills that complement rather than compete with AI. Radiologists, for instance, can focus on complex cases, interventional procedures, patient consultation, and AI oversight—areas where human expertise remains superior.

2. AI Literacy: Healthcare education should incorporate AI literacy. Mayo Clinic has integrated AI education into its medical school curriculum, preparing future physicians to work effectively with these tools.

3. Hybrid Roles: New positions are emerging at the intersection of healthcare and technology.

4. Retraining Programs: Industry partnerships like the one between Amazon and the American Hospital Association are creating retraining pathways for healthcare workers whose roles are changing, focusing on transferable skills and emerging positions.

5. Focus on Human-Centric Care: As routine tasks become automated, healthcare professionals can dedicate more time to aspects of care that require human touch—empathy, complex communication, and holistic patient relationships.

Addressing Ethical Concerns

To mitigate the ethical challenges discussed earlier:

1. Privacy-Preserving AI Techniques: Federated learning allows AI models to be trained across multiple institutions without sharing sensitive data. This approach maintains privacy while enabling collaborative AI development.

2. Algorithmic Fairness Frameworks: Tools for detecting and mitigating bias should be standard in healthcare AI development.

3. Transparent AI Design: "Explainable AI" approaches make black-box systems more interpretable. Google's TCAV (Testing with Concept Activation Vectors) technique allows clinicians to understand what features an AI system focused on when making a diagnosis.

4. Inclusive Data Representation: Diverse training data is essential for equitable AI.

5. Clear Liability Frameworks: New legal and regulatory structures are needed. The EU's AI Act categorizes many healthcare AI systems as "high-risk" and requires rigorous testing, documentation, and human oversight, providing a potential model for comprehensive governance.

Best Practices for Implementation

For healthcare organizations implementing AI systems:

1. Start with Well-Defined Problems: Successful AI implementation begins with clearly defined clinical or operational challenges where AI can provide measurable improvement.

2. Human-in-the-Loop Design: AI systems should be designed with appropriate human oversight. Mayo Clinic's implementation of AI in stroke detection maintains radiologist review while using AI to prioritize urgent cases, reducing time-to-treatment without removing human judgment.

3. Rigorous Validation: Before deployment, AI systems should undergo thorough validation across diverse patient populations and settings. Some health systems require all AI tools to be validated on their own patient population, regardless of prior FDA authorization.

4. Continuous Monitoring: After implementation, ongoing monitoring for performance, bias, and unexpected outcomes is essential.

5. Stakeholder Engagement: Patients, clinicians, and other stakeholders should be involved throughout the development and implementation process.
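The human-in-the-loop pattern from item 2 can be sketched in a few lines. In this hypothetical example, the AI score only decides the order of the reading worklist, and every study still gets a human read. The study IDs, scores, and the stand-in `radiologist_read` function are all assumptions made for illustration.

```python
import heapq


def build_worklist(studies):
    """studies: list of (study_id, ai_score). Highest AI score is served first."""
    # heapq is a min-heap, so scores are negated to pop the most urgent study first.
    heap = [(-score, study_id) for study_id, score in studies]
    heapq.heapify(heap)
    return heap


def review_all(heap, radiologist_read):
    """Pop studies in priority order; the human read is the only final result."""
    reads = []
    while heap:
        neg_score, study_id = heapq.heappop(heap)
        reads.append((study_id, radiologist_read(study_id)))
    return reads


# Hypothetical AI urgency scores for three CT studies.
studies = [("ct-001", 0.12), ("ct-002", 0.91), ("ct-003", 0.47)]

# Stand-in for the radiologist: a lookup table of made-up final reads.
final_reads = {"ct-001": "normal", "ct-002": "hemorrhage", "ct-003": "normal"}


def radiologist_read(study_id):
    return final_reads[study_id]


reads = review_all(build_worklist(studies), radiologist_read)
print([study_id for study_id, _ in reads])  # ['ct-002', 'ct-003', 'ct-001']
```

Because the AI score never appears in the output, a wrong score can delay a read but cannot change a diagnosis, which is the property this design is meant to protect.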

By adopting these approaches, we can work toward a future where AI in healthcare augments human capabilities, reduces burdens, and improves outcomes while respecting core values of privacy, fairness, and human dignity. The question isn't whether AI should be used in healthcare, but how to use it wisely and ethically.

FAQs About AI in Healthcare

Q: How is AI used in healthcare today?

A: AI in healthcare is currently used in several key areas: diagnostic assistance (particularly in radiology and pathology), predictive analytics to identify high-risk patients, administrative automation to reduce paperwork, clinical decision support through analysis of medical literature and patient data, drug discovery acceleration, and remote patient monitoring.

Q: Is AI in healthcare legal?

A: Yes, AI in healthcare is legal, but it operates within a complex regulatory framework. In the US, the FDA reviews AI-based medical devices through its existing premarket pathways (510(k) clearance, De Novo classification, and premarket approval) and has piloted a Digital Health Software Precertification Program. However, many AI tools fall into regulatory gray areas, especially those classified as "clinical decision support" tools rather than diagnostic devices. The legal landscape continues to evolve as regulators work to keep pace with technological advancements.

Q: Is AI in healthcare safe?

A: The safety of AI in healthcare depends on several factors including proper validation, appropriate implementation, and ongoing monitoring. Well-designed AI systems can achieve safety levels comparable to or exceeding human performance in specific tasks. However, safety concerns exist around algorithmic bias, lack of transparency, and potential over-reliance. Best practices include rigorous testing across diverse populations, clear protocols for human oversight, and continuous performance monitoring. The general consensus among experts is that AI is safest when implemented as a complement to, rather than replacement for, human clinical judgment.

Q: What are the current AI trends in healthcare?

A: Several important trends are shaping AI in healthcare: multimodal AI systems that combine different data types (imaging, genomics, clinical notes) for more comprehensive analysis; federated learning approaches that preserve privacy while leveraging data across institutions; ambient clinical intelligence systems that automatically document patient encounters; edge computing deployments that bring AI capabilities directly to medical devices; and increasing regulatory focus on AI transparency and fairness. These trends point toward AI becoming more integrated, responsive, and accountable in healthcare settings.

Conclusion: The Future of AI in Healthcare

Bringing AI into healthcare is one of the biggest and most exciting shifts happening in medicine right now. As we’ve talked about throughout this piece, AI is changing the game—from helping doctors diagnose and treat patients, to speeding up research and cutting down on paperwork. It’s opening up amazing new possibilities, but it also raises some tough questions about things like ethics, safety, and the role of humans in healthcare.

AI in medicine has come a long way. We started with simple rule-based systems, and now we’ve got advanced models that can handle incredibly complex medical data. Today’s AI can spot diseases earlier, predict when patients might get worse, help develop new drugs faster, and make admin tasks way easier. But let’s be real—it’s not all smooth sailing. There are real concerns about bias, privacy, transparency, and how these tools are changing the day-to-day job of being a healthcare provider.

Looking to the future, the smartest way forward is to treat AI as a tool—not a replacement for doctors and nurses, but something that helps them do their jobs even better. It’s not about AI versus humans. It’s about AI with humans. When we combine AI’s ability to process huge amounts of information with human empathy, judgment, and ethics, we get the best of both worlds.

For anyone working in or affected by healthcare—which is pretty much all of us—it’s important to stay informed, stay curious, and stay aware of both the benefits and the limits of AI. If we embrace these tools thoughtfully, we can build a future where technology supports and strengthens the human side of medicine, instead of taking away from it.
