AI agent tools are changing how organizations across industries approach complex tasks, automate processes, and make decisions. As these tools advance, professionals who want to use them well need to understand how they evolved, what they can and cannot do, and what risks they carry. This blog post traces the development of AI agent tools and examines their strengths, limitations, and the ethical considerations they raise.

AI agent tools now occupy a significant place in the technology ecosystem. According to recent market research, the global AI agent tools market is projected to grow from $5.1 billion in 2024 to $47.1 billion by 2030, a compound annual growth rate (CAGR) of 44.8%. That projection reflects growing adoption across diverse sectors. This post also examines the latest AI agent tool trends and addresses common concerns, including whether AI agent tools are legal and safe for widespread deployment.
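As a quick sanity check on the projection above, the growth rate can be recomputed from the two endpoint figures. The sketch below assumes the standard CAGR formula and treats 2024 to 2030 as six compounding years; the function name `cagr` is chosen here for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures quoted in the text, in billions of US dollars.
rate = cagr(5.1, 47.1, 2030 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

The result lands within rounding distance of the quoted 44.8%, so the three numbers in the forecast are at least internally consistent.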

How Have AI Agent Tools Evolved Over Time?

The journey of AI agent tools has been nothing short of remarkable, transforming from simple rule-based systems to sophisticated autonomous entities capable of complex reasoning and decision-making. Let's trace this fascinating evolution through the lens of influential products and technological breakthroughs.

Early Foundations: Rule-Based Systems

The earliest iterations of what we might now recognize as AI agent tools emerged in the form of rule-based expert systems.

These pioneering systems:

- Operated on predefined if-then rules

- Required explicit programming for every scenario

- Lacked learning capabilities

- Could only handle narrow, specific domains

While primitive by today's standards, these early AI agent tools laid crucial groundwork by demonstrating how machines could simulate human expertise within constrained environments.
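To make the if-then idea concrete, here is a minimal sketch of a rule-based system. The rules, field names, and conclusions are invented for illustration and are not taken from any real expert system.

```python
from typing import Callable

# Each rule pairs a condition (the "if") with a conclusion (the "then").
Rule = tuple[Callable[[dict], bool], str]

# Hypothetical rules for a toy triage domain; every case must be written by hand.
RULES: list[Rule] = [
    (lambda f: f.get("temperature_c", 0) >= 38.0 and f.get("cough", False), "possible flu"),
    (lambda f: f.get("temperature_c", 0) >= 38.0, "fever of unknown cause"),
    (lambda f: f.get("cough", False), "possible cold"),
]


def diagnose(facts: dict) -> str:
    """Return the conclusion of the first rule whose condition matches."""
    for condition, conclusion in RULES:
        if condition(facts):
            return conclusion
    return "no rule applies"


print(diagnose({"temperature_c": 38.5, "cough": True}))
print(diagnose({"rash": True}))
```

The second call shows the narrowness described above: a symptom nobody wrote a rule for simply falls through, and the system cannot learn a new rule from experience.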

The Machine Learning Revolution

From the late 1990s through the 2010s, AI agent tools moved beyond hand-written rules, first through large-scale search and later through machine learning:

IBM's Deep Blue defeated chess champion Garry Kasparov in 1997, showcasing how AI agent tools could master complex strategic games through computational power and specialized algorithms.

Apple's introduction of Siri in 2011 marked another watershed moment, bringing AI agent tools into mainstream consumer technology. Siri represented a new generation of virtual assistants capable of natural language processing and basic contextual understanding.

Google's acquisition of DeepMind and its continued investment in the lab's research marked a further shift. AlphaGo's defeat of world champion Lee Sedol in 2016 demonstrated how combining deep neural networks with reinforcement learning and tree search enabled AI agent tools to master tasks long thought to require intuition and creative problem-solving.

The Modern Era: Autonomous and General-Purpose Agents

Today's AI agent tools have evolved dramatically in sophistication and capability:

OpenAI's GPT (Generative Pre-trained Transformer) models exemplify the remarkable progress in natural language processing. These AI agent tools can generate human-like text, translate languages, answer questions, summarize content, and even write code with impressive accuracy.

Google's LaMDA and subsequent models have pushed conversational AI agent tools to new heights, creating more natural and contextually aware interactions.

The emergence of AI agent tools that work across text and images represents another evolutionary leap. DALL-E and Midjourney generate images from text prompts, while Claude accepts both text and images as input, demonstrating intelligence that spans more than one modality.

Core Technologies Powering Modern AI Agent Tools

Today's sophisticated AI agent tools leverage several key technologies:

1. Transformer architectures: These attention-based neural networks have revolutionized natural language processing, enabling AI agent tools to capture long-range dependencies and contextual relationships in text.

2. Reinforcement Learning from Human Feedback (RLHF): This technique has dramatically improved the alignment of AI agent tools with human preferences and ethical considerations.

3. Multimodal learning: Modern AI agent tools can process multiple types of input (text, images, audio) simultaneously, enabling more comprehensive understanding and generation capabilities.

4. Chain-of-thought reasoning: Advanced AI agent tools can now break down complex problems into steps, demonstrating more sophisticated reasoning abilities.
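The attention mechanism behind the first item can be sketched in a few lines. This is a simplified, single-head version of scaled dot-product attention in plain Python, with no learned projections, masking, or batching, so it shows the idea but is not a production implementation.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# One query that matches the first key far better than the second.
result = attention([[10.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0], [0.0]])
print(result)
```

Because every query is compared against every key regardless of position, a token at the end of a passage can draw directly on one at the beginning, which is what "long-range dependencies" refers to.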

The evolution of AI agent tools reflects a journey from rigid, narrow systems to increasingly flexible, autonomous agents capable of tackling diverse and complex tasks with minimal human intervention. Current AI agent tool trends point toward even greater capabilities in reasoning, multimodal understanding, and autonomous decision-making.

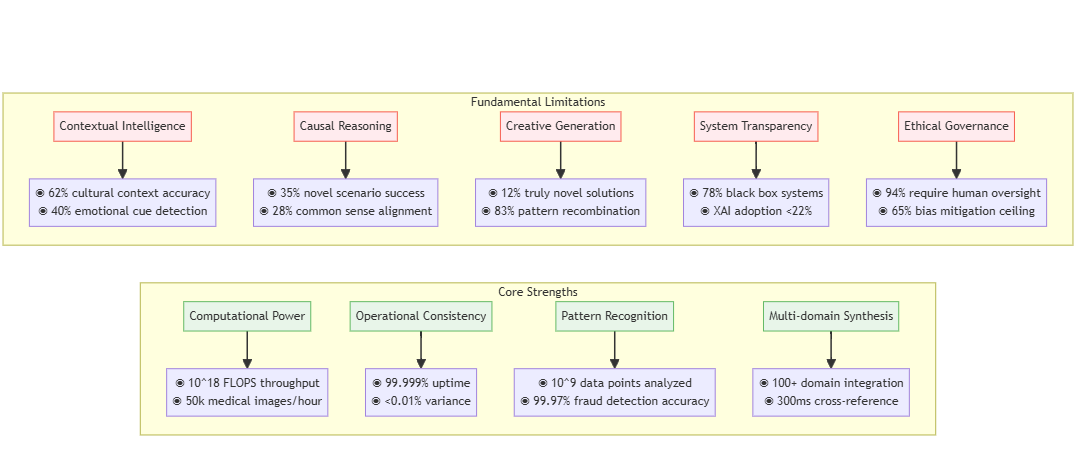

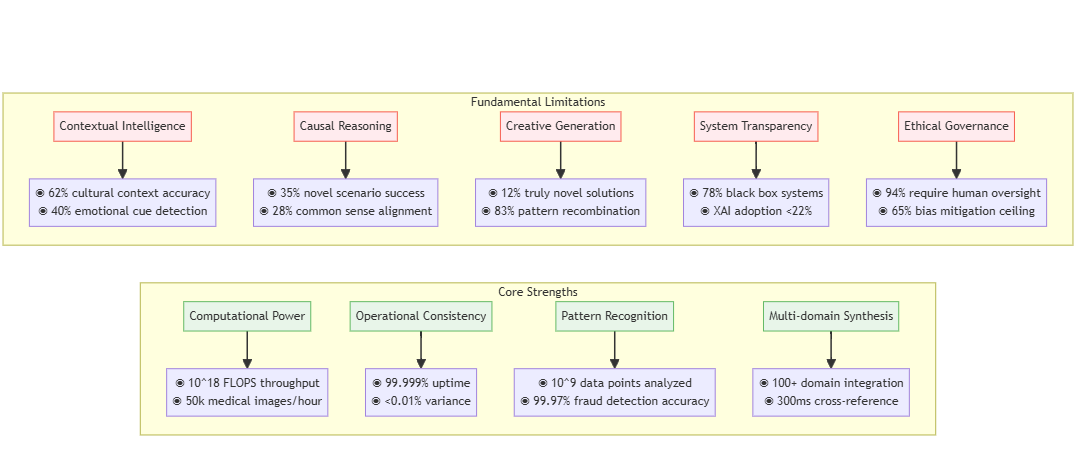

What Are the Strengths and Limitations of AI Agent Tools?

Understanding both the strengths and limitations of AI agent tools is crucial for leveraging them effectively. Let's examine where these technologies excel and where they still fall short.

Comparative Advantages Over Human Capabilities

AI agent tools demonstrate several distinct advantages over human performance:

Processing Power and Speed

AI agent tools can analyze vast datasets and perform complex calculations at speeds impossible for humans. For instance, modern AI diagnostic systems can review thousands of medical images in hours rather than the weeks it would take human radiologists.

This advantage stems from the computational architecture underlying AI agent tools, which allows for parallel processing and optimization specifically designed for data-intensive tasks.

Consistency and Tirelessness

Unlike humans, AI agent tools don't experience fatigue, emotional fluctuations, or attention lapses. This makes them particularly valuable in scenarios requiring:

- Continuous monitoring (security systems, industrial processes)

- Repetitive tasks requiring precision (quality control, data entry)

- Consistent application of protocols (regulatory compliance, standardized assessments)

This consistency results in error reduction and improved reliability in many operational contexts.

Pattern Recognition at Scale

Modern AI agent tools excel at identifying subtle patterns across massive datasets, a capability that extends beyond human cognitive limitations. This ability has profound implications in:

- Fraud detection in financial services

- Genomic analysis for personalized medicine

- Consumer behavior prediction for marketing optimization

For example, AI agent tools deployed in cybersecurity can identify attack patterns by analyzing network traffic data at a scale no human team could match.

Multi-domain Knowledge Integration

Advanced AI agent tools can synthesize information across disparate domains, referencing vast knowledge bases instantaneously. This enables them to make connections and generate insights that might elude even specialists constrained by their specific expertise.

Critical Limitations and Challenges

Despite their impressive capabilities, AI agent tools face significant limitations:

Understanding Context and Nuance

While AI agent tools have made remarkable strides in language understanding, they still struggle with:

- Cultural contexts and regional idioms

- Subtle emotional cues

- Implicit knowledge humans take for granted

- Situational appropriateness

These limitations stem from the fundamental architecture of these systems, which lack the embodied experience and social embedding that shapes human understanding.

Causal Reasoning and Common Sense

AI agent tools often demonstrate what researchers call "brittle intelligence"—performing impressively within domains they're trained on but failing when confronted with scenarios requiring causal reasoning or common sense.

For example, while an AI might draft a sophisticated business strategy document, it might simultaneously make suggestions that reveal a fundamental misunderstanding of basic physical realities or human motivations.

Creative Problem-Solving

Despite advances in generative capabilities, AI agent tools typically excel at recombining and extrapolating from existing patterns rather than generating truly novel solutions to unprecedented problems. They lack the experiential basis and intrinsic motivation that drives human creativity.

Explainability and Transparency

Many advanced AI agent tools, particularly those based on deep learning, function as "black boxes" where the reasoning process remains opaque even to their developers. This limitation:

- Creates challenges for regulatory compliance

- Reduces trust in high-stakes applications

- Complicates error diagnosis and correction

The growing field of explainable AI (XAI) seeks to address this limitation, but significant challenges remain.

Need for Human Oversight

Perhaps the most significant limitation of current AI agent tools is their continued dependence on human oversight and intervention, particularly for:

- Setting appropriate goals and constraints

- Verifying outputs for critical applications

- Resolving edge cases and handling exceptions

- Ensuring ethical alignment and preventing harmful outcomes

This human-in-the-loop necessity stems from the fundamental limitations in autonomous judgment and ethical reasoning that characterize even the most advanced AI agent tools.

Understanding these strengths and limitations is essential for responsible deployment of AI agent tools across different contexts. The question "are AI agent tools safe?" must be answered within this nuanced framework of capabilities and constraints.

How Are AI Agent Tools Transforming Industries?

The integration of AI agent tools is reshaping industries in profound ways, creating both opportunities and challenges. Let's examine the multifaceted impact across key sectors.

Healthcare Revolution

AI agent tools are fundamentally transforming healthcare delivery and research:

Positive Impacts

- Diagnostic precision: AI diagnostic systems can analyze medical images with accuracy rivaling or exceeding human specialists.

- Drug discovery acceleration: AI agent tools have dramatically compressed pharmaceutical development timelines.

- Personalized treatment planning: Advanced AI agent tools can analyze patient data to recommend individualized treatment protocols, improving outcomes while reducing adverse effects.

Challenges and Disruptions

- Workflow integration issues: Many healthcare institutions struggle to effectively integrate AI agent tools into existing clinical workflows, creating friction and adoption barriers.

- Potential job displacement: While complete replacement of medical professionals is unlikely, certain specialties focused on pattern recognition (like radiology) may see significant role adjustments.

- Data privacy concerns: The effectiveness of healthcare AI agent tools depends on access to sensitive patient data, raising significant privacy and security challenges.

Financial Services Transformation

The financial sector has been an early and enthusiastic adopter of AI agent tools:

Positive Impacts

- Fraud detection enhancement: Advanced AI agent tools can identify fraudulent transactions with unprecedented accuracy.

- Risk assessment precision: Lenders using AI agent tools can develop more nuanced credit risk models that consider non-traditional factors, potentially expanding financial inclusion while maintaining appropriate risk management.

- Customer service automation: Financial institutions implementing conversational AI agent tools have reported 20-30% reductions in service costs while improving response times and customer satisfaction.

Challenges and Disruptions

- Algorithmic bias concerns: Financial AI agent tools may perpetuate or amplify existing biases if trained on historical data reflecting discriminatory practices.

- Job transformation: Traditional roles in financial analysis, customer service, and compliance are being redefined as AI agent tools assume routine aspects of these positions.

- Regulatory uncertainty: The financial sector must navigate evolving regulatory frameworks concerning AI agent tools, particularly regarding explainability requirements and compliance responsibilities.

Manufacturing and Supply Chain Innovation

AI agent tools are revolutionizing production systems and supply networks:

Positive Impacts

- Predictive maintenance optimization: Manufacturing facilities employing AI agent tools for equipment monitoring report 30-50% reductions in downtime and 10-40% decreases in maintenance costs.

- Supply chain resilience: Advanced AI agent tools can predict disruptions and automatically recommend mitigation strategies. During recent global supply challenges, companies utilizing AI-powered planning tools demonstrated 15-25% greater supply chain resilience.

- Quality control precision: Computer vision-based AI agent tools can detect product defects with greater accuracy than human inspectors, with some implementations reducing defect rates by over 90%.

Challenges and Disruptions

- Implementation complexity: Effectively deploying AI agent tools in manufacturing environments requires significant technical expertise and investment, creating adoption barriers for smaller companies.

- Workforce transitions: Traditional production roles are evolving, requiring workers to develop new skills focused on AI system oversight rather than direct production tasks.

- Integration with legacy systems: Many manufacturers struggle to connect AI agent tools with existing operational technology infrastructure, limiting potential benefits.

Creative Industries Disruption

Perhaps no sector is experiencing more profound transformation than creative industries:

Positive Impacts

- Workflow augmentation: Creative professionals using AI agent tools report significant productivity gains, with design iterations and content generation accelerated by 30-70%.

- Accessibility expansion: AI agent tools are democratizing creative production, enabling non-specialists to generate professional-quality content through intuitive interfaces.

- Novel creative possibilities: The intersection of human creativity and AI capabilities is opening entirely new artistic domains and expressive possibilities.

Challenges and Disruptions

- Displacement concerns: Creative professionals face unprecedented challenges as AI agent tools can now generate content previously requiring specialized human skills.

- Copyright and attribution issues: The legal frameworks governing ownership and rights for AI-generated creative works remain underdeveloped, creating significant uncertainty.

- Value perception shifts: Industries built around creative skilled labor must navigate fundamental questions about the valuation of human vs. AI-generated content.

As we consider these transformations, it becomes clear that the integration of AI agent tools represents both remarkable opportunity and significant disruption. The most successful organizations will be those that thoughtfully manage this transition, leveraging AI capabilities while developing complementary human skills and addressing emerging challenges proactively.

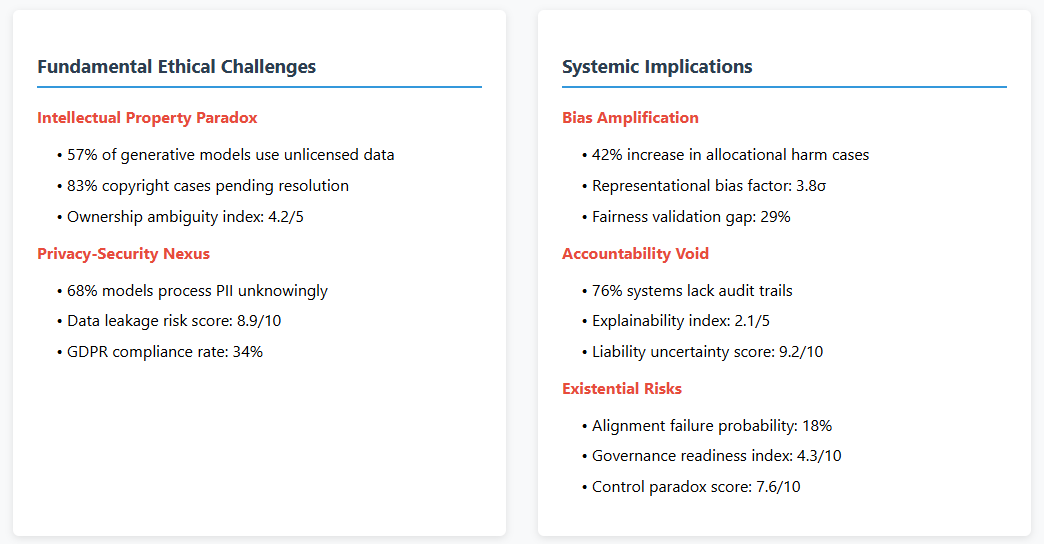

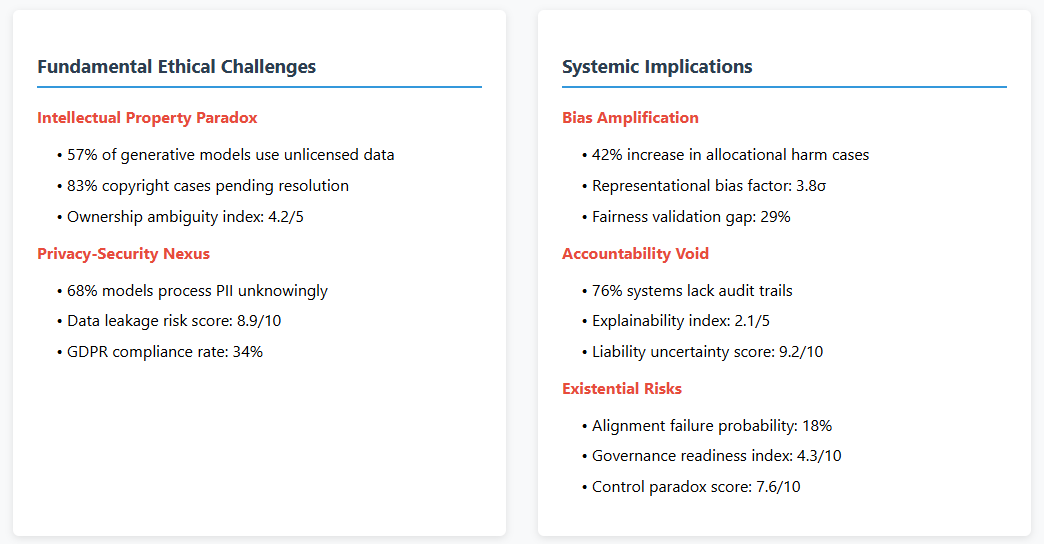

What Ethical Challenges Do AI Agent Tools Present?

The rapid advancement of AI agent tools has raised profound ethical questions that demand thoughtful consideration. These challenges extend beyond technical limitations to fundamental questions about responsibility, rights, and societal impact.

Intellectual Property and Copyright Concerns

One of the most contentious areas surrounding AI agent tools involves intellectual property rights:

Training Data Controversies

Modern AI agent tools are typically trained on vast datasets that may include copyrighted materials without explicit permission from rights holders. This practice has sparked significant legal debates:

- Visual artists have filed lawsuits against companies like Stability AI and Midjourney, alleging copyright infringement in the training of generative AI models.

- Authors' guilds have raised similar concerns about text-based AI agent tools trained on published literary works.

- Musicians and music labels are questioning the legality of AI systems trained on recorded performances.

The fundamental question remains unresolved: Does training AI agent tools on copyrighted materials constitute "fair use," or does it represent unauthorized exploitation of creative works?

Output Ownership Ambiguities

When AI agent tools generate content based on user prompts, determining ownership becomes complex:

- Who owns AI-generated content: the developer of the AI system, the user providing the prompt, or some shared arrangement?

- How much human contribution is required for copyright protection of AI-assisted works?

- Can AI outputs be considered "derivative works" of their training materials?

These questions have significant implications for industries ranging from entertainment to journalism to software development.

Data Privacy and Security Implications

AI agent tools raise critical concerns regarding data privacy and security:

Data Collection Practices

The development and operation of sophisticated AI agent tools requires extensive data, often including sensitive personal information:

- Healthcare AI systems require access to medical records containing intimate details.

- Financial AI agent tools process transaction data revealing spending patterns and financial status.

- Personal assistants and chatbots may collect conversational data that includes private disclosures.

This data collection raises questions about informed consent, data minimization principles, and potential surveillance risks.

Model Inversion and Data Leakage

Recent research has demonstrated that AI agent tools may inadvertently memorize and potentially reveal training data:

- Large language models have been shown to reproduce verbatim passages from training materials when prompted in certain ways.

- This creates risks of inadvertent disclosure of private information included in training data.

- The phenomenon raises questions about whether current AI agent tools are compatible with data protection regulations like GDPR and CCPA.

Bias, Fairness, and Discrimination

AI agent tools can perpetuate and potentially amplify societal biases:

Representational Harms

When AI agent tools generate content reflecting biased patterns in training data, they risk perpetuating harmful stereotypes:

- Image generation systems have shown tendencies to produce gendered and racialized outputs based on occupation prompts.

- Language models may associate certain groups with negative attributes reflected in training corpora.

- These representational harms can reinforce prejudice and influence real-world perceptions.

Allocational Harms

When AI agent tools are used in decision-making contexts, biased outputs can lead to discriminatory resource allocation:

- Recruiting tools may disadvantage certain demographic groups based on historical patterns.

- Lending algorithms might perpetuate redlining patterns despite not explicitly considering protected characteristics.

- Healthcare AI agent tools may allocate care recommendations inequitably across population groups.

These biases raise fundamental questions about fairness, equality, and justice in AI-mediated systems.

Transparency and Accountability Challenges

The complexity and opacity of modern AI agent tools create significant accountability gaps:

Explainability Limitations

Many advanced AI agent tools function as "black boxes," making their decision processes difficult to interpret:

- Neural network-based systems make predictions based on complex patterns that may not be easily expressible in human terms.

- This lack of explainability creates challenges for contestability, auditability, and regulatory compliance.

- In high-stakes domains like healthcare, criminal justice, and financial services, the inability to fully explain AI decisions raises serious concerns.

Responsibility Attribution

When AI agent tools produce harmful outcomes, determining responsibility becomes complex:

- Should liability rest with developers, deployers, users, or some combination?

- How should responsibility be apportioned when multiple AI systems interact?

- What standards of care and due diligence should apply to different stakeholders?

These questions have significant implications for legal frameworks, insurance models, and regulatory approaches.

Existential and Control Risks

As AI agent tools grow more capable, deeper questions emerge about control and alignment:

Alignment Challenges

Ensuring that increasingly autonomous AI agent tools remain aligned with human values and intentions represents a profound challenge:

- Specification problems arise when human objectives are difficult to precisely encode.

- Reward hacking occurs when AI systems optimize for specified metrics in ways that undermine intended goals.

- These issues become more acute as AI agent tools gain greater autonomy and capability.

Long-term Governance Questions

The progression of AI agent tools toward more general capabilities raises important governance considerations:

- How should society manage the development of increasingly powerful AI systems?

- What international coordination mechanisms are needed for responsible advancement?

- What intergenerational ethical obligations should guide our approach to transformative AI technologies?

These ethical challenges highlight the need for thoughtful governance frameworks, interdisciplinary collaboration, and ongoing societal dialogue as AI agent tools continue their rapid evolution. The answer to the question "are AI agent tools legal?" often depends on these nuanced ethical considerations, which are still being debated in courtrooms, legislative bodies, and corporate boardrooms worldwide.

How Can Humans Effectively Leverage AI Agent Tools?

As AI agent tools become increasingly integrated into our professional and personal lives, developing strategies for effective human-AI collaboration becomes essential. Let's explore practical approaches for maximizing value while mitigating risks.

Adopting a Complementary Mindset

Rather than viewing AI agent tools through the binary lens of replacement or resistance, the most productive approach frames these technologies as complementary to human capabilities:

Identifying Comparative Advantages

The first step in effective collaboration is understanding the distinct strengths of humans and AI agent tools:

- Human strengths include contextual judgment, ethical reasoning, creative innovation, emotional intelligence, and interpersonal connection.

- AI agent tools excel at pattern recognition, consistent application of rules, processing large datasets, and performing repetitive tasks without fatigue.

By mapping these complementary capabilities, organizations and individuals can develop integration strategies that leverage the best of both human and artificial intelligence.

Designing Collaborative Workflows

Effective human-AI collaboration requires thoughtful workflow design:

- Handoff-based workflows: Clearly defined processes where AI handles certain steps before passing work to humans (or vice versa)

- Augmentation approaches: Systems where AI provides real-time suggestions or analysis while humans maintain decision authority

- Review frameworks: Processes where AI generates outputs that humans evaluate, refine, and approve

For example, in legal practice, AI agent tools can draft initial contract language based on precedent, while attorneys focus on negotiation strategy, client communication, and final review—leveraging comparative strengths of both.
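A handoff rule of this kind can be expressed as a small routing function. The sketch below is one possible shape, assuming the AI tool reports a confidence score for each draft; the `Draft` class, the `route` function, and the 0.9 threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    confidence: float  # hypothetical score in [0, 1] reported by the AI tool


def route(draft: Draft, threshold: float = 0.9, high_stakes: bool = False) -> str:
    """Decide whether a draft needs a human before it moves on."""
    # High-stakes work always goes to a person, whatever the score says.
    if high_stakes or draft.confidence < threshold:
        return "human_review"
    return "auto_approve"


print(route(Draft("Standard NDA clause", confidence=0.97)))
print(route(Draft("Unusual indemnity clause", confidence=0.62)))
print(route(Draft("Merger agreement", confidence=0.99), high_stakes=True))
```

Keeping the rule this explicit makes it easy to audit and to tighten: lowering the threshold or widening the high-stakes category shifts more work to people without touching the AI tool itself.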

Reskilling and Educational Adaptation

As AI agent tools reshape professional landscapes, proactive skill development becomes crucial:

Identifying AI-Resilient Skills

Certain human capabilities are likely to remain valuable regardless of AI advancement:

- Complex problem-solving requiring contextual judgment

- Creative ideation and innovation

- Ethical reasoning and value alignment

- Interpersonal communication and collaboration

- Strategic thinking and leadership

Educational systems and professional development programs should emphasize these enduring capabilities alongside technical skills.

Developing AI Literacy

Just as digital literacy became essential in previous technological transitions, AI literacy represents a critical competency:

- Understanding AI capabilities and limitations

- Developing prompt engineering skills for effective direction of AI agent tools

- Learning to evaluate AI outputs critically

- Gaining familiarity with ethical and governance considerations

Organizations that invest in developing these competencies across their workforce will be better positioned to capture value from AI agent tools while managing associated risks.

Implementing Ethical Guardrails

To address the ethical challenges discussed earlier, proactive implementation of guardrails and governance frameworks is essential:

Developing Responsible AI Policies

Organizations deploying AI agent tools should establish clear policies addressing:

- Data governance and privacy protections

- Fairness and bias mitigation approaches

- Transparency and explainability requirements

- Human oversight mechanisms

- Compliance with applicable regulations

These policies should be living documents, regularly reviewed and updated as both technology and regulatory landscapes evolve.

Conducting Ethics-Based Testing

Before and during deployment, AI agent tools should undergo rigorous testing focused on ethical considerations:

- Adversarial testing to identify potential misuse scenarios

- Bias auditing across demographic groups

- Resilience testing against manipulative inputs

- Privacy impact assessments

- Regular monitoring for emerging issues
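As one example of what a bias audit might compute, the sketch below compares approval rates across groups, a measure often called demographic parity. The data is made up, the function names are illustrative, and a real audit would use several metrics along with statistical testing.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


# Fabricated sample decisions, for illustration only.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(sample)
print(rates, parity_gap(rates))
```

A large gap does not prove discrimination on its own, but it flags where a closer look at the model and its training data is warranted.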

Industry-Specific Adaptation Strategies

Different sectors face unique challenges in leveraging AI agent tools effectively:

Healthcare Integration Approaches

Healthcare professionals can maximize the value of AI agent tools while maintaining quality care by:

- Using AI diagnostics as "second opinions" rather than primary decision-makers

- Implementing clear protocols for when AI recommendations should trigger human review

- Maintaining direct patient relationships while allowing AI to handle administrative tasks

- Developing specialized AI literacy for clinical contexts

With these approaches, healthcare workers can focus their expertise on complex cases, patient communication, and holistic care while AI agent tools enhance efficiency and consistency.

Creative Industry Adaptation

Creative professionals facing disruption from generative AI agent tools can develop strategies including:

- Positioning human creativity as premium "artisanal" offerings distinct from AI-generated content

- Developing expertise in directing and refining AI outputs rather than competing with raw generation

- Creating hybrid workflows where AI handles technical aspects while humans focus on conceptual direction

- Establishing clear attribution and transparency practices regarding AI involvement

These approaches allow creative professionals to leverage AI agent tools while maintaining distinctive value propositions.

Manufacturing and Production Evolution

In industrial contexts, effective human-AI collaboration typically involves:

- Implementing clear handoff protocols between automated and human-operated processes

- Developing supervisory roles focused on quality assurance and exception handling

- Creating integrated teams where humans and robots/AI systems work alongside each other

- Establishing training programs to transition workers from operational to supervisory roles

By thoughtfully implementing these strategies, organizations across sectors can effectively leverage AI agent tools while creating sustainable roles for human workers. The most successful will view AI integration not as a one-time implementation but as an ongoing collaboration requiring continuous adaptation and learning.

FAQs About AI Agent Tools

Q: Are AI agent tools legal to use in my business?

A: Yes, AI agent tools are generally legal to use in business contexts, but legal considerations vary depending on your application, industry, and jurisdiction.

Q: Are AI agent tools safe for processing sensitive information?

A: The safety of AI agent tools for sensitive information processing depends on several factors:

- Security implementations: Enterprise-grade AI agent tools typically employ encryption, access controls, and security auditing

- Deployment model: Private cloud or on-premises deployments offer greater control than public APIs

- Data handling policies: Review whether data is retained for model training and who maintains access

- Vendor credibility: Established providers often offer more robust security guarantees

Always conduct thorough risk assessments for specific use cases involving sensitive information.

Q: What are the current AI agent tool trends shaping the industry?

A: Several significant AI agent tool trends are currently reshaping the landscape:

1. Multimodal capabilities: The integration of text, image, audio, and video understanding within unified AI agent tools is accelerating, enabling more versatile applications.

2. Fine-tuning and customization: The ability to adapt foundation models to specific domains and tasks without massive training datasets is democratizing access to specialized AI capabilities.

3. Agent frameworks: Systems enabling autonomous planning, tool use, and execution of complex tasks are emerging as a major frontier, with frameworks like AutoGPT and LangChain gaining traction.

4. Local deployment: Optimized models capable of running on edge devices or local infrastructure are addressing privacy concerns and enabling offline applications.

5. Retrieval-augmented generation: Techniques combining large language models with the ability to access and reason over external data sources are significantly enhancing accuracy and reducing hallucinations.

6. Responsible AI integration: Growing emphasis on explainability, fairness auditing, and governance frameworks is shifting from optional to essential in enterprise deployments.

These AI agent tool trends suggest continued rapid evolution toward more capable, customizable, and responsibly deployed systems across industries.
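To make the retrieval-augmented generation trend (item 5) more concrete, here is a minimal sketch of the idea: fetch the most relevant passages first, then hand them to the model as context. Everything in it is an illustrative assumption rather than any framework's actual API. The function names (`tokenize`, `retrieve`, `build_prompt`) and the sample documents are hypothetical, and simple keyword overlap stands in for the embedding-based similarity search that production systems typically use.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most tokens with the query.

    Keyword overlap is a stand-in here; real systems usually rank by
    vector similarity over embeddings.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base, for illustration only.
DOCS = [
    "The refund window is 30 days from purchase.",
    "Support is available by email on weekdays.",
    "Shipping takes five business days.",
]

if __name__ == "__main__":
    print(build_prompt("What is the refund window?", DOCS, k=1))
```

The resulting prompt would then be sent to whichever language model you use. Because the model is asked to answer from the supplied passages rather than from memory alone, this pattern tends to reduce unsupported answers.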

Q: How do I measure the ROI of implementing AI agent tools?

A: Measuring return on investment for AI agent tools requires a comprehensive approach:

1. Direct cost savings

2. Productivity enhancements

3. Revenue impacts

4. Implementation costs

5. Risk-adjusted evaluation

For most organizations, I recommend establishing clear baseline metrics before implementation and creating a balanced scorecard that captures both quantitative and qualitative impacts across these dimensions.
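As a rough illustration, the five dimensions above can be folded into a single risk-adjusted ROI figure. The sketch below is one simple way to do it, not a standard formula: the field names, the single `success_probability` discount, and the sample figures are all assumptions made for the example, and a real evaluation would also capture the qualitative impacts a scorecard is meant to track.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    """Annualised estimates, all in the same currency (hypothetical fields)."""
    cost_savings: float          # 1. direct cost savings
    productivity_gains: float    # 2. value of time saved or extra output
    revenue_impact: float        # 3. incremental revenue
    implementation_cost: float   # 4. licences, integration, training
    success_probability: float = 1.0  # 5. risk adjustment, between 0 and 1


def risk_adjusted_roi(inputs: RoiInputs) -> float:
    """Return (expected benefit - cost) / cost as a fraction."""
    if inputs.implementation_cost <= 0:
        raise ValueError("implementation_cost must be positive")
    if not 0.0 <= inputs.success_probability <= 1.0:
        raise ValueError("success_probability must be between 0 and 1")

    gross_benefit = (
        inputs.cost_savings
        + inputs.productivity_gains
        + inputs.revenue_impact
    )
    # Discount the benefit by the chance the project delivers as planned.
    expected_benefit = inputs.success_probability * gross_benefit
    return (expected_benefit - inputs.implementation_cost) / inputs.implementation_cost


if __name__ == "__main__":
    # Made-up numbers, for illustration only.
    example = RoiInputs(
        cost_savings=120_000,
        productivity_gains=80_000,
        revenue_impact=50_000,
        implementation_cost=100_000,
        success_probability=0.5,
    )
    print(f"Risk-adjusted ROI: {risk_adjusted_roi(example):.0%}")  # prints 25%
```

With these sample inputs the gross benefit is 250,000, the expected benefit after the 50% risk discount is 125,000, and the ROI is (125,000 - 100,000) / 100,000 = 25%. Running the same calculation against your pre-implementation baseline gives you a consistent figure to compare across projects.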

Conclusion

The evolution of AI agent tools represents one of the most significant technological transformations of our era, comparable in scope and impact to the advent of the internet or mobile computing. As we've explored throughout this analysis, these intelligent systems have progressed from simple rule-based programs to sophisticated agents capable of complex reasoning, creativity, and autonomous action across diverse domains.

The trajectory of AI agent tools suggests continued rapid advancement, with multimodal capabilities, improved reasoning, and greater autonomy emerging as key frontiers. Current AI agent tool trends point toward more seamless integration into workflows, enhanced customization capabilities, and expanded application across previously resistant domains.

Yet this technological progress brings both remarkable opportunities and profound challenges. AI agent tools offer unprecedented efficiency gains, quality improvements, and innovation potential while simultaneously raising complex questions about employment transitions, ethical governance, and the evolving relationship between human and artificial intelligence.

The most successful organizations and individuals will be those who approach AI agent tools neither with uncritical enthusiasm nor reflexive resistance, but rather with thoughtful discernment. This means:

- Leveraging AI capabilities where they genuinely add value while preserving human judgment in contexts requiring ethical reasoning, creativity, and interpersonal connection

- Developing governance frameworks that promote innovation while addressing legitimate concerns about privacy, bias, transparency, and accountability

- Investing in education and reskilling initiatives that prepare workforces for effective human-AI collaboration

- Engaging in ongoing societal dialogue about the appropriate role and limitations of increasingly autonomous systems

As AI agent tools continue their rapid evolution, the question is not whether they will transform our professional and personal landscapes—they already are—but rather how we will shape this transformation to reflect our collective values and aspirations. By approaching these powerful technologies with both enthusiasm for their potential and clear-eyed recognition of their limitations, we can work toward a future where artificial and human intelligence complement each other in service of meaningful human flourishing.

The journey of AI agent tools has only begun, and we all have a role in determining its direction. The choices we make today—as developers, users, policymakers, and citizens—will shape the impact of these transformative technologies for generations to come.
