In recent years, AI voice cloning has become one of the most eye-catching and disruptive technologies in artificial intelligence. It has developed from simple speech synthesis into complex systems that can closely reproduce a human voice. It has changed the way we interact with machines and opened up new possibilities across many industries.

So what is AI voice cloning, and how did it evolve from early speech technology into the powerful tool it is today? In this blog, we will look at its evolution, its current capabilities and limitations, and the ethical issues surrounding it.

Making machines "speak" has been studied in artificial intelligence for many years. AI voice cloning goes a step further: its goal is not only to synthesize speech but to copy the characteristics of a specific person's voice. Unlike ordinary text-to-speech, voice cloning can imitate a person's timbre, intonation, and even emotional nuances.

This ability has a wide impact. Content creation, accessibility technology, and the entertainment industry all offer plenty of room for application. It also comes with risks, such as abuse. Next, let's take a deeper look at how AI voice cloning has developed, where it stands now, and where it may go in the future.

How Has AI Voice Cloning Technology Evolved?

The journey toward AI voice cloning began with concatenative synthesis systems, which stitched together pre-recorded voice clips and were still the dominant approach in the early 2000s. The speech these early systems produced was robotic and easily distinguishable from human speech.

Early Stages of Speech Synthesis

The initial stages of speech synthesis focused on producing clear, understandable speech rather than imitating specific voices. Early text-to-speech engines prioritized clarity over personalization and relied heavily on linguistic rules and phonetic principles to build artificial voices.

Breakthroughs in statistical parametric speech synthesis, especially techniques based on Hidden Markov Models (HMMs), led to more natural voices. Commercial offerings from vendors such as Loquendo and Acapela Group made significant progress, but still retained an artificial sound quality that was easily recognizable.

Deep Learning Revolution

The deep learning revolution around 2016 brought about a sea change in AI voice cloning. WaveNet, developed by Google's DeepMind, marked a paradigm shift by using neural networks to generate raw audio waveforms directly. This approach produced more natural speech with more accurate intonation and rhythm.

Following WaveNet, in 2018 Lyrebird (later acquired by Descript) emerged as one of the first companies to offer personalized voice cloning services from just one minute of recording. This marked a huge leap forward in the accessibility and ease of use of voice cloning technology.

Around the same time, Google's Tacotron 2 and Baidu's Deep Voice 3 further refined the neural approach to speech synthesis. These systems could generate highly human-like speech with appropriate stress and intonation patterns.

Current State of AI Voice Cloning

Today's AI voice cloning technology often uses advanced architectures that combine Transformer models with Generative Adversarial Networks (GANs). Voice cloning services from companies such as ElevenLabs, Resemble.AI, and Play.ht can replicate a voice from as little as 30 seconds of sample audio.

Modern AI voice cloning tools incorporate several key technologies:

1. Neural vocoding: Converting spectral features into waveforms

2. End-to-end neural synthesis: Generating speech directly from text input

3. Zero-shot and few-shot learning: Cloning speech with minimal training data

4. Emotion and style transfer: Copying not only voice quality but also emotional tone
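
As a rough illustration of the first item, the sketch below turns a sequence of spectral frames into a waveform. It is not a neural vocoder: it substitutes plain additive sine synthesis for the learned model, and the frame format (a mapping from frequency in Hz to amplitude) along with the names `synthesize`, `sample_rate`, and `hop` are assumptions made for this example only.

```python
import math

def synthesize(frames, sample_rate=16000, hop=160):
    """Render spectral frames as audio samples using additive sine synthesis.

    frames: list of dicts mapping frequency (Hz) to amplitude. Each frame
    covers `hop` output samples. This is a toy stand-in for a neural vocoder.
    """
    samples = []
    phases = {}  # running phase per frequency, so tones stay continuous across frames
    for frame in frames:
        for _ in range(hop):
            value = 0.0
            for freq, amp in frame.items():
                phase = phases.get(freq, 0.0)
                value += amp * math.sin(phase)
                phases[freq] = phase + 2.0 * math.pi * freq / sample_rate
            samples.append(value)
    return samples

# Two 10 ms frames: a 220 Hz tone, then the same tone plus a quieter overtone.
frames = [{220.0: 0.5}, {220.0: 0.5, 440.0: 0.2}]
audio = synthesize(frames)
```

A real neural vocoder replaces the inner loop with a trained network that predicts samples from mel-spectrogram frames, but the input and output shapes follow the same idea: compact spectral features in, a dense waveform out.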

The latest systems can handle multilingual content, maintain consistency across long texts, and even preserve accents and voice idiosyncrasies. For example, Descript’s Overdub feature allows content creators to edit audio by simply editing the text, and the AI seamlessly fills in the speech.

Is AI voice cloning legal? Generally speaking, it is legal with proper consent and for legitimate purposes. However, specific regulations vary by jurisdiction, and cloning someone else’s voice without authorization may violate privacy laws or publicity rights.

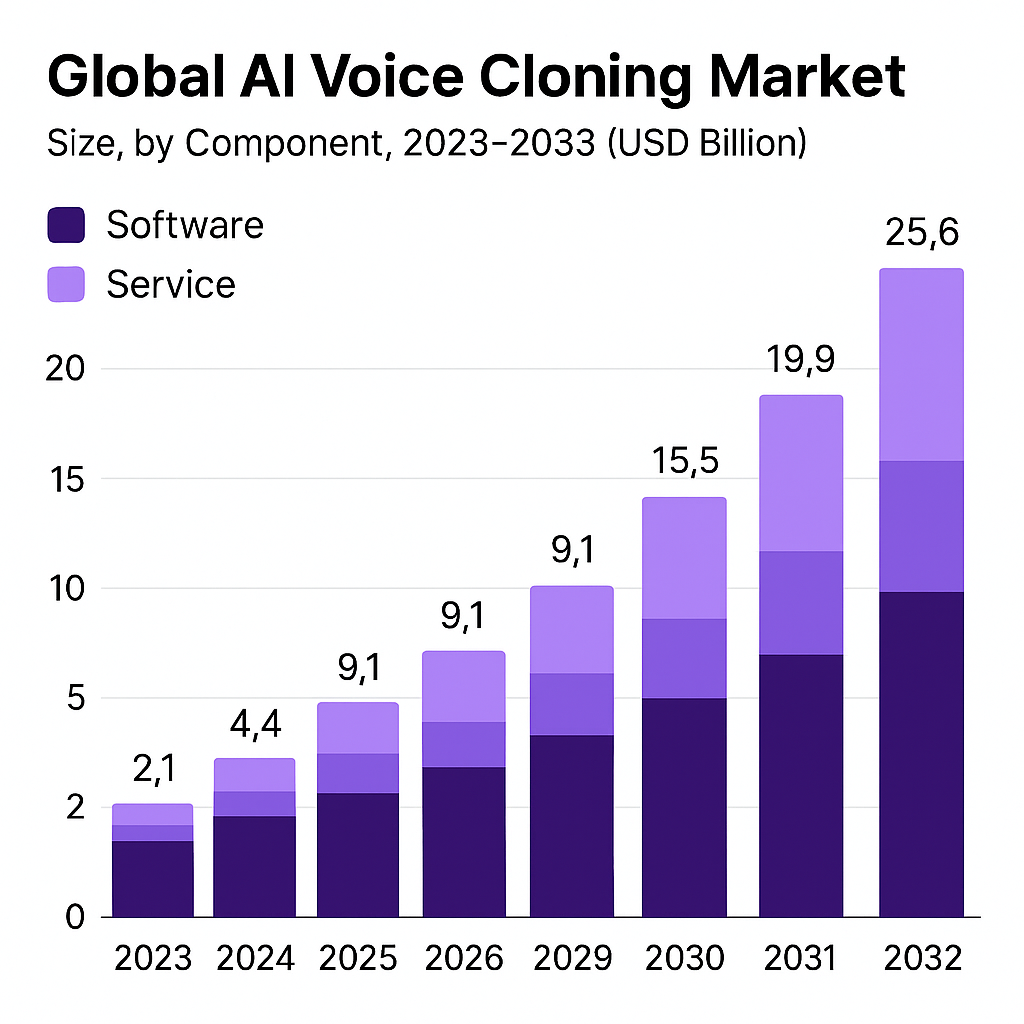

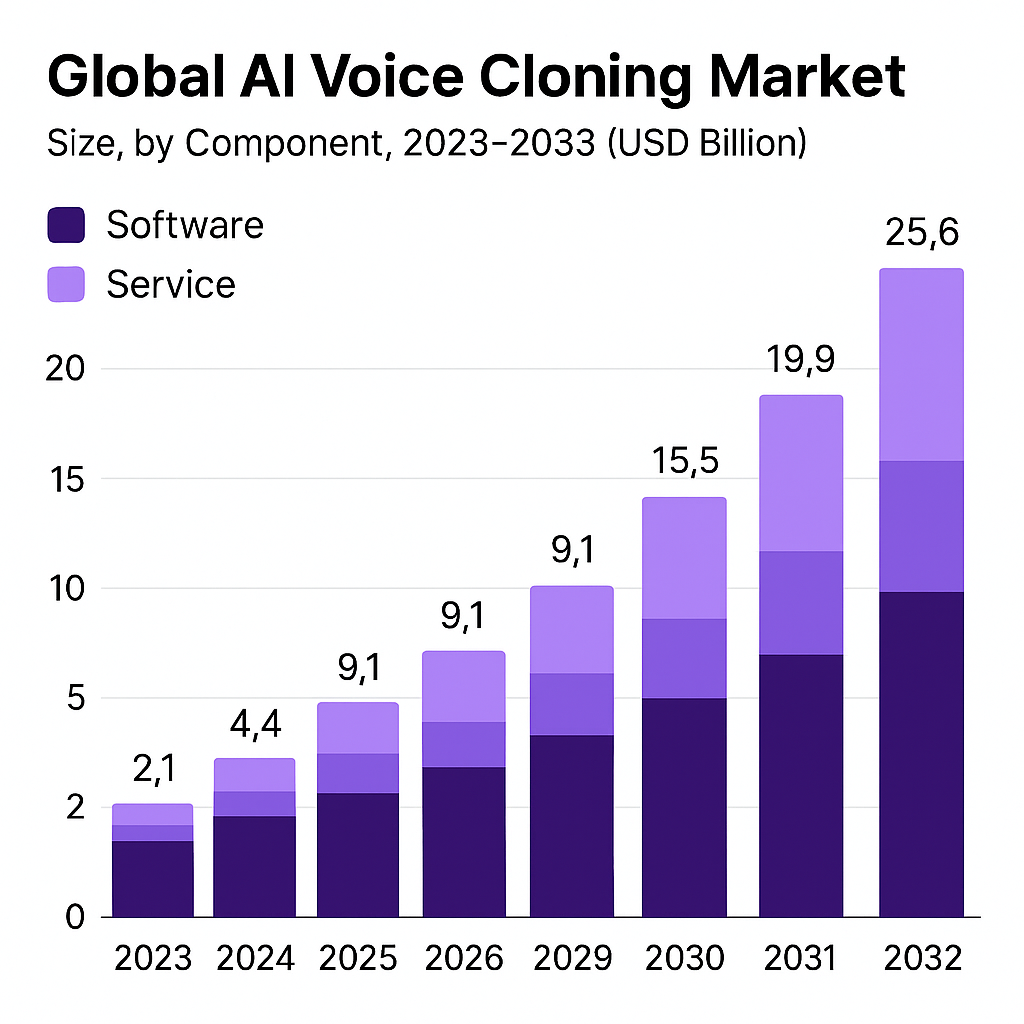

Data Source

What Are the Advantages and Limitations of AI Voice Cloning?

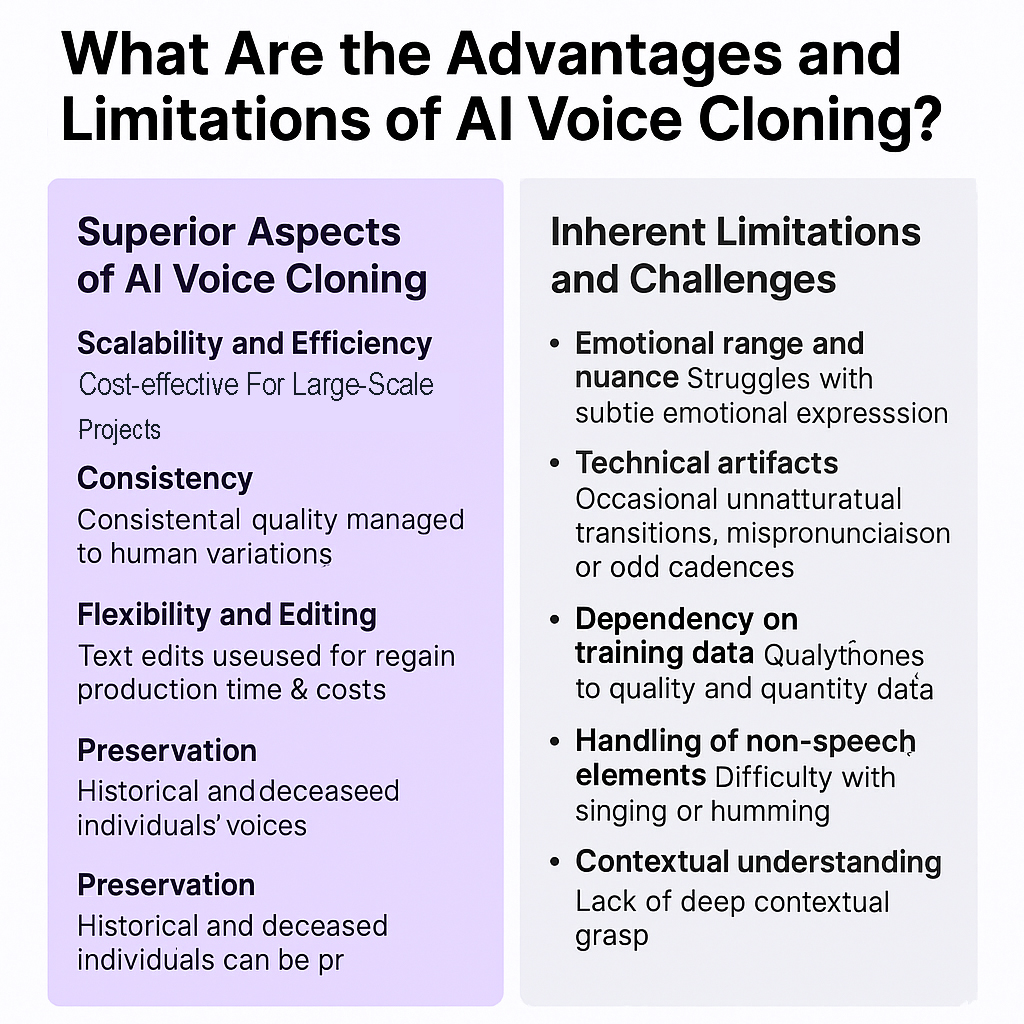

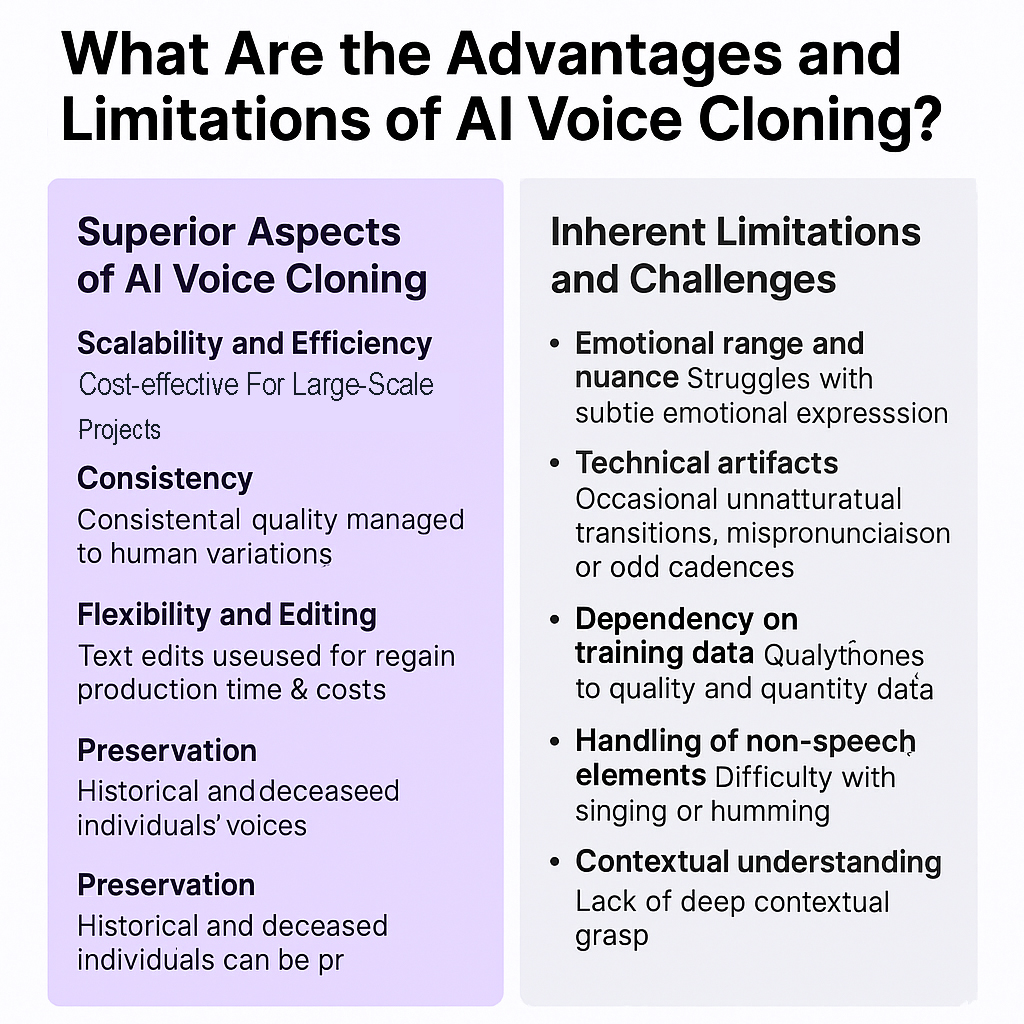

Superior Aspects of AI Voice Cloning

When compared to human voice recording, AI voice cloning offers several distinct advantages:

Scalability and Efficiency: Once a voice model is trained, it can generate unlimited content without requiring the original speaker to record anything. This makes it incredibly cost-effective for large-scale projects. For example, a company can localize their advertisements into dozens of languages without booking multiple recording sessions.

Consistency: Human voice actors may have variations in their performance due to factors like health, mood, or time of day. AI voice cloning delivers consistent quality regardless of when content is generated. This consistency is particularly valuable for long-form content like audiobooks or extensive educational materials.

Accessibility: AI voice cloning enables people who have lost their voice due to medical conditions to regain a synthetic version of their own voice. Companies like VocaliD and ModelTalker have helped ALS patients preserve their vocal identity through AI voice banking.

Flexibility and Editing: Making changes to AI-generated speech requires simple text edits rather than re-recording sessions. This flexibility dramatically reduces production time and costs. According to industry data, post-production editing time can be reduced by up to 80% when using AI voice cloning instead of traditional voice recording.

Preservation: Historical voices or those of deceased individuals can be preserved and even used to create new content (with appropriate permissions). This has applications in museums, documentaries, and educational materials.

Inherent Limitations and Challenges

Despite its impressive capabilities, AI voice cloning faces several significant limitations:

Emotional Range and Nuance: While AI voice cloning has improved dramatically, it still struggles with conveying subtle emotional nuances that human actors deliver effortlessly. The most sophisticated AI voice cloning tools still have difficulty with authentic-sounding laughter, crying, or conveying complex emotional states like sarcasm or wistfulness.

Technical Artifacts: Even the best systems occasionally produce artifacts like unnatural transitions, mispronunciations of unusual words, or strange cadences in certain contexts. These artifacts occur because the models don't truly understand the semantic content they're vocalizing—they're pattern-matching based on training data.

Dependency on Training Data: The quality of an AI voice clone directly correlates with the quality and quantity of training data. Noisy recordings, inconsistent speech patterns, or limited samples result in lower-quality clones. This presents a particular challenge when trying to clone voices from historical recordings or limited source material.

Handling of Non-Speech Elements: AI voice cloning often struggles with non-speech vocal elements like singing, humming, or unusual vocal techniques. While specialized systems exist for music and singing, they typically require separate models from speech synthesis.

Contextual Understanding: Current AI voice cloning systems lack deep contextual understanding of the content they're reading, which can lead to inappropriate emphasis or tone for certain passages. This limitation necessitates human review and editing for critical applications.

Is AI voice cloning safe? While the technology itself is neutral, its safety depends entirely on implementation, usage policies, and security measures. Without proper safeguards, voice cloning can pose significant risks, as we'll explore later.

How Is AI Voice Cloning Impacting Various Industries?

The influence of AI voice cloning extends across numerous sectors, creating both opportunities and challenges. Let's examine these impacts across several key industries:

Entertainment and Media

In film and television, AI voice cloning has transformed post-production processes. Studios can now use voice cloning to fix dialogue issues without bringing actors back for expensive re-recording sessions.

The gaming industry has embraced AI voice cloning to expand the dialogue possibilities for characters without requiring voice actors to record thousands of additional lines. This allows for more dynamic storytelling and reduces production costs. Companies like Sonantic (acquired by Spotify) specialize in creating emotional AI voices for games and interactive media.

However, this technology poses threats to voice actors, particularly those who perform in commercials and narration. Jobs that once required unique human talent can now be automated once an initial voice model is created. Voice actors' unions like SAG-AFTRA have begun addressing this issue through contract negotiations to ensure fair compensation when an actor's voice is cloned.

Healthcare and Accessibility

In healthcare, AI voice cloning offers remarkable benefits for patients with speech impairments. Organizations like VocaliD create personalized synthetic voices for individuals who have lost their ability to speak due to conditions like ALS, stroke, or throat cancer.

For people with reading disabilities or visual impairments, AI voice cloning enables the rapid creation of high-quality audiobooks and educational materials in voices that are engaging and natural. This accessibility enhancement helps reduce barriers to education and information.

The COVID-19 pandemic accelerated the adoption of AI voice cloning in telehealth, allowing for multilingual communication and clear health instructions to diverse patient populations when in-person interpreters weren't available.

Customer Service and Corporate Communications

Many companies have implemented AI voice cloning to standardize their brand voice across different channels. Instead of hiring multiple voice actors for different campaigns, a single voice can be cloned and deployed consistently across all customer touchpoints.

Virtual assistants and IVR (Interactive Voice Response) systems increasingly use voice cloning to create more personalized and human-like interactions. According to industry research, customers respond more positively to AI voices that sound natural and consistent.

However, this shift threatens traditional call center jobs, as companies can now automate more complex customer interactions with convincing AI voices. The solution may lie in transitioning these workers to oversight and quality assurance roles for AI systems rather than direct customer communication.

Education and Training

Educational institutions are using AI voice cloning to create personalized learning experiences. A teacher's voice can be cloned to provide feedback and guidance even when they're not physically present, maintaining a consistent learning relationship.

Language learning applications benefit significantly from AI voice cloning by generating native-sounding speech in multiple languages and dialects. This helps learners develop more authentic pronunciation and listening skills.

Corporate training programs use voice cloning to create consistent training materials across global operations, ensuring that all employees receive the same quality of instruction regardless of location.

To address the ethical concerns in these applications, companies and institutions should:

1. Obtain explicit consent from voice talent

2. Be transparent about AI usage

3. Fairly compensate voice contributors

4. Implement robust security measures to prevent unauthorized access

AI voice cloning tools are becoming increasingly accessible, with platforms like ElevenLabs, Play.ht, and Resemble.AI offering varying levels of sophistication and customization. These tools have democratized access to high-quality voice synthesis, but with this accessibility comes responsibility.

What Ethical Concerns Does AI Voice Cloning Raise?

The rapid advancement of AI voice cloning technology has outpaced ethical frameworks and regulations, leading to several serious concerns:

Consent and Ownership

One of the most fundamental ethical issues surrounds consent. Who owns a person's voice, and can it be cloned without permission? While professional voice actors might have contractual protections, ordinary individuals could have their voices cloned from publicly available recordings.

The legal landscape remains murky, with voice not clearly protected in many jurisdictions. In the United States, right-of-publicity protections vary from state to state, and not all of them explicitly cover a person's voice. The European GDPR offers some protection, since voice data can qualify as biometric data when it is used to identify a person, but global standards are inconsistent.

Misinformation and Deepfakes

Perhaps the most alarming ethical concern is the role of AI voice cloning in creating convincing misinformation. Combined with other deepfake technologies, cloned voices can create fabricated scenarios that appear authentic.

Political manipulation represents a significant threat, as fabricated audio of politicians making inflammatory statements could influence elections or international relations. The capacity to generate fake news at scale with authentic-sounding voices undermines trust in authentic media sources.

Recent incidents demonstrate this danger. In 2023, AI-generated phone calls impersonating political candidates were reported during local elections in several countries. Detection technologies struggle to keep pace with generation capabilities, creating an asymmetric advantage for bad actors.

Privacy and Security Risks

Voice contains unique biometric information that can be used for authentication purposes. As financial institutions and other services adopt voice verification, AI voice cloning poses serious security risks.

Identity theft becomes more sophisticated when attackers can clone voices. Traditional security measures like knowledge-based authentication may be inadequate when combined with social engineering and voice cloning.
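
To make the risk concrete, here is a minimal sketch of a similarity-only voice check. It assumes the verifier compares fixed-length speaker embeddings with cosine similarity, which is one common design but is not described in this article. The vectors, the `accepts` helper, and the 0.8 threshold are all made up for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def accepts(enrolled, candidate, threshold=0.8):
    """Hypothetical verifier: accept when the embeddings are similar enough."""
    return cosine_similarity(enrolled, candidate) >= threshold

# Invented 3-dimensional embeddings; real systems use hundreds of dimensions.
enrolled = [0.9, 0.1, 0.4]         # the account holder's stored voiceprint
close_clone = [0.88, 0.12, 0.41]   # a clone whose embedding lands nearby
other_speaker = [0.1, 0.9, 0.2]    # an unrelated voice

clone_passes = accepts(enrolled, close_clone)
stranger_passes = accepts(enrolled, other_speaker)
```

In this toy setup the clone is accepted and the unrelated speaker is rejected, which is why a similarity score on its own is a weak defense once cloned audio can land close to the enrolled voiceprint.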

The Federal Trade Commission (FTC) has noted a significant increase in voice cloning scams targeting elderly individuals. These scams often impersonate loved ones claiming to be in emergencies and requesting immediate financial assistance.

Economic and Labor Impacts

The displacement of voice actors, narrators, and other professionals represents a significant ethical concern. While AI creates new opportunities, it also eliminates traditional career paths in voice work.

Questions about fair compensation remain unresolved. If a voice actor's voice is cloned, should they receive ongoing royalties for all content generated with their voice? Current industry practices vary widely, with many voice professionals reporting unfair terms.

Cultural and Artistic Integrity

Voice cloning raises questions about posthumous usage of voices. Is it ethical to recreate the voices of deceased individuals for new content they never consented to? The recreation of celebrity voices for commercial purposes has already generated controversy and litigation.

There are also concerns about authenticity and artistic integrity. If AI can perfectly mimic human emotional expression, does this devalue genuine human artistry and performance? Some argue that knowing content was human-created carries inherent value that AI-generated content lacks.

Is AI voice cloning legal? While the technology itself isn't illegal, its application can violate various laws depending on usage. Unauthorized impersonation for fraud is clearly illegal, while creative applications with proper consent may be perfectly legitimate.

How Can We Responsibly Utilize AI Voice Cloning?

To harness the benefits of AI voice cloning while mitigating its risks, we need a multi-faceted approach:

Developing Robust Consent Frameworks

Clear, informed consent mechanisms must be established for voice data collection and usage. This includes:

- Explicit opt-in consent rather than buried terms of service

- Transparent disclosure of how voice data will be used

- Options to revoke consent and have voice models deleted

- Temporal and context limitations on voice model usage

Industry standards should include Voice Rights Agreements (VRAs) that outline compensation, usage limits, attribution requirements, and termination conditions. Organizations like the Voice Actor's Guild have proposed model contracts that balance innovation with protection.
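
The consent requirements listed above could be modeled as a small record that is checked before any audio is generated. This is only a sketch: the `VoiceConsent` class, its field names, and the example values are hypothetical and do not come from any existing standard or agreement.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class VoiceConsent:
    speaker: str
    opted_in: bool                 # explicit opt-in, never implied
    allowed_contexts: frozenset    # context limits, e.g. {"audiobook"}
    expires: date                  # temporal limit on voice model usage
    revoked_on: Optional[date] = None

    def revoke(self, when: date) -> None:
        """Record that the speaker withdrew consent on the given date."""
        self.revoked_on = when

    def permits(self, context: str, when: date) -> bool:
        """Return True only if this use is opted in, unexpired, unrevoked, and in scope."""
        if not self.opted_in:
            return False
        if self.revoked_on is not None and when >= self.revoked_on:
            return False
        return when <= self.expires and context in self.allowed_contexts

consent = VoiceConsent("Sample Speaker", True, frozenset({"audiobook"}), date(2026, 12, 31))
ok_before = consent.permits("audiobook", date(2025, 6, 1))
wrong_context = consent.permits("advertising", date(2025, 6, 1))
consent.revoke(date(2025, 7, 1))
ok_after = consent.permits("audiobook", date(2025, 8, 1))
```

Here the audiobook use is allowed before revocation, the advertising use is refused because it falls outside the agreed context, and the same audiobook use is refused once consent has been revoked. Deleting the voice model itself would be a separate step that this sketch does not cover.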

Implementing Technical Safeguards

Technical solutions can help mitigate misuse:

Watermarking: Embedding inaudible markers in AI-generated audio to enable detection. Companies like Adobe have pioneered Content Authenticity Initiative tools that embed origin information in digital content.

Voice Verification Systems: Developing more sophisticated authentication that can distinguish between live human speech and cloned voices. These systems look for subtle patterns in speech that current AI systems struggle to replicate.

Platform Policies: Major content distribution platforms should implement detection systems and clear policies regarding AI-generated voice content. YouTube, TikTok, and other platforms have begun developing policies but enforcement remains challenging.
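
The watermarking idea above can be sketched with a toy spread-spectrum scheme: a low-amplitude pseudo-random pattern derived from a secret key is added to the audio, and a detector that knows the key looks for that pattern by correlation. This is an assumption-laden illustration, not how any named product works. The functions `embed` and `detect`, the key values, and the `strength` setting are hypothetical, and a production system would shape the mark psychoacoustically so it is truly inaudible and survives compression.

```python
import math
import random

def _chips(key, n):
    """Pseudo-random +1/-1 sequence that only holders of `key` can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]

def embed(samples, key, strength=0.02):
    """Add the keyed pattern to the audio at low amplitude."""
    chips = _chips(key, len(samples))
    return [s + strength * c for s, c in zip(samples, chips)]

def detect(samples, key, strength=0.02):
    """Correlate the audio with the keyed pattern and compare to half the embed strength."""
    chips = _chips(key, len(samples))
    score = sum(s * c for s, c in zip(samples, chips)) / len(samples)
    return score > strength / 2

# Host signal: 3 seconds of a 220 Hz tone sampled at 16 kHz.
host = [0.3 * math.sin(2 * math.pi * 220 * n / 16000) for n in range(48000)]
marked = embed(host, key=1234)

found_with_key = detect(marked, key=1234)
found_in_clean = detect(host, key=1234)
found_with_wrong_key = detect(marked, key=9999)
```

The correlation score sits near `strength` when the right key is applied to marked audio and near zero otherwise, so only the first of the three checks should report a watermark. Longer clips make the separation between the two cases more reliable.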

Regulatory and Legal Approaches

Regulatory frameworks must evolve to address AI voice cloning specifically:

- Creating clear legal definitions of voice ownership and rights

- Establishing penalties for fraudulent or harmful use of voice cloning

- Requiring transparency in AI-generated content

- Developing international standards to prevent regulatory arbitrage

The EU's AI Act represents the most comprehensive regulatory approach to date. Among other things, it imposes transparency obligations on AI-generated or manipulated audio such as deepfakes.

Industry Self-Regulation

Companies developing AI voice cloning tools have a responsibility to:

- Implement know-your-customer verification for high-risk applications

- Develop and enforce acceptable use policies

- Conduct ethical impact assessments before deploying new capabilities

- Engage with affected stakeholders, particularly voice professionals

Leading companies like ElevenLabs have implemented abuse prevention features that require identity verification for certain uses and restrict cloning of voices without proper documentation.

Addressing Industry Disruption

For industries disrupted by AI voice cloning, several approaches can help manage the transition:

1. Skills Transition Programs: Training voice professionals in AI prompt engineering, quality control, and direction.

2. New Business Models: Developing voice licensing platforms where voice actors can offer their voices for ethical cloning with fair compensation.

3. Hybrid Approaches: Creating workflows that combine human creativity with AI efficiency.

4. Union Agreements: Negotiating collective bargaining agreements that address AI usage, similar to those recently established in the film industry.

Education and Awareness

Public education about AI voice cloning is essential:

- Media literacy programs that teach detection of AI-generated content

- Consumer awareness campaigns about voice phishing threats

- Technical education for creators about ethical use of voice technology

- Corporate training about security protocols related to voice verification

Is AI voice cloning safe? It can be when deployed with proper safeguards, transparent policies, and clear consent mechanisms. The technology itself is neutral—it's the implementation and governance that determine safety.

FAQs About AI Voice Cloning

Q: How much audio is needed to clone someone's voice?

A: Modern AI voice cloning tools require varying amounts of sample audio, from as little as 30 seconds with systems like ElevenLabs to 5+ minutes for higher quality results. The quality and quantity of the training data directly impact the accuracy of the clone.

Q: Can AI voice cloning detect emotions?

A: While AI voice cloning can simulate emotions, it doesn't actually detect or understand them. Systems are trained to mimic emotional patterns in speech, but they lack genuine emotional intelligence. The latest systems can replicate emotional styles with increasing accuracy, but they're mimicking rather than experiencing emotions.

Q: How can I protect myself from voice cloning scams?

A: Establish verification codewords with family members, be suspicious of unexpected urgent requests for money, call back on known numbers to verify identities, and use multi-factor authentication for sensitive accounts. Never share extended voice samples on public platforms without understanding how they might be used.

Q: Are there ways to detect AI-cloned voices?

A: Yes, though detection technology lags behind generation capabilities. Listen for inconsistent breathing patterns, unusual transitions between words, unnatural emotional shifts, and processing artifacts. Professional detection tools analyze spectral inconsistencies invisible to human ears.

Q: Who owns the copyright to content created with AI voice cloning?

A: Copyright law varies by jurisdiction, but generally, the copyright for AI-generated content may belong to the person who arranged for its creation. However, using someone's voice without permission could violate other rights regardless of copyright ownership. This remains a legally complex area.

Q: Does AI voice cloning work equally well in all languages?

A: No. AI voice cloning typically performs best in widely spoken languages like English, Spanish, and Mandarin that have abundant training data. Quality in low-resource languages or specific dialects is often lower. Companies are actively working to improve multilingual capabilities.

Conclusion: Navigating the Voice Cloning Revolution

AI voice cloning represents one of the most powerful and potentially disruptive AI technologies available today. Its rapid evolution from rudimentary voice synthesis to sophisticated neural models has opened tremendous possibilities across industries while simultaneously raising profound ethical questions.

As we've explored throughout this article, AI voice cloning offers remarkable benefits: giving voice to those who have lost theirs, creating more accessible content, enabling creative possibilities previously unimaginable, and streamlining production processes. These advantages explain why AI voice cloning tools have gained such widespread adoption.

However, we cannot ignore the significant challenges this technology presents. The potential for fraud, misinformation, privacy violations, and economic disruption demands our attention. Without proper safeguards, consent frameworks, and regulatory oversight, AI voice cloning could cause substantial harm.

The responsible path forward requires collaboration between technology developers, industry stakeholders, regulators, and the public. We need technical solutions like watermarking and detection systems, legal frameworks that clarify voice rights, industry standards for ethical use, and educational initiatives that promote awareness.

The future of AI voice cloning will be determined not by technological capabilities alone but by the choices we make about how to govern and apply those capabilities. By approaching these decisions thoughtfully, we can ensure that AI voice cloning enhances human communication rather than undermining trust in it.
