AI is transforming software development, especially in code reviews. Faced with sprawling codebases and ever-shrinking timelines, developers are turning to AI Code Review to maintain quality, boost productivity, and foster better collaboration. The result? A seismic shift in how teams build and maintain software.

What began as basic static analysis has evolved into intelligent, ML-driven review systems. Today’s AI Code Review tools do more than catch bugs—they help teams ship faster, safer, and smarter. This evolution is reshaping workflows, redefining team dynamics, and challenging long-held assumptions about code quality and velocity. So, what sparked this transformation—and where is it leading us next?

How Did AI Code Review Evolve from Simple Static Analysis to Intelligent Code Assessment?

The journey of AI Code Review began with rudimentary static analysis tools that could identify basic syntax errors and common programming mistakes. Early tools such as PC-lint, which dates back to the 1980s, and Coverity, founded in the early 2000s, laid the groundwork for what would eventually become a sophisticated ecosystem of intelligent code analysis.

The Foundation Years: Static Analysis and Rule-Based Systems

The initial phase of automated code review relied heavily on predefined rules and pattern matching. Tools like FindBugs for Java and ESLint for JavaScript could identify common anti-patterns, potential null pointer exceptions, and coding style violations. However, these systems were essentially sophisticated rule engines—they could only catch what they were explicitly programmed to find.
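To make the "rule engine" idea concrete, here is a minimal sketch of a rule-based checker built on Python's standard `ast` module. The rule IDs, messages, and the `check` function are invented for illustration and do not come from FindBugs or ESLint. Every finding comes from an explicitly written predicate, which is the limitation described above.

```python
import ast

def _is_bare_except(node):
    # Matches `except:` with no exception type.
    return isinstance(node, ast.ExceptHandler) and node.type is None

def _is_none_equality(node):
    # Matches `x == None` or `x != None`, which should use `is` / `is not`.
    if not isinstance(node, ast.Compare):
        return False
    for op, right in zip(node.ops, node.comparators):
        if (isinstance(op, (ast.Eq, ast.NotEq))
                and isinstance(right, ast.Constant)
                and right.value is None):
            return True
    return False

# Hypothetical rule table: (rule id, message, predicate).
RULES = [
    ("R001", "bare 'except:' hides errors", _is_bare_except),
    ("R002", "compare to None with 'is', not '=='", _is_none_equality),
]

def check(source):
    """Return sorted (line, rule_id, message) tuples for every matching rule."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        for rule_id, message, predicate in RULES:
            if predicate(node):
                findings.append((node.lineno, rule_id, message))
    return sorted(findings)

SAMPLE = """\
def load(path):
    try:
        data = open(path).read()
    except:
        data = None
    if data == None:
        return ""
    return data
"""

for line, rule_id, message in check(SAMPLE):
    print(f"line {line}: {rule_id} {message}")
```

Adding a new check means writing another predicate by hand. Nothing here is learned from data.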

During this period, companies like Veracode and Checkmarx introduced security-focused static analysis, addressing the growing concern about software vulnerabilities. These tools represented a significant step forward, but they still suffered from high false-positive rates and limited contextual understanding.

The Machine Learning Revolution: GitHub's Semantic Code Search

The first major breakthrough came when GitHub introduced semantic code search capabilities around 2018, leveraging machine learning to understand code context rather than just syntax. This marked a pivotal moment where AI Code Review began to understand the intent behind code, not just its structure.

Microsoft's acquisition of GitHub in 2018 accelerated this evolution significantly. The combination of GitHub's vast repository of code and Microsoft's AI investments created fertile ground for innovation. It eventually led to GitHub Copilot, built on OpenAI's Codex model, which, while primarily an AI coding assistant, demonstrated the potential for AI to understand and generate contextually relevant code.

The Transformer Era: Large Language Models Transform Code Review

The introduction of transformer-based models like GPT-3 and subsequently GPT-4 marked another watershed moment. These models could understand natural language descriptions of code problems and provide human-like explanations of code issues. AI Code Review tools began incorporating these models to provide more nuanced feedback.

CodeT5, developed by Salesforce, specifically targeted code understanding tasks. Unlike general-purpose language models, CodeT5 was trained on code-specific datasets, making it particularly effective for AI code review applications. This specialization allowed for more accurate identification of bugs, security vulnerabilities, and performance issues.

Current State: Comprehensive AI-Powered Platforms

Today's AI Code Review landscape is dominated by sophisticated platforms that combine multiple AI techniques:

DeepCode (now part of Snyk) uses symbolic AI combined with machine learning to provide contextual code analysis. It can understand complex code relationships and identify subtle bugs that traditional static analysis might miss.

CodeGuru by Amazon leverages machine learning models trained on millions of code reviews from Amazon's internal development process. This real-world training data gives it unique insights into what constitutes good code practices in production environments.

SonarQube's AI-enhanced analysis now incorporates machine learning to reduce false positives and provide more relevant suggestions based on the specific context of the codebase.

Core Technologies Powering Modern AI Code Review

Modern AI Code Review systems rely on several key technologies:

Graph Neural Networks (GNNs) analyze code structure by representing programs as graphs, capturing relationships between different code elements. This allows AI Code Review tools to understand how changes in one part of the code might affect other components.

Natural Language Processing (NLP) enables these tools to understand comments, variable names, and documentation, providing context that pure syntax analysis cannot capture.

Program Analysis techniques, including data flow analysis and control flow analysis, help identify potential runtime issues and security vulnerabilities.

Machine Learning Models trained on millions of code reviews learn to identify patterns that indicate potential problems, continuously improving their accuracy over time.
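The graph representation mentioned above can be illustrated without any machine learning. The sketch below is a deliberate simplification: it builds a call graph from Python source with the standard `ast` module and walks it backwards to find which functions a change could affect. The function names `build_call_graph` and `affected_by` are hypothetical, and a real GNN-based tool would work on much richer graphs (AST, control flow, and data flow edges) with learned node embeddings.

```python
import ast

def build_call_graph(source):
    """Map each top-level function to the set of plain names it calls."""
    graph = {}
    for func in ast.parse(source).body:
        if isinstance(func, ast.FunctionDef):
            graph[func.name] = {
                node.func.id
                for node in ast.walk(func)
                if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
            }
    return graph

def affected_by(graph, changed):
    """Return every function that directly or transitively calls `changed`."""
    # Invert the graph: callee -> set of callers.
    callers = {}
    for caller, callees in graph.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)
    # Depth-first walk over the inverted edges.
    seen, stack = set(), [changed]
    while stack:
        for caller in callers.get(stack.pop(), ()):
            if caller not in seen:
                seen.add(caller)
                stack.append(caller)
    return seen

SAMPLE = """\
def parse(text):
    return text.split()

def validate(tokens):
    return len(tokens) > 0

def load(text):
    tokens = parse(text)
    return tokens if validate(tokens) else []

def main():
    return load("a b")
"""

graph = build_call_graph(SAMPLE)
print(sorted(affected_by(graph, "parse")))
```

Here a change to `parse` reaches `load` directly and `main` through `load`, which is the kind of ripple effect a graph view makes visible.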

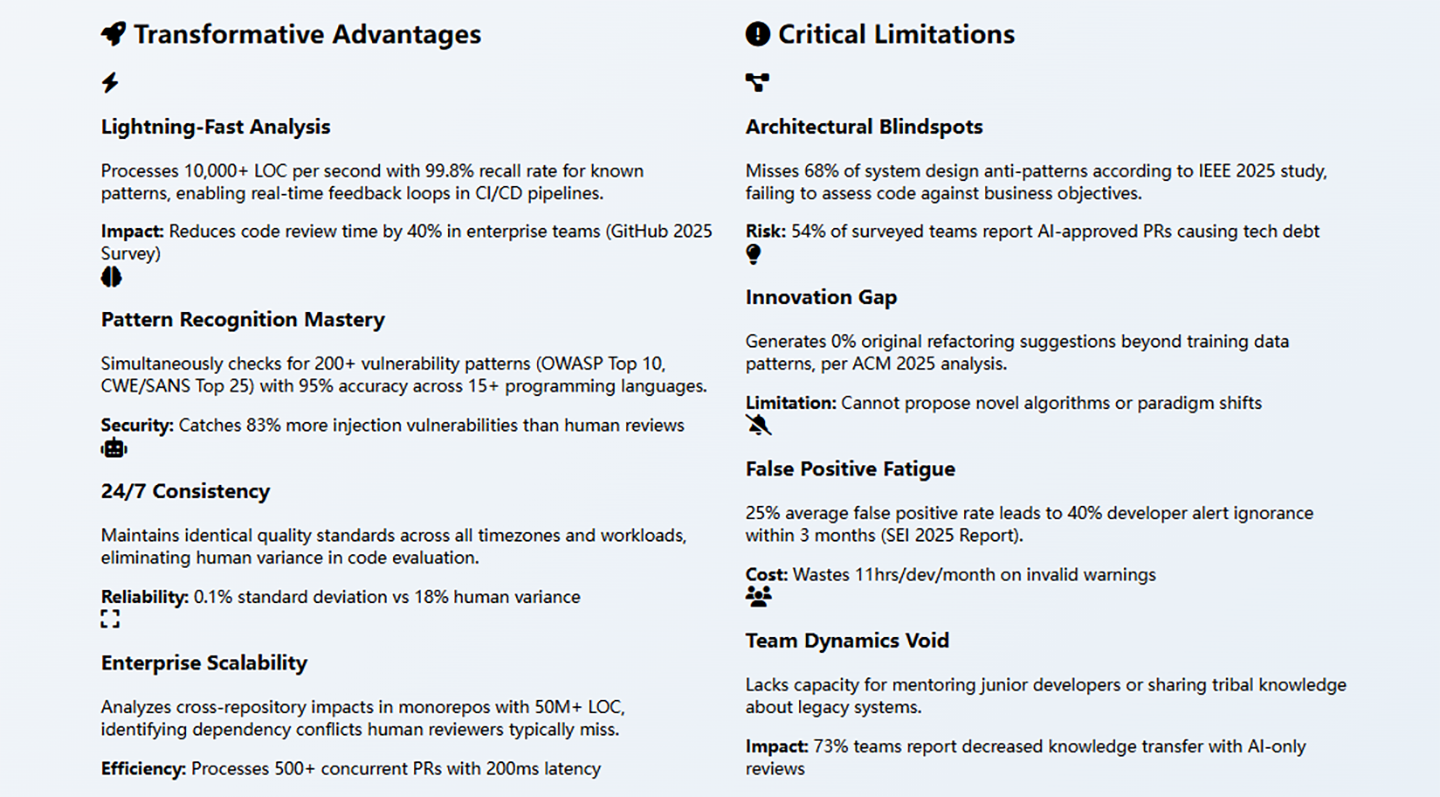

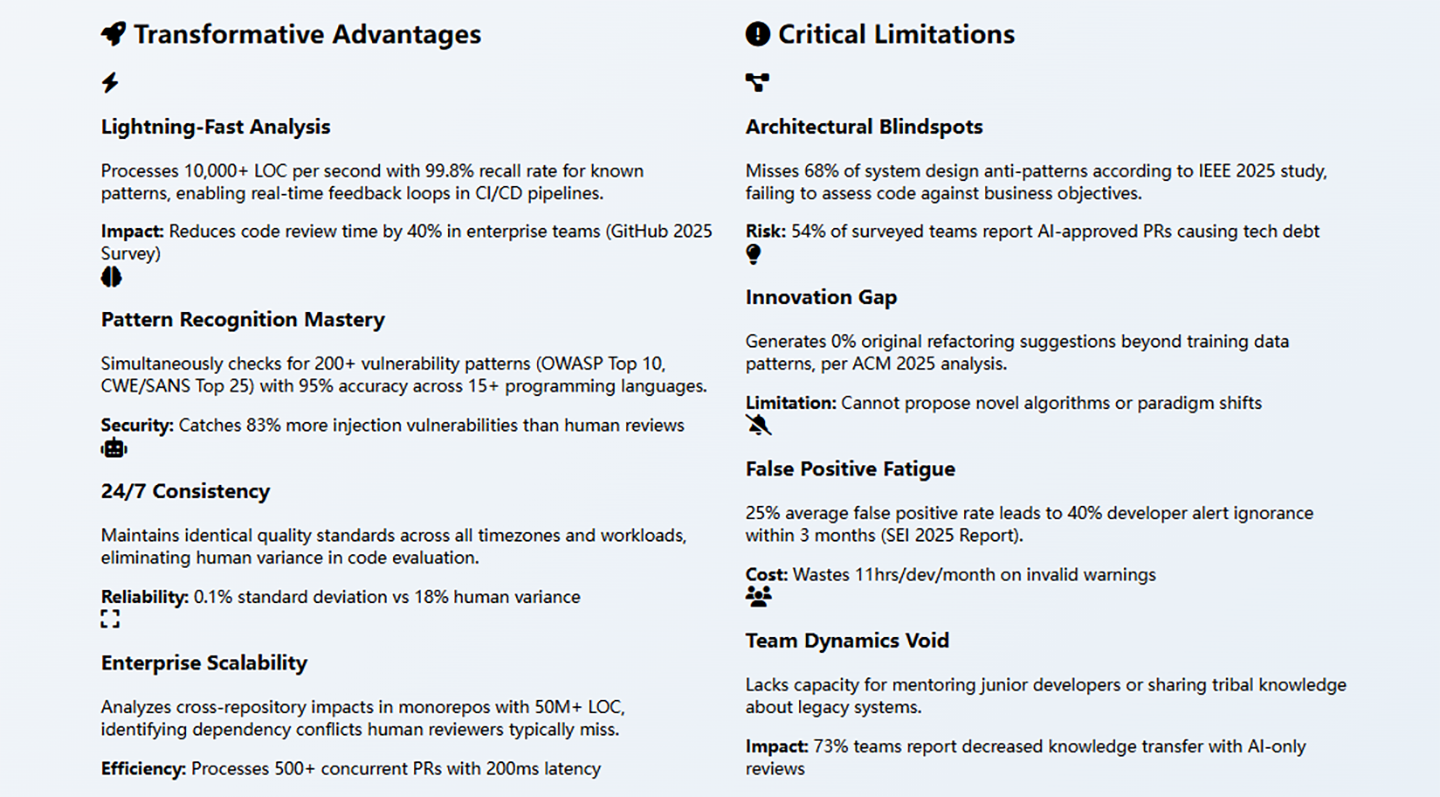

What Are the Key Advantages and Limitations of AI Code Review Compared to Human Review?

Understanding the strengths and weaknesses of AI Code Review is crucial for teams looking to integrate these tools effectively into their development workflow. Let me break down the key advantages and limitations based on real-world implementation data.

Where AI Code Review Excels: Speed, Consistency, and Scale

Unprecedented Speed and Availability

The most obvious advantage of AI Code Review is its speed. While human reviewers might take hours or even days to review complex code changes, AI systems can analyze thousands of lines of code in seconds. This speed advantage becomes particularly pronounced in large-scale development environments where multiple pull requests are submitted daily.

A study by Microsoft found that their AI-powered code review tools could process code changes 100 times faster than human reviewers for basic issues like style violations and common bug patterns. This speed doesn't just save time—it fundamentally changes the development workflow by enabling near-instantaneous feedback.

Consistency in Standards Application

Human reviewers, despite their best intentions, are prone to inconsistency. A developer might be more lenient on a Friday afternoon or more strict early Monday morning. Personal relationships, fatigue, and varying expertise levels all contribute to inconsistent code review outcomes.

AI Code Review tools, by contrast, apply the same standards consistently across all code changes. They don't have bad days, don't play favorites, and don't get tired. This consistency is particularly valuable in large teams where multiple reviewers might apply different standards to similar code changes.

Comprehensive Coverage of Known Issues

AI systems excel at identifying patterns they've been trained to recognize. They can simultaneously check for hundreds of potential issues, from simple style violations to complex security vulnerabilities. For example, AI Code Review tools can instantly identify SQL injection vulnerabilities, cross-site scripting risks, and buffer overflow conditions, all issues that human reviewers might miss under time pressure.
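As a rough illustration of what detecting a SQL injection risk can look like, the sketch below flags `execute()` calls whose query string is assembled from runtime values. It is a hand-written heuristic, not how any particular product works, and the helper names are invented for this example. It only inspects the first argument of the call, so a query built on an earlier line is missed, and it would also flag harmless concatenation of two constants.

```python
import ast

def _is_dynamic_string(node):
    """True if the expression builds a string from runtime values."""
    if isinstance(node, ast.JoinedStr):  # f"... {value} ..."
        return any(isinstance(part, ast.FormattedValue) for part in node.values)
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True  # "..." + value   or   "... %s" % value
    if (isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format"):
        return True  # "... {}".format(value)
    return False

def find_sql_injection_risks(source):
    """Return line numbers of execute() calls with a dynamically built query."""
    risky = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute"
                and node.args
                and _is_dynamic_string(node.args[0])):
            risky.append(node.lineno)
    return sorted(risky)

SAMPLE = """\
def find_user(cursor, name):
    cursor.execute(f"SELECT * FROM users WHERE name = '{name}'")

def find_user_safe(cursor, name):
    cursor.execute("SELECT * FROM users WHERE name = ?", (name,))

def find_order(cursor, order_id):
    cursor.execute("SELECT * FROM orders WHERE id = " + order_id)
"""

print(find_sql_injection_risks(SAMPLE))
```

The parameterized query in `find_user_safe` is not flagged, while the f-string and the concatenation are.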

Scalability Across Large Codebases

As codebases grow in size and complexity, human code review becomes increasingly challenging. AI Code Review tools can scale to handle massive repositories without proportional increases in review time. They can analyze the impact of changes across entire codebases, identifying potential ripple effects that human reviewers might not catch.

The Limitations: Where Human Intelligence Remains Irreplaceable

Contextual Understanding and Business Logic

While AI systems excel at pattern recognition, they often struggle with understanding the broader business context of code changes. For instance, an AI Code Review tool might flag a piece of code as inefficient without understanding that it's intentionally written to handle a specific edge case related to the business domain.

Human reviewers bring domain expertise and understanding of business requirements that AI currently cannot replicate. They can evaluate whether a code change aligns with the overall architecture vision or business objectives.

Creative Problem-Solving and Alternative Approaches

Human reviewers don't just identify problems—they often suggest creative solutions or alternative approaches. They might recommend a completely different algorithm, suggest refactoring opportunities, or propose architectural improvements that go beyond the immediate code change.

AI Code Review tools, while increasingly sophisticated, typically focus on identifying issues rather than proposing innovative solutions. They might suggest fixing a bug but won't recommend a fundamental redesign that could prevent entire classes of similar bugs.

False Positive Challenges

Despite significant improvements, AI systems still generate false positives—flagging code as problematic when it's actually correct. A study by the Software Engineering Institute found that even advanced AI Code Review tools had false positive rates ranging from 15% to 30%, depending on the type of analysis performed.

These false positives can be particularly problematic because they can lead to "alert fatigue," where developers start ignoring AI recommendations altogether. This phenomenon undermines the effectiveness of the entire system.

Understanding of Team Dynamics and Knowledge Transfer

Code review serves purposes beyond just catching bugs—it's also a mechanism for knowledge transfer and team collaboration. Human reviewers can identify opportunities for mentoring, suggest learning resources, or explain complex concepts to junior developers.

AI Code Review tools, while they can identify technical issues, cannot replicate the human aspects of code review that contribute to team development and knowledge sharing.

How Is AI Code Review Transforming Different Industries and What Challenges Does It Present?

The impact of AI Code Review extends far beyond individual development teams, creating ripple effects across entire industries and reshaping the software development landscape. Let me examine both the transformative benefits and the challenges this technology presents.

Positive Transformations Across Industries

Financial Services: Enhanced Security and Compliance

The financial sector has been one of the early adopters of AI Code Review technology, driven by stringent regulatory requirements and the critical need for secure code.

AI code review tools in financial services excel at identifying compliance violations, such as inadequate data encryption or improper handling of personally identifiable information (PII). For example, Bank of America's implementation of AI code review has reportedly helped it maintain compliance with regulations like PCI DSS and SOX by automatically flagging code that might violate these standards.

Healthcare: Ensuring Patient Safety Through Code Quality

In healthcare, where software bugs can literally be life-threatening, AI Code Review has become a critical safety mechanism.

The FDA's guidance on software as a medical device (SaMD) has made rigorous code review an expectation for many healthcare applications. AI Code Review tools help healthcare organizations meet these requirements by providing comprehensive analysis of medical software code, identifying potential failure modes that could compromise patient safety.

Automotive: Supporting the Autonomous Vehicle Revolution

The automotive industry's transition to software-defined vehicles has created unprecedented code review challenges. Tesla, Waymo, and other autonomous vehicle manufacturers process millions of lines of code for their self-driving systems. Traditional human code review simply cannot scale to handle this volume.

AI Code Review tools in automotive applications focus heavily on real-time systems analysis, identifying potential timing issues, memory leaks, and other problems that could cause system failures. Safety requirements in this sector are typically expressed as Automotive Safety Integrity Levels (ASIL), the risk classification defined in ISO 26262. One reported study found that AI-augmented code review reduced critical safety issues by 60% compared to human-only review processes.

Challenges and Negative Impacts

Job Market Disruption in Quality Assurance

The automation capabilities of AI Code Review have created legitimate concerns about job displacement in software quality assurance roles. Junior developers who previously spent significant time on manual code review are finding their roles evolving rapidly.

However, data from the Bureau of Labor Statistics suggests that while the nature of QA jobs is changing, the overall demand for skilled developers remains strong. The key is adaptation—professionals who learn to work alongside AI tools are finding new opportunities in AI system training, validation, and advanced code analysis.

Over-Reliance and Skill Degradation

A concerning trend in some organizations is the over-reliance on AI Code Review tools, leading to a degradation of human code review skills. When developers become too dependent on AI tools, they may lose the ability to perform critical analysis independently.

This challenge is particularly acute for junior developers who may never develop strong manual code review skills if they rely too heavily on AI from the beginning of their careers. Organizations need to balance AI adoption with human skill development.

Integration Complexity and Technical Debt

Implementing AI Code Review systems often requires significant changes to existing development workflows. Organizations may need to modify their continuous integration pipelines, retrain development teams, and integrate multiple AI tools with existing systems.

The complexity of these integrations can create technical debt if not managed properly. Some organizations have found themselves locked into specific AI vendor ecosystems, making it difficult to switch tools or adapt to new technologies.

Sector-Specific Challenges

Startups and Resource Constraints

While large enterprises can afford comprehensive AI Code Review solutions, startups often struggle with the cost and complexity of implementation. Many AI Code Review tools are priced for enterprise customers, making them inaccessible to smaller organizations.

This creates a potential competitive disadvantage for startups that cannot afford the same level of automated code quality assurance as their larger competitors. However, the emergence of open-source AI code review tools is beginning to address this challenge.

Legacy System Integration

Organizations with significant legacy codebases face unique challenges when implementing AI Code Review systems. These older systems may use deprecated languages, non-standard coding practices, or proprietary frameworks that AI tools struggle to understand.

The process of training AI models to understand legacy code can be time-consuming and expensive, requiring organizations to weigh the benefits of AI code review against the costs of implementation.

What Ethical Concerns Does AI Code Review Raise and How Should We Address Them?

The rapid adoption of AI Code Review technology has introduced a complex web of ethical considerations that the software development community must carefully navigate. These concerns go beyond simple technical implementation issues and touch on fundamental questions about intellectual property, privacy, and professional responsibility.

Intellectual Property and Code Ownership Dilemmas

Training Data and Copyright Infringement

One of the most significant ethical challenges facing AI Code Review systems stems from their training data. Many AI models are trained on vast repositories of open-source code, including GitHub repositories, Stack Overflow posts, and other publicly available code samples. This raises fundamental questions about intellectual property rights and fair use.

Derivative Work and Attribution

AI Code Review tools often suggest specific code changes or improvements. If these suggestions are based on patterns learned from existing codebases, they may constitute derivative works under copyright law. This creates potential liability for organizations using these tools, particularly in commercial software development.

The challenge becomes even more complex when AI tools suggest code that closely resembles existing implementations. Developers and organizations may unknowingly incorporate code suggestions that violate licensing agreements or copyright protections.

Privacy and Data Security Concerns

Code Exposure and Confidentiality

Many AI Code Review services operate in the cloud, requiring organizations to upload their source code to external servers for analysis. This creates significant privacy and security risks, particularly for organizations working on proprietary software or handling sensitive data.

Financial institutions, defense contractors, and healthcare organizations face particular challenges in this regard. Their code may contain trade secrets, security algorithms, or other sensitive information that cannot be safely shared with third-party AI services.

Data Retention and Access Policies

The policies governing how AI Code Review tools store, process, and potentially share uploaded code vary significantly between providers. Some services retain code indefinitely for model training purposes, while others promise to delete code after analysis. However, the technical reality of "deletion" in distributed cloud systems is often more complex than these policies suggest.

Organizations must carefully evaluate the data handling practices of AI Code Review providers and ensure compliance with their own security policies and regulatory requirements.

Bias and Fairness in Code Analysis

Training Data Bias

AI models inherit the biases present in their training data. If AI Code Review systems are trained primarily on code written by certain demographic groups or in specific programming paradigms, they may exhibit bias against different coding styles or approaches.

This bias can manifest in various ways, from preferring certain naming conventions to favoring specific algorithmic approaches. Such biases can perpetuate existing inequalities in software development and limit the diversity of acceptable coding practices.

Cultural and Linguistic Bias

Code comments, variable names, and documentation often reflect the cultural and linguistic background of their authors. AI Code Review tools trained primarily on English-language code may struggle with or unfairly penalize code that includes non-English comments or follows naming conventions from other languages.

This bias can create barriers for international development teams and reinforce the dominance of English-language programming practices, potentially stifling innovation and diversity in software development.

Professional Responsibility and Accountability

Liability for AI-Generated Recommendations

When AI Code Review tools provide incorrect or harmful recommendations, questions arise about liability and responsibility. If an AI tool fails to identify a critical security vulnerability, or if it suggests a change that introduces new bugs, who bears responsibility for the resulting problems?

The legal and professional frameworks for handling AI-generated recommendations in software development are still evolving. Organizations must carefully consider how to allocate responsibility between human developers and AI tools.

Transparency and Explainability

Many AI Code Review systems operate as "black boxes," providing recommendations without clear explanations of their reasoning. This lack of transparency makes it difficult for developers to understand why certain suggestions are made or to evaluate their validity.

The European Union's AI Act and similar regulations are beginning to require greater transparency in AI systems, particularly those used in high-risk applications. Software development organizations may need to ensure that their AI Code Review tools meet these transparency requirements.

How Can We Effectively Integrate AI Code Review While Mitigating Risks?

Successfully integrating AI Code Review into software development workflows requires a strategic approach that maximizes benefits while addressing the challenges and ethical concerns I've outlined. Based on industry best practices and real-world implementation experiences, here are comprehensive strategies for effective AI code review adoption.

Addressing Industry Disruption Through Strategic Implementation

Hybrid Human-AI Review Processes

Rather than replacing human reviewers entirely, the most successful implementations of AI Code Review create hybrid processes that leverage the strengths of both humans and AI. Organizations like Google and Microsoft have developed tiered review systems where AI handles initial screening and routine issues, while humans focus on complex architectural decisions and business logic validation.

This approach addresses the job displacement concerns I mentioned earlier by evolving rather than eliminating human roles. Senior developers can focus on high-value activities like mentoring, architectural design, and complex problem-solving, while AI Code Review tools handle routine quality checks and standard compliance verification.
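A tiered process like this comes down to a routing decision for each finding. The sketch below is one possible shape for that decision. The category names, the confidence threshold, and the `Finding` structure are assumptions made for illustration, not a description of how Google or Microsoft implement their systems.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    rule: str
    category: str      # e.g. "style", "security", "architecture"
    confidence: float  # tool-reported confidence in [0, 1]

# Hypothetical policy: categories the automated tier may handle on its own.
AUTO_CATEGORIES = {"style", "formatting", "lint"}
CONFIDENCE_THRESHOLD = 0.9

def route(finding):
    """Return 'auto' for routine, high-confidence findings, else 'human'."""
    if (finding.category in AUTO_CATEGORIES
            and finding.confidence >= CONFIDENCE_THRESHOLD):
        return "auto"
    return "human"

findings = [
    Finding("line-too-long", "style", 0.99),
    Finding("possible-sql-injection", "security", 0.95),
    Finding("unused-import", "lint", 0.60),
]
for f in findings:
    print(f.rule, "->", route(f))
```

Anything outside the routine categories, or anything the tool is unsure about, goes to a human reviewer by default.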

Skill Development and Training Programs

Organizations should invest in comprehensive training programs that help developers work effectively with AI tools. This includes understanding AI limitations, learning to interpret AI recommendations critically, and developing skills in AI system configuration and tuning.

Mitigating Ethical Risks Through Governance Frameworks

Implementing Data Governance Policies

Organizations must establish clear policies governing how AI Code Review tools access, process, and store code. This includes implementing data classification systems that determine which code can be processed by cloud-based AI services and which must remain on-premises.
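One way to enforce such a classification is a small gate that runs before any file leaves the organization. The sketch below assumes a path-based policy. The patterns, labels, and function names are hypothetical, and a real policy would likely also consider file content and repository ownership.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: the first matching pattern wins.
POLICY = [
    ("secrets/*", "restricted"),
    ("src/payments/*", "restricted"),
    ("src/*", "internal"),
    ("docs/*", "public"),
]
CLOUD_ALLOWED = {"public", "internal"}

def classify(path):
    """Return the classification label for a repository-relative path."""
    for pattern, label in POLICY:
        if fnmatchcase(path, pattern):
            return label
    return "restricted"  # fail closed: unknown paths stay on-premises

def can_send_to_cloud(path):
    """True only if the path's label is cleared for cloud-based analysis."""
    return classify(path) in CLOUD_ALLOWED

for path in ["docs/readme.md", "src/app/main.py",
             "src/payments/card.py", "scripts/deploy.sh"]:
    print(path, "->", "cloud" if can_send_to_cloud(path) else "on-premises")
```

Defaulting unmatched paths to the most restrictive label means a gap in the policy keeps code on-premises rather than exposing it.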

Establishing AI Ethics Committees

Leading organizations are creating AI ethics committees specifically focused on development tool usage. These committees evaluate new AI Code Review tools, establish usage guidelines, and monitor for potential bias or ethical issues.

The committee structure should include representatives from development, security, legal, and business teams to ensure comprehensive evaluation of AI tool impacts. Regular audits of AI recommendations can help identify and address bias issues before they become systemic problems.

Technical Solutions for Common Challenges

Gradual Implementation Strategies

Rather than implementing AI Code Review across entire organizations simultaneously, successful adoptions typically follow a phased approach. Start with non-critical projects or specific types of analysis (like security scanning or style checking) before expanding to comprehensive code review.

This gradual approach allows organizations to build expertise, refine processes, and address integration challenges without risking critical projects. It also provides opportunities to measure AI tool effectiveness and adjust implementation strategies based on real-world results.

Multi-Tool Integration Approaches

No single AI Code Review tool excels at all types of analysis. Successful implementations often combine multiple AI tools, each specialized for a different aspect of code review. For example, a team might run a specialized security analysis tool alongside a general-purpose code quality tool.
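Running several tools usually means the same issue gets reported more than once. A minimal sketch of merging reports follows, assuming each tool's output has already been normalized into dictionaries with `file`, `line`, and `category` keys. That shape is an assumption for this example, since real tools emit their own formats.

```python
def merge_findings(*tool_reports):
    """Combine (tool_name, findings) pairs, one entry per (file, line, category)."""
    merged = {}
    for tool_name, findings in tool_reports:
        for finding in findings:
            key = (finding["file"], finding["line"], finding["category"])
            # First report creates the entry; later ones only add the tool name.
            entry = merged.setdefault(key, {**finding, "tools": []})
            entry["tools"].append(tool_name)
    return [merged[key] for key in sorted(merged)]

security_report = [
    {"file": "app.py", "line": 12, "category": "sql-injection"},
]
quality_report = [
    {"file": "app.py", "line": 12, "category": "sql-injection"},
    {"file": "app.py", "line": 30, "category": "complexity"},
]

for item in merge_findings(("security", security_report),
                           ("quality", quality_report)):
    print(item)
```

Recording which tools agreed on a finding gives reviewers a simple signal for prioritizing.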

Addressing Privacy and Security Concerns

On-Premises and Hybrid Deployment Options

For organizations with strict security requirements, deploying AI Code Review tools on-premises or in private cloud environments addresses many privacy concerns. While this approach may require additional infrastructure investment, it provides complete control over code and data.

Hybrid deployment models, where less sensitive code is processed in the cloud while critical code remains on-premises, offer a balanced approach that maintains security while leveraging cloud-based AI capabilities.

Vendor Due Diligence and Contractual Protections

Organizations should conduct thorough due diligence on AI Code Review vendors, including security audits, compliance certifications, and data handling practice reviews. Contractual agreements should include specific provisions for data protection, retention limits, and breach notification requirements.

Regular vendor assessments ensure that AI code review providers maintain appropriate security standards and comply with evolving regulatory requirements.

Measuring Success and Continuous Improvement

Establishing Key Performance Indicators

Successful AI Code Review implementations require clear metrics for measuring effectiveness. These might include bug detection rates, false positive percentages, time-to-review metrics, and developer satisfaction scores.

Organizations should establish baseline measurements before AI implementation and track improvements over time. This data-driven approach helps justify AI investments and identify areas for improvement.
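Two of the metrics above can be computed from a simple comparison between what the tool flagged and what humans later confirmed as real defects. The sketch below assumes each issue has a stable identifier. Note that `false_positive_share` here means the fraction of flagged items that were not real defects, which is how the term is commonly used for review tools rather than the stricter statistical definition.

```python
def review_metrics(flagged, confirmed):
    """flagged: ids the tool reported; confirmed: ids verified as real defects."""
    flagged, confirmed = set(flagged), set(confirmed)
    true_positives = flagged & confirmed
    false_positives = flagged - confirmed
    return {
        # Share of real defects that the tool caught.
        "detection_rate": len(true_positives) / len(confirmed) if confirmed else 0.0,
        # Share of the tool's reports that were not real defects.
        "false_positive_share": len(false_positives) / len(flagged) if flagged else 0.0,
    }

baseline = review_metrics(flagged=[1, 2, 3, 4, 5], confirmed=[2, 3, 4, 6])
print(baseline)
```

In this made-up sample the tool caught 3 of 4 confirmed defects (0.75) and 2 of its 5 reports were false positives (0.4). Tracking the same two numbers before and after adoption gives the baseline comparison described above.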

Feedback Loops and Model Improvement

AI Code Review systems should include mechanisms for collecting developer feedback on AI recommendations. This feedback can be used to improve AI model accuracy and reduce false positives over time.

Some organizations have implemented "AI recommendation rating" systems where developers can quickly indicate whether AI suggestions were helpful, irrelevant, or incorrect. This feedback helps continuously improve AI system performance.
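A rating system like this can feed a simple report of which rules generate the most noise. The sketch below uses the three labels mentioned above. The vote minimum and the threshold are arbitrary values chosen for illustration, and `noisy_rules` is a hypothetical helper.

```python
from collections import Counter, defaultdict

def noisy_rules(ratings, min_votes=5, max_unhelpful=0.5):
    """ratings: (rule_id, label) pairs, label in helpful/irrelevant/incorrect.

    Returns rule ids with enough votes whose unhelpful share exceeds the limit.
    """
    counts = defaultdict(Counter)
    for rule_id, label in ratings:
        counts[rule_id][label] += 1
    noisy = []
    for rule_id, votes in counts.items():
        total = sum(votes.values())
        unhelpful = votes["irrelevant"] + votes["incorrect"]
        if total >= min_votes and unhelpful / total > max_unhelpful:
            noisy.append(rule_id)
    return sorted(noisy)

ratings = (
    [("magic-number", "irrelevant")] * 4 + [("magic-number", "helpful")] * 1
    + [("null-deref", "helpful")] * 6 + [("null-deref", "incorrect")] * 1
    + [("todo-comment", "irrelevant")] * 2  # too few votes to judge
)
print(noisy_rules(ratings))
```

Rules that show up in this list are candidates for retuning or disabling, which targets the alert fatigue problem directly.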

FAQs About AI Code Review

Q: How accurate are AI Code Review tools compared to human reviewers?

The accuracy of AI Code Review tools varies significantly depending on the type of analysis being performed. For well-defined issues like syntax errors, security vulnerabilities, and coding standard violations, modern AI tools can achieve accuracy rates of 85-95%. However, for complex architectural decisions and business logic validation, human reviewers remain significantly more accurate. The key is understanding that AI and human review serve complementary rather than competing roles.

Q: What's the cost difference between AI Code Review and traditional human review?

While AI Code Review tools require upfront investment in software licenses and integration, they typically provide significant cost savings over time. Studies suggest that AI tools can reduce code review time by 40-60% for routine issues, freeing human reviewers to focus on higher-value activities. However, organizations should factor in training costs, tool maintenance, and the need for ongoing human oversight when calculating total cost of ownership.

Q: What are the data privacy implications of using cloud-based AI Code Review services?

Cloud-based AI Code Review services require uploading your source code to external servers, which creates potential privacy and security risks. Organizations handling sensitive or proprietary code should carefully evaluate vendor security practices, data retention policies, and compliance certifications. For highly sensitive projects, consider on-premises or hybrid deployment options.

Conclusion: Embracing the Future of Intelligent Code Review

Gone are the days when static analysis alone could keep up. Today’s AI-driven platforms bring machine learning muscle to the table—analyzing code at lightning speed, enforcing consistent standards, and spotting subtle issues that humans often miss. Yet, this power comes with its own set of complexities: ethical implications, integration challenges, and the danger of over-reliance must all be carefully managed.

The real strength of AI Code Review lies not in replacing developers, but in amplifying their abilities. Smart teams are already blending AI automation with human insight—using algorithms to handle repetitive checks, while engineers focus on architecture, edge cases, and innovation.

Looking forward, we’re on the cusp of even greater transformation. With advances in large language models, semantic code understanding, and automated testing, the next generation of review tools will be faster, smarter, and more intuitive than ever. But with greater power comes greater responsibility.

Organizations that succeed with AI Code Review won’t just adopt the tools—they’ll build thoughtful strategies around them. That means setting clear ethical guardrails, investing in team readiness, and prioritizing synergy between human judgment and machine precision. In this new era, the goal isn’t to replace reviewers—it’s to elevate what’s possible.
